@Will_Butler My script checks whether a file (acd-check) is accessible, so if you want to use it you need to check for a file that you actually have in your drive.

You can also check the actual mount point:

    if mountpoint -q -- "/storage/.acd"; then
        echo "mount present"
    else
        echo "mount not present, remount"
        # lazy-unmount any stale mount before mounting again
        fusermount -uz /storage/.acd
        rclone mount --allow-non-empty --allow-other --read-only --max-read-ahead 14G --acd-templink-threshold 0 acd:/ /storage/.acd/
    fi

The reason I check a file instead is that it was more reliable: back when I used acd_cli, the mount could be present while the files were not accessible.
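A minimal sketch of the file-based check described above, in case it helps. The names `CHECK_FILE` and `mount_is_healthy`, the location of acd-check directly under /storage/.acd, the 10 second limit and the `/path/to/acdmount.sh` path are all assumptions for illustration, not the actual script. It tries to read one byte from the file rather than only testing that the mount point exists, and uses `timeout` (GNU coreutils) when available so a hung mount does not block the check forever.

```shell
#!/bin/bash
# Hypothetical sentinel file; it must be a file that really exists in your drive.
CHECK_FILE="${CHECK_FILE:-/storage/.acd/acd-check}"

# Returns 0 only if the file can actually be opened and read.
# An empty file still counts as readable; a missing or unreachable one does not.
mount_is_healthy() {
    local file="${1:-$CHECK_FILE}"
    if command -v timeout >/dev/null 2>&1; then
        # Give up after 10 seconds (assumed value) if the mount hangs.
        timeout 10 head -c 1 -- "$file" >/dev/null 2>&1
    else
        head -c 1 -- "$file" >/dev/null 2>&1
    fi
}

# Example use (left commented out so sourcing this file has no side effects):
# if ! mount_is_healthy; then
#     echo "files not accessible, remount"
#     fusermount -uz /storage/.acd
#     /path/to/acdmount.sh    # hypothetical path to the mount script
# fi
```

This could be run from cron or a small loop; which of the two is better probably depends on how quickly you need a dropped mount to come back.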

@toomuchio yeah, I think templink is not really needed. However, since ncw may change the defaults in future versions, it may be best to always set fixed values explicitly, so that if performance changes you know it is something in that rclone version and not a setting whose default has changed.

At the moment I have an acdmount.sh that is called when I mount at boot, or to remount if the mount drops.

( testing with these settings now )

    #!/bin/bash
    rclone mount \
        --read-only \
        --allow-non-empty \
        --allow-other \
        --dir-cache-time 5m \
        --max-read-ahead 14G \
        --acd-templink-threshold 0 \
        --no-modtime \
        --bwlimit 0 \
        --checkers 16 \
        --contimeout 15s \
        --low-level-retries 1 \
        --no-check-certificate \
        --quiet \
        --retries 3 \
        --stats 0 \
        --timeout 30s \
        --transfers 16 \
        acd:/ /storage/.acd/ &
    exit

I doubled the number of checkers. I used to have 40, BUT when I tested that I saw 10s connection timeouts in the logs due to multiple connections.

Added --no-modtime, as based on the docs it may speed things up.

Changed --contimeout to 15 seconds; the default is 1m ( @ncw I assume rclone reconnects? If that's the case I would even set it to 5 sec )

Changed --low-level-retries to 1, but I don't think mount even uses this.

--no-check-certificate, hoping for an additional speed-up

--quiet, hoping for an additional speed-up

--retries 3 ( it's the default; not sure if it is used by mount )

--timeout 30s, changed from the default 5m; not sure what will happen here

--stats 0, hoping for an additional speed-up

--transfers 16: not sure if it's used by mount, but I would set this one to my maximum number of concurrent streams plus 5.
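One way to keep all of these values pinned in a single place is sketched below, with --transfers derived from the stream count as described. The helper names `transfers_for` and `mount_flags` and the example figure of 11 concurrent streams are made up for illustration; the flags themselves are just the ones listed in this post, and whether mount honours each of them is still the open question.

```shell
#!/bin/bash
# transfers = maximum concurrent streams + 5
transfers_for() {
    echo $(( $1 + 5 ))
}

# Prints every mount flag, one word per line, with explicit fixed values.
# The first argument is the maximum number of concurrent streams (assumed 11).
mount_flags() {
    local max_streams="${1:-11}"
    printf '%s\n' \
        --read-only \
        --allow-non-empty \
        --allow-other \
        --dir-cache-time 5m \
        --max-read-ahead 14G \
        --acd-templink-threshold 0 \
        --no-modtime \
        --bwlimit 0 \
        --checkers 16 \
        --contimeout 15s \
        --low-level-retries 1 \
        --no-check-certificate \
        --quiet \
        --retries 3 \
        --stats 0 \
        --timeout 30s \
        --transfers "$(transfers_for "$max_streams")"
}

# Example use (commented out so sourcing this file does not start a mount).
# The unquoted $(...) is intentional: none of the values contain spaces.
# rclone mount $(mount_flags 11) acd:/ /storage/.acd/ &
```

With 11 streams this gives --transfers 16, the same value as in the script above.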

@ncw are any of the above settings ignored by the mount command? And is anything else missing that I could add, even if it is just the default value?

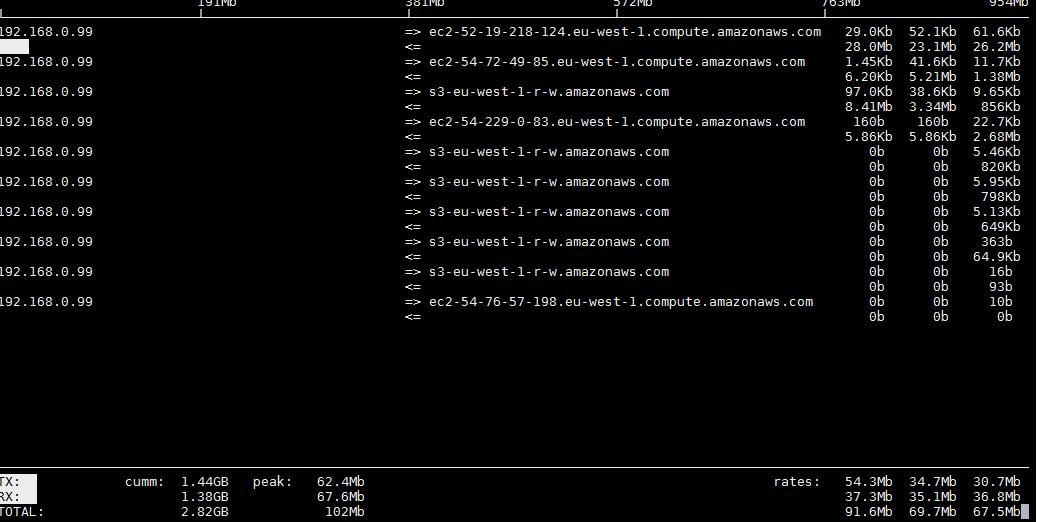

I opened multiple videos with the above settings (4 of them, 2 of which were the same 24GB file), and in iftop I saw both connections, the S3 one and the EC2 one.

So even with --acd-templink-threshold 0 the 24GB files were still connecting to S3.