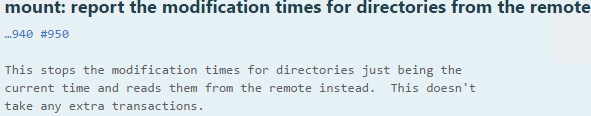

A must-have version for all Plex users: http://beta.rclone.org/v1.34-64-g13b705e/

Folders will show correct modification times now.

Does this enhancement benefit Google Drive as well, or only ACD?

So would most people say that rclone is the de facto way to mount ACD now?

Well, you can still use acd_cli, but personally, with all the problems with drive disconnects, I would never switch back.

It has been running for 16 days, checking every 15 seconds whether the drive got disconnected and logging reconnects, and so far NOT 1 disconnect has happened.
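For anyone wanting a similar check, here is a minimal Python sketch of such a monitor. It is not the poster's script: the mount path `/mnt/acd` is hypothetical, and "disconnected" is assumed to mean that listing the mount root fails.

```python
import logging
import os
import time

MOUNT_POINT = "/mnt/acd"  # hypothetical mount path; adjust to your setup
INTERVAL_SECONDS = 15     # the poll interval mentioned in the post


def mount_is_up(path: str) -> bool:
    """Treat the mount as up if its root directory can be listed."""
    try:
        os.listdir(path)
        return True
    except OSError:
        return False


def count_reconnects(states: list[bool]) -> int:
    """Count down -> up transitions in a sequence of poll results."""
    return sum(1 for prev, cur in zip(states, states[1:]) if not prev and cur)


def monitor(path: str = MOUNT_POINT, interval: float = INTERVAL_SECONDS) -> None:
    """Poll forever and log every disconnect and reconnect."""
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
    was_up = mount_is_up(path)
    while True:
        time.sleep(interval)
        is_up = mount_is_up(path)
        if was_up and not is_up:
            logging.warning("disconnected: %s", path)
        elif is_up and not was_up:
            logging.info("reconnected: %s", path)
        was_up = is_up
```

Calling `monitor()` from a long-running process (for example a systemd service) polls every 15 seconds. One caveat: a hung FUSE mount can block `os.listdir` instead of raising, which this sketch does not guard against.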

With acd_cli it got so bad that I had something like 50 cron scripts doing various checks and trying to remount the drive.

For example, tailing the log to see whether the database got corrupted, then deleting it and doing a full sync.

The biggest issue I had was that once my library grew past 2,500 folders and 20,000 files, in most cases it could not sync in the first iteration, i.e. multiple syncs needed to be run. The problem was that when the DB got corrupted the drive was disconnected, and each sync took around 2 minutes before it stopped and then could not be resynced for another 5.

acd_cli worked perfectly at the beginning, when my library was below 10TB, then …

So if rclone ever implements an SQLite3 database for a local cache, it must be behind a parameter, i.e. optional.

Here is my test with the latest beta at the moment:

3304 directories, 28933 files, 32TB

14.12.2016/17:51: `tree -D -s -h` completed in 317 seconds

If I ran `tree -D -s -h` on the acd_cli mount it would take less than 20 seconds, as it would just read the database, but God forbid some upload and sync was being done during that time, as it was more than likely the DB would get corrupted.

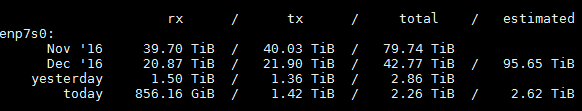

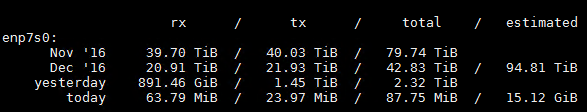

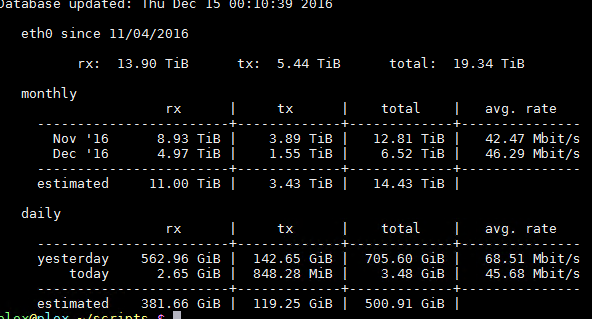

I'm quite a heavy user; these are my download/upload server stats:

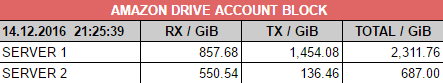

and this is just Plex:

Both are mounted with rclone, with 0 problems. acd_cli really dislikes having multiple mounts.

If you don't want to risk database corruption with it, the proper way is to have it mounted on only 1 machine.

When you are uploading something with acd_cli, you have to disconnect the drive, sync it, then reconnect it, i.e. it's useless for a Plex server or anything you want to have on all the time, but good if you just need fast file access, search, etc…

Thank you for the insight; my current usage is 5TB. Right now I use acd_cli with encfs and upload with rclone. I’ve definitely experienced database corruption. I figure it won’t hurt to try rclone mount. I'm currently mulling over whether it is feasible to re-encrypt my data to rclone crypt, or whether it would even be worth it.

Just to confirm, are you running rclone with crypt, or are you just using rclone to mount and then encfs to decrypt?

I've moved all my data to rclone crypt, but I found using acd_cli for mounting and then rclone to decrypt to be more stable than a straight rclone crypt mount.

No, I'm running rclone with encfs (I started with acd_cli, then used rclone for upload only, and now use it for mount + upload).

I don't plan to switch to crypt. I like encfs and it's part of the OS, so today it's rclone, tomorrow it's "bclone"; I don't want crypt to be part of my mount/upload setup.

If ncw did something amazing that would insanely lower the CPU cost of encryption, I would consider switching; for now I don't see any need, as the CPU impact and performance are the same.

To be honest, we could use some fast encryption that merges folder and file names into one name (so it looks less obvious) and does that as fast as possible, with no real security at all, and use that for media files only.

I think there are enough people with a lot of data encrypted using crypt now that it will not disappear from future projects. Also, even if a superior project were to come about somehow, you could still mount your crypt files the same way you use encfs. As mentioned by @ladude626, some people use acd_cli to mount their ACD, then mount the acd_cli mount with rclone crypt. Instead of pointing rclone crypt at a remote like acd:, you can point it at a local folder, such as /home/user/acd_climount/.

Yeah, it would make things much cleaner with crypt, but omfg, even thinking about redoing the whole 32TB library makes me sick, especially since Amazon has now banned me 2 days in a row … at close to the same time.

I will start tracking the amount of data, but the weird thing is that today I did not even have that much traffic.

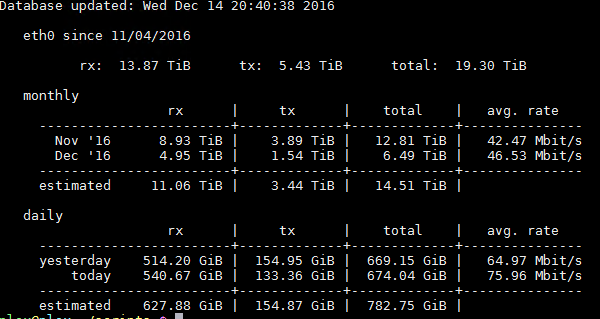

This is vnStat's overall traffic on the network card; server 1 is my download/upload server and server 2 is Plex.

Re "Folders will show correct modification times now": can you explain how this will improve performance?

@Seigele

Previously, remounting your ACD would result in Plex re-analyzing your files upon refreshing (re-downloading all of your media and re-generating metadata). Now there is consistency, and Plex shouldn't wastefully rescan your files.

@Ajki I have used rclone almost exclusively with Google Drive, so I can't say why you're being temporarily(?) banned, but I assume it's for heavy usage. Google Drive in particular takes issue with accessing too many folders in a day; you can get banned for 24 hours pretty easily if you excessively refresh your Plex library, for example. My understanding is that Amazon doesn't have any quota for this, but at least one post on a GitHub issue I created indicated that some ACD users have experienced similar effects.

It could be very useful for ACD as well, since if you hammer it with too many outbound requests you get a similar effect; in very rare cases, some users on Reddit have reported having their drives disabled.

One way or another, I recommend that you extract your movie files and place them all in a single directory:

Good:

`acd:Movies/file.2016.mkv`

Not so good:

`acd:Movies/file.2016/file.2016.mkv`

By doing this, you either lighten the load on Amazon's servers and get significantly faster Plex refresh times, or you just get significantly faster Plex refresh times and it turns out Amazon doesn't care.
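A minimal Python sketch of planning that flattening, assuming each movie's video file sits directly under `Movies/<folder>/`. It only computes (source, destination) pairs so you can review them before moving anything; the function name and the extension list are illustrative, and it refuses to plan two files onto the same destination.

```python
from pathlib import PurePosixPath

VIDEO_EXTENSIONS = {".mkv", ".mp4", ".avi"}  # illustrative; extend as needed


def plan_flatten(paths: list[str], root: str = "Movies") -> list[tuple[str, str]]:
    """Return (source, destination) pairs that lift each video file
    from root/<folder>/<file> up to root/<file>."""
    root_path = PurePosixPath(root)
    moves: list[tuple[str, str]] = []
    seen: set[str] = set()
    for raw in paths:
        path = PurePosixPath(raw)
        # Only touch video files sitting exactly one folder below root.
        if path.suffix.lower() not in VIDEO_EXTENSIONS:
            continue
        if path.parent.parent != root_path:
            continue
        dest = str(root_path / path.name)
        # Two folders containing identically named files would collide.
        if dest in seen:
            raise ValueError(f"two files would land on {dest}")
        seen.add(dest)
        moves.append((str(path), dest))
    return moves
```

Files already at `Movies/<file>` and non-video extras such as `.nfo` files are left out of the plan.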

So will this solve the problem of:

- Plex taking a ridiculously long time to refresh a library when new media is added, even though the mount hasn't been remounted

- Plex auto-updating on folder changes and running a partial scan?

@Seigele

This may allow partial scans to work with rclone (I'm not sure they did before?), which would reduce the need for you to do a full refresh of your library and speed up new content appearing, assuming it works.

If your files have already been analyzed and you haven’t remounted since they were last analyzed, you’re likely referring to the normal time it takes to refresh Plex using rclone. It does take quite a lot of time, because rclone currently sends an individual request for the contents of each folder. Comparing its speed to a local hard drive is like comparing a toy car and a Ferrari. We may see an option to cache the entire drive structure on mount in the future, which would make similar operations extremely fast by comparison.
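To put the per-folder cost in numbers: the `tree -D -s -h` run earlier in this thread took 317 seconds over 3304 directories, roughly 96 ms per directory. The Python sketch below contrasts the two approaches with a fake in-memory remote: every listing through `CountingRemote` costs one request, while `CachedTree` pays that cost once up front and then answers from memory. Both class names are hypothetical and this is not how rclone is implemented; a real cache would also need invalidation when files are uploaded, which is the hard part (compare the acd_cli database problems described above).

```python
from typing import Callable

Entries = list[tuple[str, bool]]  # (name, is_dir) pairs
Lister = Callable[[str], Entries]


class CountingRemote:
    """Stand-in for a remote: maps directory -> entries and counts requests."""

    def __init__(self, tree: dict[str, Entries]):
        self.tree = tree
        self.requests = 0

    def list_dir(self, path: str) -> Entries:
        self.requests += 1  # one round trip per directory listed
        return self.tree[path]


class CachedTree:
    """Walks the whole remote once on creation, then lists from memory."""

    def __init__(self, list_dir: Lister, root: str = ""):
        self.cache: dict[str, Entries] = {}
        self._fill(list_dir, root)

    def _fill(self, list_dir: Lister, path: str) -> None:
        entries = list_dir(path)
        self.cache[path] = entries
        for name, is_dir in entries:
            if is_dir:
                self._fill(list_dir, f"{path}/{name}" if path else name)

    def list_dir(self, path: str) -> Entries:
        return self.cache[path]  # no request to the remote
```

The trade-off is the one raised earlier in the thread: the cache makes repeated walks nearly free, but it goes stale as soon as something is uploaded behind its back.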

@Seigele test it and let us know. Personally, I did not have problems with Plex refreshing a library when new media is added.

@jpat0000 I'm hammering them a lot, since I'm also making a full backup of my Amazon Drive to Google Drive (at 50% at the moment … i.e. `rclone copy acd: gdrive:`).

P.S. Partial scans should not work, as I think they need to be triggered by change notifications from the OS, and the OS doesn't send those even for network drives (I may be wrong).

@Ajki Just curious what specifically makes the new version better. I’m assuming you aren’t using `--no-modtime` anymore?

I don't use `--no-modtime`, and the main thing is the actual folder date.

Since I regularly update to the next beta (on top of the Amazon bans), there are tons of remounts, and Plex has big problems if you lose drive access during a library update. The main problem is losing the video analysis info; those videos are not playable until Plex re-analyzes them. (Since yesterday's ban I made a crontab script that stops Plex when the drive is not accessible and starts it when it comes back.)
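A sketch of that kind of watchdog in Python, intended to be run once a minute from cron through a small wrapper that calls `check_once()`. Assumptions: the mount path `/mnt/acd` is hypothetical, and Plex is managed by systemd under the unit name `plexmediaserver` (the name used by common Linux packages; check yours). The decision logic lives in `plex_action` so it can be tested without touching the system.

```python
import os
import subprocess
from typing import Optional

MOUNT_POINT = "/mnt/acd"          # hypothetical mount path
PLEX_SERVICE = "plexmediaserver"  # assumed systemd unit name


def mount_is_up(path: str) -> bool:
    """Treat the mount as up if its root directory can be listed."""
    try:
        os.listdir(path)
        return True
    except OSError:
        return False


def plex_action(mount_up: bool, plex_running: bool) -> Optional[str]:
    """Stop Plex when the drive is gone, start it when it is back."""
    if not mount_up and plex_running:
        return "stop"
    if mount_up and not plex_running:
        return "start"
    return None  # state already matches the drive


def plex_is_running() -> bool:
    # `systemctl is-active --quiet` exits 0 only when the unit is active.
    result = subprocess.run(["systemctl", "is-active", "--quiet", PLEX_SERVICE])
    return result.returncode == 0


def check_once() -> None:
    action = plex_action(mount_is_up(MOUNT_POINT), plex_is_running())
    if action is not None:
        subprocess.run(["systemctl", action, PLEX_SERVICE], check=True)
```

Stopping Plex before a library update can run against a missing drive is what protects the analysis info; starting and stopping the service needs root, so the cron job would have to run with those rights.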

For some weird reason, Plex allows only the owner to manually trigger Analyze in the interface, so half of the videos were not playable because of it.

I even went so far as to make a bookmarklet so my friends can trigger Analyze as well.

I made a suggestion on the Plex forums that they should consider implementing automatic analysis when a user presses play (if the video has none), as it takes only 3 to 5 seconds.

P.S. Below is the letter from Amazon (I got 2 more bans after it), sent when I asked them to escalate and answer whether the drive is really unlimited, as it says 100TB, and why my account got banned.

@Ajki

Very interesting. While the email does say that “Unlimited” does mean “unlimited”, it does not say the limit is 100TB. The rep claims that you used hundreds of terabytes of their traffic in “a single 24 hour period”. I have to ask, just to cover all bases: is this remotely true? I don’t think it is. You would need to be transferring at a constant 10Gbit for 24 hours to use 100TB of traffic in a day, and at a constant 20Gbit minimum to reach the plural, "hundreds" of TB (200TB).
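For reference, the conversion behind those figures, in decimal units (8 bits per byte, 1 TB = 1000 GB) and assuming the line runs flat out for all 86,400 seconds of the day:

```latex
\begin{align*}
10\,\text{Gbit/s} \times 86\,400\,\text{s} &= 864\,000\,\text{Gbit} = 108\,000\,\text{GB} = 108\,\text{TB} \\
20\,\text{Gbit/s} \times 86\,400\,\text{s} &= 1\,728\,000\,\text{Gbit} = 216\,000\,\text{GB} = 216\,\text{TB}
\end{align*}
```

So 10Gbit around the clock gives slightly more than 100TB, which is where the round figures above come from.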

Out of curiosity, how much data was being stored? What’s the most data you have transferred in a 24 hour period?

It really sounds like they’re taking an issue with your bandwidth usage, not the amount you have stored.

Yeah, the hundreds of TB part is not true, and I use 1Gbit on both of my servers.

These are my current stats (download/upload machine):

Plex server

But it's interesting to see how much data Plex downloads, as that server runs just Plex and PlexPy, nothing else.

(The only uploads it does, every 8h, are subtitle files, which are negligible in size.)

In 2016 there are a lot of commercially available 1Gbit offerings; it’s not fair of Amazon to claim that you’re using your ACD for commercial (business) use. If you haven’t already, I would take the rep up on his offer and explain that they reached their judgement in error, and that you’re using ACD to store your media.

The most data you could possibly have used in a day is about 21TB, with both servers uploading at 1Gbit each for 24 hours. Your statistics show that you actually used an average of 1.6TB per day, which only requires an internet speed of about 150 megabits per second!
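The same arithmetic as a small Python helper, using decimal units and assuming a constant rate over the whole 24 hours (which real traffic, with its spikes, won't follow):

```python
SECONDS_PER_DAY = 86_400
BITS_PER_BYTE = 8


def tb_per_day(gbit_per_s: float) -> float:
    """Decimal terabytes moved in 24 h at a constant line rate."""
    return gbit_per_s * 1e9 * SECONDS_PER_DAY / BITS_PER_BYTE / 1e12


def mbit_per_s_needed(tb_per_day_avg: float) -> float:
    """Constant rate in Mbit/s needed to move a daily volume in 24 h."""
    return tb_per_day_avg * 1e12 * BITS_PER_BYTE / SECONDS_PER_DAY / 1e6
```

`tb_per_day(2)` gives 21.6 (both servers flat out at 1Gbit), and `mbit_per_s_needed(1.6)` gives about 148, matching the figures above.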

The problem is spiking: I usually download at 700 to 800Mbit, then upload at 300 to 600Mbit, but then all requests are done and the server just waits for some new movie to be released or TV show to air.

I would switch to Google Drive, but the possible 24h ban is just too much risk.

I have high hopes for Amazon, since a few days ago they added a syncing feature to their application, so there will be much more traffic than they have now, and hopefully they will raise the limits in their auto-ban scripts a bit.

P.S. In a few weeks I will have the full library on Google Drive too, and then I plan to update both at the same time and write a script that, if I'm banned on Amazon, changes unionfs to point to Google (and back once my drive is unblocked).
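The failover part of that plan could look something like the Python sketch below. Everything here is an assumption for illustration: the mount paths are hypothetical, the health flags would come from a check such as listing each rclone mount, and the branch string uses unionfs-fuse's `dir=RW:dir=RO` syntax. Actually remounting unionfs with the new branches when the answer changes is left out.

```python
from typing import Optional

LOCAL_BRANCH = "/mnt/local"  # hypothetical local write branch
CLOUD_MOUNTS = {"acd": "/mnt/acd", "gdrive": "/mnt/gdrive"}  # hypothetical rclone mounts
PREFERRED_ORDER = ["acd", "gdrive"]  # Amazon first, Google as the fallback


def pick_remote(healthy: dict[str, bool]) -> Optional[str]:
    """Return the first remote in preference order that is currently usable."""
    for name in PREFERRED_ORDER:
        if healthy.get(name, False):
            return name
    return None  # nothing usable; better to leave Plex stopped


def unionfs_branches(remote: str) -> str:
    """Branch spec in unionfs-fuse form: local writable, cloud read-only."""
    return f"{LOCAL_BRANCH}=RW:{CLOUD_MOUNTS[remote]}=RO"
```

Because Amazon stays first in `PREFERRED_ORDER`, the same check flips back to ACD automatically once the drive is unblocked.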
