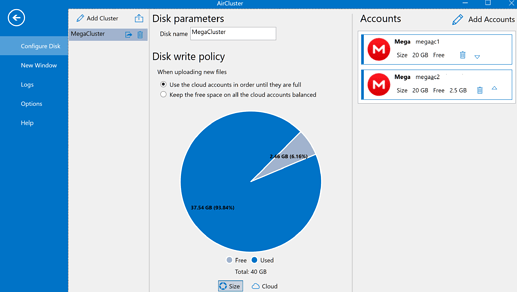

I am trying to make a union storage (Mega upstreams). My expectation is very simple. Suppose I have configured the union storage with two or three upstreams. I want the upstreams to be filled sequentially, so that rclone starts writing to the second upstream only once the first one is completely full. That way, I can keep adding new upstreams as they are needed. This mode makes more sense for incremental backups, because the data stays organized sequentially across the upstreams.

So I set the create policy to eplfs (leaving the action and search policies at their defaults). I expected rclone to keep filling a single upstream until its quota ran out and then start filling the second one. What actually happened is that once the first upstream's quota was full, rclone stopped uploading new files with a quota full error.
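For reference, a union remote set up as described above might look roughly like this in `rclone.conf`. This is a sketch, not the actual config from this post: the upstream names `mega1:`, `mega2:` and `mega3:` are hypothetical placeholders for separately configured Mega remotes, and only `create_policy` differs from its default.

```ini
# Hypothetical rclone.conf entry; mega1:, mega2:, mega3: are placeholder
# names for separately configured Mega remotes.
[dest]
type = union
upstreams = mega1: mega2: mega3:
# eplfs = "existing path, least free space": among upstreams where the
# path already exists, pick the one with the least free space.
create_policy = eplfs
# action_policy and search_policy are omitted, so they keep their
# defaults (epall and ff).
```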

Can you please suggest how it should be configured to achieve this?

One workaround I know of is to use the epmfs create policy, wait until rclone reports quota full on every upstream, stop rclone, add a new upstream, and then restart the copy. But this is not an optimal solution, as I have to halt the transfer in between.

What is your rclone version (output from `rclone version`)

Latest: v1.55.1

Which OS are you using and how many bits (e.g. Windows 7, 64 bit)

Windows 10

Which cloud storage system are you using? (e.g. Google Drive)

Union with Mega.nz upstreams

The command you were trying to run (e.g. `rclone copy /tmp remote:tmp`)

```
rclone copy src dest:/
```