What is the problem you are having with rclone?

I have a 10Gbit/s LAN connection to my server, and am struggling to get consistent 1GiB/s download speeds.
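
For context on how close that target is to the link's ceiling, here is a small unit-conversion sketch. It assumes the nominal 10 Gbit/s rate in decimal units and ignores all Ethernet, TCP and TLS overhead, so the real ceiling is somewhat lower. The function name is made up for this example.

```python
# Convert a nominal link rate in Gbit/s to binary byte units.
LINK_GBIT_PER_S = 10  # nominal 10G LAN rate from the post


def link_rate_mib_per_s(gbit_per_s: float) -> float:
    """Raw line rate in MiB/s, ignoring all protocol overhead."""
    bytes_per_s = gbit_per_s * 1e9 / 8
    return bytes_per_s / 2**20


line_rate = link_rate_mib_per_s(LINK_GBIT_PER_S)
target = 1024.0  # 1 GiB/s expressed in MiB/s

print(f"raw line rate: {line_rate:.0f} MiB/s ({line_rate / 1024:.2f} GiB/s)")
print(f"1 GiB/s is {target / line_rate:.0%} of the raw line rate")
```

This prints a raw line rate of 1192 MiB/s (1.16 GiB/s), so a sustained 1GiB/s is about 86% of what the wire can carry before any overhead.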

I have an rclone mount as my network storage solution. On default settings, I get a consistent ~600MiB/s download.

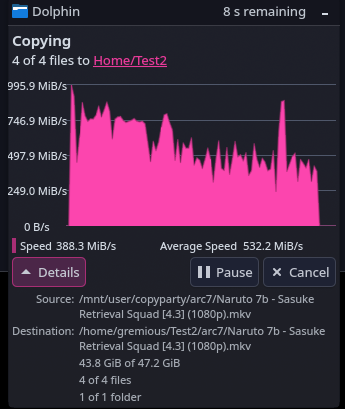

It should be possible, since rclone copy achieves it. After two hours of adjusting the config values more or less at random, I do sometimes peak at that speed, but the throughput either swings wildly between peaks and drops, or holds 1GiB/s for a solid 10 seconds before gradually falling off.

But I just cannot for the life of me find a consistent configuration that holds ~1GiB/s for the whole download.

My test data is a folder of anime movies (5 files, each between 6 and 13 GB in size).

Perhaps someone here already has something similar configured, and can share their settings?

Run the command 'rclone version' and share the full output of the command.

rclone v1.71.2

- os/version: endeavouros (64 bit)

- os/kernel: 6.12.55-1-lts (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.25.3 X:nodwarf5

- go/linking: dynamic

- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

webdav?

The command you were trying to run

rclone mount --vfs-cache-mode writes --dir-cache-time 5s --no-check-certificate --vfs-read-chunk-streams 8 --vfs-read-chunk-size 32M --vfs-read-chunk-size-limit 0 --transfers 12 -v gremy-copyparty-local: /mnt/user/copyparty
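
As a rough sanity check on the read flags in the command above, the arithmetic below assumes the behaviour described in the rclone VFS documentation as I understand it: with `--vfs-read-chunk-streams` above 0, rclone requests that many chunks of `--vfs-read-chunk-size` concurrently per open file and keeps the chunk size constant. `parse_size` is a hypothetical helper written for this sketch, not an rclone API, and the 1GiB/s figure is the target stated earlier in this post.

```python
MIB = 2**20


def parse_size(text: str) -> int:
    """Parse a size such as '32M' into bytes (binary multiples; only K/M/G handled)."""
    units = {"K": 2**10, "M": 2**20, "G": 2**30}
    return int(text[:-1]) * units[text[-1].upper()]


streams = 8                 # --vfs-read-chunk-streams
chunk = parse_size("32M")   # --vfs-read-chunk-size
target = 1024 * MIB         # 1 GiB/s goal, in bytes per second

in_flight = streams * chunk
print(f"data requested at once per open file: {in_flight // MIB} MiB")
print(f"chunk requests per second at target:  {target / chunk:.0f}")
print(f"throughput needed per stream:         {target / streams / MIB:.0f} MiB/s")
```

Under those assumptions, this command asks for 256 MiB at a time per open file, needs 32 chunk requests per second to sustain the target, and each of the 8 streams has to average 128 MiB/s. Changing either flag shifts the balance between per-request overhead and per-stream throughput, which may be one reason the results vary so much between settings.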

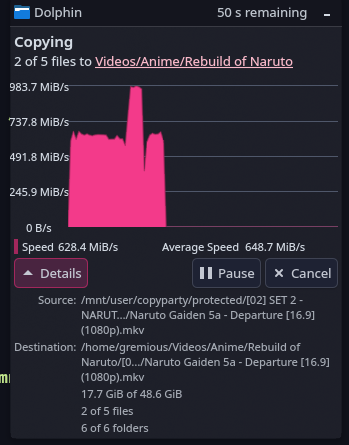

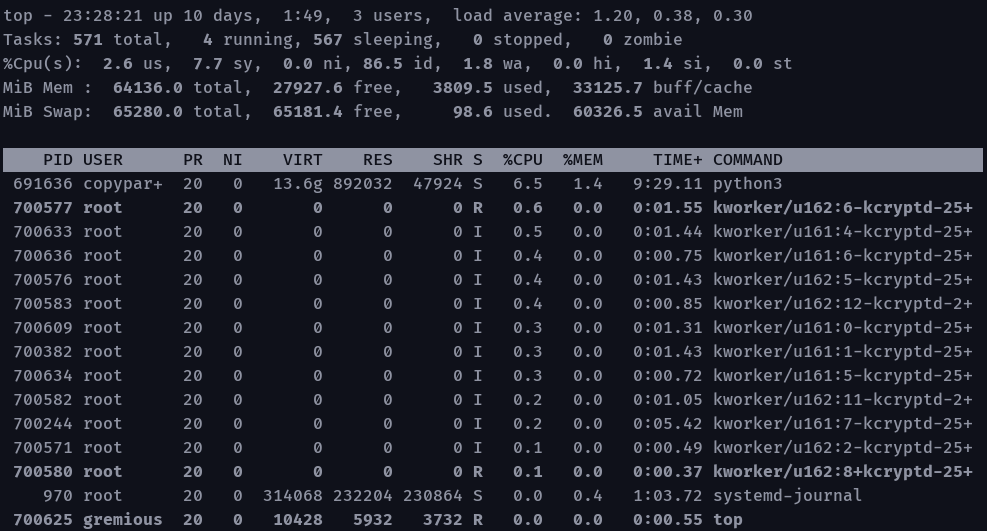

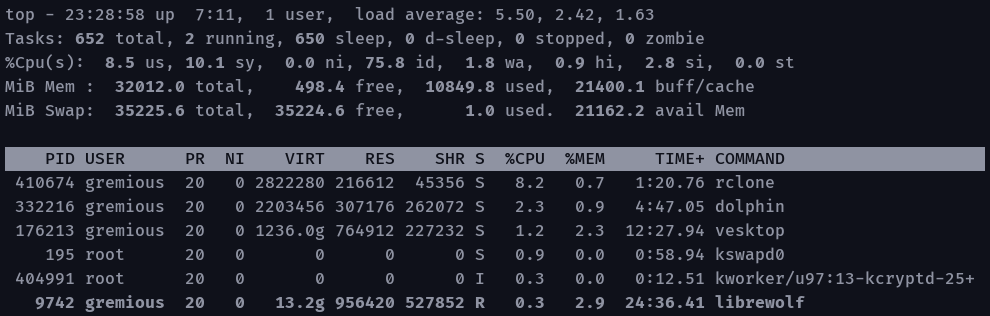

Then I go to /mnt/user/copyparty and I copy-paste the test folder with dolphin.

Output:

You can see that it briefly reached 10Gbit/s there, too.
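
To compare configurations more reproducibly than with a file-manager copy, a sequential read test along these lines could be used. It is only a sketch: it reads one file from the mount and discards the data, so the local destination disk is taken out of the picture, and it reports throughput per interval so that the peaks and gradual drops become visible. The function name, block size and interval are arbitrary choices for this example, not anything rclone prescribes.

```python
import sys
import time


def measure_read(path, block_size=4 * 2**20, interval=1.0):
    """Read `path` sequentially and discard the data.

    Returns (total_bytes, elapsed_seconds, samples), where samples holds
    the MiB/s measured over each window of roughly `interval` seconds.
    """
    samples = []
    total = 0
    window_bytes = 0
    start = window_start = time.monotonic()
    # buffering=0 avoids an extra Python-level buffer on top of the kernel's
    with open(path, "rb", buffering=0) as f:
        while True:
            block = f.read(block_size)
            if not block:
                break
            total += len(block)
            window_bytes += len(block)
            now = time.monotonic()
            if now - window_start >= interval:
                samples.append(window_bytes / 2**20 / (now - window_start))
                window_bytes = 0
                window_start = now
    elapsed = time.monotonic() - start
    return total, elapsed, samples


def main(path):
    total, elapsed, samples = measure_read(path)
    for i, rate in enumerate(samples, 1):
        print(f"window {i:4d}  {rate:8.1f} MiB/s")
    if elapsed > 0:
        print(f"average: {total / 2**20 / elapsed:.1f} MiB/s over {elapsed:.1f}s")


if __name__ == "__main__" and len(sys.argv) == 2:
    main(sys.argv[1])
```

Saved under a name of your choosing and run with the path of one large file below /mnt/user/copyparty as its only argument, it prints one line per window. A file that has not been read recently is the safer choice, since a repeated read may be served from a cache rather than the network.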

Please run 'rclone config redacted' and share the full output. If you get command not found, please make sure to update rclone.

[gremy-copyparty-local]

type = webdav

vendor = owncloud

pacer_min_sleep = 0.01ms

user = XXX

pass = XXX

url = https://192.168.1.130:3923/gremious

A log from the command that you were trying to run with the -vv flag

rclone-log.txt (6.9 MB)

Server-side nginx config if relevant:

upstream cpp_uds {

# there must be at least one unix-group which both

# nginx and copyparty is a member of; if that group is

# "www-data" then run copyparty with the following args:

# -i unix:770:www-data:/dev/shm/party.sock

server unix:/dev/shm/party.sock fail_timeout=1s;

keepalive 1;

}

server {

listen 192.168.1.130:3923 ssl;

listen 443 ssl;

# listen [::]:443 ssl;

server_name data.gremy.co.uk;

ssl_certificate /etc/letsencrypt/live/gremy.co.uk/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/gremy.co.uk/privkey.pem;

# default client_max_body_size (1M) blocks uploads larger than 256 MiB

client_max_body_size 1024M;

client_header_timeout 610m;

client_body_timeout 610m;

send_timeout 610m;

# uncomment the following line to reject non-cloudflare connections, ensuring client IPs cannot be spoofed:

#include /etc/nginx/cloudflare-only.conf;

location / {

proxy_pass http://cpp_uds;

proxy_redirect off;

# disable buffering (next 4 lines)

proxy_http_version 1.1;

client_max_body_size 0;

proxy_buffering off;

proxy_request_buffering off;

# improve download speed from 600 to 1500 MiB/s

proxy_buffers 32 8k;

proxy_buffer_size 16k;

proxy_busy_buffers_size 24k;

proxy_set_header Connection "Keep-Alive";

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

# NOTE: with cloudflare you want this X-Forwarded-For instead:

#proxy_set_header X-Forwarded-For $http_cf_connecting_ip;

}

}