You could probably bind-mount the host's hosts file into the container, or extend the rclone container image to include the script and a cron job.

I’m not 100% sure, but depending on the OS, the container should read the hosts file first regardless of the Docker network. Are you seeing different behaviour? What OS are you running Docker on?

OK, I overcame many of the usual obstacles and I'm now in a position to run the script.

I have problems, though:

ashlar@HTPC:~/gDrive_speeds$ sudo bash googleapis.sh

[sudo] password for ashlar:

Hosts backup file not found - backing up

Please wait, downloading the test file from 216.58.212.170... MiB/s

googleapis.sh: line 114: tmpapi/speedresults/: Is a directory

Please wait, downloading the test file from 142.250.179.138... MiB/s

googleapis.sh: line 114: tmpapi/speedresults/: Is a directory

rm: cannot remove 'tmpapi/testfile': No such file or directory

cat: tmpapi/speedresults/: Is a directory

The fastest IP is at a speed of | putting into hosts file

In the script you state that the "tmpapi/speedresults is a directory" error happens when the speed isn't expressed in MiB/s. But if I copy the dummy file manually, this is what I get in rclone.log:

2022/07/14 15:39:33 INFO : dummythicc: Copied (new)

2022/07/14 15:39:33 INFO :

Transferred: 143.051 MiB / 143.051 MiB, 100%, 133.081 MiB/s, ETA 0s

Transferred: 1 / 1, 100%

Elapsed time: 2.8s

Hmm... repeating the copy, I see this in the log:

2022/07/14 16:31:31 INFO : dummythicc: Copied (new)

2022/07/14 16:31:31 INFO :

Transferred: 143.051 MiB / 143.051 MiB, 100%, 0 B/s, ETA -

Transferred: 1 / 1, 100%

Elapsed time: 2.3s

No speed is reported at all. I'm using rclone 1.59.0.

Every time I try, I am reminded of why I hate Linux... apparently I'm too dumb for it.

Ah nah, I see what's going on. Thanks for the log example.

What's happening here is that your internet is way faster than mine and the file downloads within a second, so the log doesn't get a chance to compute an average speed, resulting in the not-quite-correct "0 B/s". I ran into this problem all the time while writing the script and testing with a 10KB file. That's how I discovered it has to be bigger.
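For anyone hitting the same thing, here is a minimal sketch (POSIX sh, so it also runs under bash) of a guard that pulls the average speed out of an rclone log and gives up when there is no MiB/s figure, as in the "0 B/s, ETA -" log above. It assumes the log contains rclone's "Transferred: ..." stats lines like the ones posted; `parse_speed` is a hypothetical helper, not something from rclone or googleapis.sh. If the script names each result file after the speed (as described later in this thread), an empty speed would make the target path `tmpapi/speedresults/` itself, which would explain the `Is a directory` error; a guard like this lets the caller skip that IP instead.

```shell
#!/bin/sh
# Sketch: extract rclone's average speed from a log, or fail loudly.
# Assumes stats lines like:
#   Transferred: 143.051 MiB / 143.051 MiB, 100%, 133.081 MiB/s, ETA 0s
# parse_speed is a hypothetical helper, not part of rclone or googleapis.sh.

parse_speed() {
  local log="$1" speed
  # Keep only "<number> MiB/s" matches, take the last one, drop the unit.
  # B/s or KiB/s readings don't match, so they are treated as failures too.
  speed="$(grep -oE '[0-9]+(\.[0-9]+)? MiB/s' "$log" | tail -n 1 | cut -d' ' -f1)"
  if [ -z "$speed" ]; then
    # e.g. "0 B/s, ETA -": the transfer finished too fast to be measured
    echo "parse_speed: no MiB/s figure in $log" >&2
    return 1
  fi
  printf '%s\n' "$speed"
}
```

Inside a loop over IPs, a caller could then write `speed="$(parse_speed rclone.log)" || continue` so an unmeasurable download never produces an empty file name.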

Use a bigger dummy file, maybe 500MB: big enough that it takes more than a few seconds to download, so rclone can log the speed correctly. Then I'm sure it will work fine.
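A quick way to make that bigger file, as a sketch: `make_dummy` is a hypothetical helper, 500 MiB simply follows the suggestion above, and `gdrive:speedtest` in the usage comment is a made-up remote path. Random bytes are used rather than zeros, in case anything along the way compresses the transfer and shortens it.

```shell
#!/bin/sh
# Sketch: create an incompressible test file of a given size in MiB.
# make_dummy is a hypothetical helper; adjust the size to your connection.

make_dummy() {
  local path="$1" size_mib="$2"
  # Read exactly size_mib MiB of random bytes into the target file
  head -c "$(( size_mib * 1024 * 1024 ))" /dev/urandom > "$path"
}

# Usage (not run here; "gdrive:speedtest" is a hypothetical remote path):
#   make_dummy dummythicc 500
#   rclone copy dummythicc gdrive:speedtest
```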

Don't worry, you're not too dumb, you worked it out. Rclone's logging is what's too dumb when it comes to small files; it just doesn't log them correctly.

Yes, that was the problem. Now the script runs.

BUT I am seeing strange behavior from it. While the script runs, I open the hosts file in a text editor. At any given moment during execution I would expect to find a single IP in it.

Instead, every newly tested IP is appended to the end of a list. Unless I am mistaken, this means only the first IP is actually being tested, over and over.

I also wonder whether you flush the DNS cache after every test and after modifying the hosts file during the script.

If I'm not mistaken, the command to use would be:

systemd-resolve --flush-caches

I mean... the script ended with:

The fastest IP is 216.58.214.10 at a speed of 95.37 | putting into hosts file

And yet the hosts file contains this:

142.250.184.74 www.googleapis.com

142.250.180.138 www.googleapis.com

142.250.180.170 www.googleapis.com

142.251.209.10 www.googleapis.com

142.250.184.42 www.googleapis.com

142.250.184.106 www.googleapis.com

142.251.209.42 www.googleapis.com

216.58.209.42 www.googleapis.com

142.250.179.138 www.googleapis.com

216.58.214.10 www.googleapis.com

216.58.208.106 www.googleapis.com

172.217.168.234 www.googleapis.com

142.251.36.10 www.googleapis.com

172.217.168.234 www.googleapis.com

142.251.39.106 www.googleapis.com

142.250.179.202 www.googleapis.com

142.251.36.42 www.googleapis.com

172.217.168.202 www.googleapis.com

142.250.179.170 www.googleapis.com

142.250.184.42 www.googleapis.com

142.250.184.106 www.googleapis.com

142.250.180.170 www.googleapis.com

142.250.180.138 www.googleapis.com

142.251.209.42 www.googleapis.com

216.58.209.42 www.googleapis.com

142.250.184.74 www.googleapis.com

142.251.209.10 www.googleapis.com

216.58.214.10 www.googleapis.com

As you can see, the fastest IP was tested in 10th position and then appended to the end of the file. With a hosts file like this, I expect the machine would actually end up using 142.250.184.74 all the time (the first entry in the list).

EDIT: I tested manually under Windows and some IPs were still slow, yet the script was reporting all of them as extremely fast, which leads me to believe it was only ever testing the first IP in the list.

Hey man, I'm in Canada and have the same issue! Some days my Plex managed users can stream UHD remuxes, other days not a chance.

OK, so this is either because you began this test with an already-modified hosts file containing all those IPs, or because your hosts file isn't being overwritten from the backup. I'm guessing the latter is because you entered an incorrect password for sudo (all the hosts-file commands require sudo) and the script simply carried on. But there might be another reason that will need a little searching on your end.

I'd recommend clearing those IPs out of your hosts file manually ("sudo nano /etc/hosts"), then deleting the hosts backup it made so you start fresh ("sudo rm /etc/hosts.backup"). Then give it another shot from that fresh beginning.
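The same clean-up can be scripted instead of done in nano. A minimal sketch, assuming every pinned line ends in www.googleapis.com like the listing above; `reset_googleapis_pins` is a hypothetical name, and the paths default to /etc/hosts and /etc/hosts.backup (so it needs sudo), but you can pass scratch copies to try it first.

```shell
#!/bin/sh
# Sketch: remove every www.googleapis.com pin and the stale backup.
# reset_googleapis_pins is a hypothetical helper; paths default to the real files.

reset_googleapis_pins() {
  local hosts="${1:-/etc/hosts}" backup="${2:-/etc/hosts.backup}"
  # Delete lines that map any address to www.googleapis.com;
  # -i.bak keeps the previous hosts file next to it as a safety net.
  sed -i.bak '/[[:space:]]www\.googleapis\.com[[:space:]]*$/d' "$hosts"
  # Drop the old backup so the next run recreates it from the cleaned file
  rm -f "$backup"
}

# Usage (real files, needs root):  sudo sh -c '. ./reset.sh; reset_googleapis_pins'
```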

To explain what's happening in the script a bit more:

-It first checks whether you have a hosts.backup file. If not, it creates one; if so, it restores from the backup to reset your hosts file to what the backup contains.

-Then, during the IP checking, it adds a single line to the hosts file, does the download, then restores your hosts.backup file (overwriting the hosts file back to fresh), loops back around and tries the next IP. Throughout, it assumes there's only a single IP in the hosts file; if you have more, it will appear to be lying to you because it's checking the wrong IP.

-It repeats until done, then restores the backup one last time, works out which IP was fastest, and edits your hosts file one more time with that IP.
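The steps above, sketched as plain shell functions. This is a minimal illustration of the same backup, pin-one-IP, measure, restore idea, not the actual googleapis.sh; `prepare_hosts`, `test_ips`, `pin_ip` and the `measure_speed` hook are hypothetical names, and `measure_speed` is a placeholder you'd replace with the rclone download plus log parsing. The file paths are parameters so it can be tried on scratch copies before pointing it at /etc/hosts with sudo.

```shell
#!/bin/sh
# Sketch of the backup -> pin one IP -> measure -> restore loop.
# All function names are hypothetical; this is not googleapis.sh itself.

HOST_NAME="www.googleapis.com"

# Placeholder hook: should download the test file and print a MiB/s number
measure_speed() {
  echo "measure_speed: not implemented, plug in your rclone download" >&2
  return 1
}

# Step 1: create the backup on the first run, otherwise reset hosts from it
prepare_hosts() {
  local hosts="$1" backup="$2"
  if [ -f "$backup" ]; then cp "$backup" "$hosts"; else cp "$hosts" "$backup"; fi
}

# Step 2: exactly one pinned line per test, restoring from the backup each time
test_ips() {
  local hosts="$1" backup="$2" results="$3" ip speed
  shift 3
  : > "$results"                                   # one "speed ip" line per test
  for ip in "$@"; do
    cp "$backup" "$hosts"                          # back to the clean copy
    printf '%s %s\n' "$ip" "$HOST_NAME" >> "$hosts"
    if speed="$(measure_speed "$ip")"; then
      printf '%s %s\n' "$speed" "$ip" >> "$results"
    fi
  done
  cp "$backup" "$hosts"                            # Step 3: final restore
}

# Step 4: write the winning IP as the only pinned line
pin_ip() {
  local hosts="$1" backup="$2" ip="$3"
  cp "$backup" "$hosts"
  printf '%s %s\n' "$ip" "$HOST_NAME" >> "$hosts"
}
```

For example, `prepare_hosts /etc/hosts /etc/hosts.backup` followed by `test_ips /etc/hosts /etc/hosts.backup results.txt 216.58.212.170 142.250.179.138` would leave one "speed ip" line per successful test in results.txt, with the hosts file back to its clean state.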

Again, sorry: my script has zero error detection and assumes everything will work perfectly. So in turn we have to treat it perfectly, with a perfect starting hosts file and a perfect password entry.

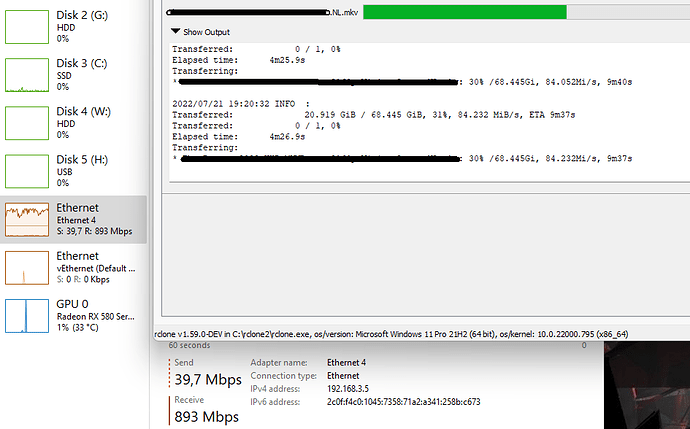

I ran the script as described above. On my 1 Gbps up/down connection I am seeing 72 MiB/s max, and the rclone mount now seems stable and working as before. Quick question: since I am in Canada, are there specific Google IPs for me? My understanding is that they have datacenters in Edmonton, Alberta.

Right, I think it's now working correctly: a single IP in the hosts file at any given time while the script runs, and one at the end, the fastest measured.

It all appears to be working just fine. There's no need to flush DNS: I tested it, and the hosts file content takes precedence. As soon as I change the IP in the hosts file, a ping to www.googleapis.com goes to that IP.

I'd still encourage anyone capable of translating the script into a Windows batch file to do so. The hurdles to getting it running under WSL are... tedious, let's say.

EDIT:

One thing you might want to check in your script: if more than one IP gives the same result, all of them are inserted into the hosts file, but only one with the correct syntax, like this:

142.251.209.42

216.58.212.170

142.250.184.42

142.250.180.170 www.googleapis.com

I don't think it causes an error in use, but might it be a problem for subsequent runs of the script? Maybe not, since hosts.backup should be an empty file and it's restored before testing... well, I thought it was worth pointing out anyway.

Hi guys,

I have a similar issue: I downloaded approx. 50 TB in a few weeks without any problems, then a few days ago transfers started at full speed and dropped to under a kilobyte per second!!!

I run rclone on my Unraid server at home. Does anyone here have a script I could try to fix this? I'm located in the EU (Belgium).

I've seen an improvement over the past few days in the US.

All endpoints consistently reporting full speeds.

That's great. I'm currently on vacation, so I haven't been able to do any further testing.

Yep. Both of our scripts do a quick check of which IPs Google hands out in your region and test those. Your list of IPs will be different from mine in Aus. Someone in this thread posted a bunch of IPs from around the world; feel free to add some from other countries to the whitelist file when using my script.

Heh, funny bug. My script works by saving each IP in its own file named after the speed, i.e. "82.11". When it's done, it sorts the files and finds the highest number, and the IP in that highest-numbered file is what it puts into the hosts file.

I've never encountered the same speed more than once in my tests and haven't accounted for it at all. Again, it's a shitty script with no error detection at all that just expects everything to run perfectly. Definitely needs to be babied a bit.
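One way to make the final pick tie-safe, as a sketch: instead of one file per speed, keep one "speed ip" line per test in a single results file and let awk return exactly one IP. `fastest_ip` is a hypothetical helper, not part of googleapis.sh. With a strict `>` comparison, the IP tested first wins a tie, so only one address can ever reach the hosts file.

```shell
#!/bin/sh
# Sketch: print the single fastest IP from a file of "speed ip" lines.
# fastest_ip is a hypothetical helper; ties go to the first line seen.

fastest_ip() {
  # "+ 0" forces numeric comparison; strict ">" keeps the earliest IP on a tie.
  # Exits non-zero when the file has no usable lines.
  awk 'NF >= 2 && (!found || $1 + 0 > best + 0) { best = $1; ip = $2; found = 1 }
       END { if (found) print ip; else exit 1 }' "$1"
}

# Usage: fastest_ip tmpapi/results.txt   -> prints one IP, never several
```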

Same! I just ran mine again without a blacklist file, so it checked them all, and there were no bad IPs. Maybe they finally fixed the issue and, as someone mentioned above, the new config is slowly being rolled out to the different regions.

Out of eight IPs today, only one is slow, which means the manual configuration is still necessary.

And today all IPs for my country/region are as fast as they used to be. I'll monitor on a daily basis for a while...

I wish to thank @Nebarik for the script and all the help he provided in making it work for my "special" case (WSL under Windows).

It turns out that some of the script variables don't like absolute paths. Once I got that sorted out, everything is working.

Check the DEFAULT_ variables for reference.

I've observed the same change in South America. I don't think the scripts are necessary anymore, but I'll keep running them just in case.

All my endpoints in Australia are back to permanent full speed as well, including the IP that was always slow. Seems Google have fixed their F UP.

I have unlimited download on my host machine, so I'll continue to run the script just in case.

Big thank you to those who put together and shared their scripts. Much appreciated.

The issue is still ongoing in South Africa. All the South African endpoints are giving ~200 Mbps, some even lower at 15 Mbps or so.

There is a server in Kenya, mba01s09-in-f10.1e100.net, only ~50 ms from Johannesburg, that is pretty fast: I can max out my 1 Gbps connection just by using the --drive-chunk-size 256M flag.

2a00:1450:401a:800::200a

172.217.170.170