What is the problem you are having with rclone?

I use rclone to backup (rclone sync) my docker volume for gitlab on azureblob storage. I also use docker to run rclone (using the official rclone/rclone image). I limit the rclone docker container memory to 500 mb.

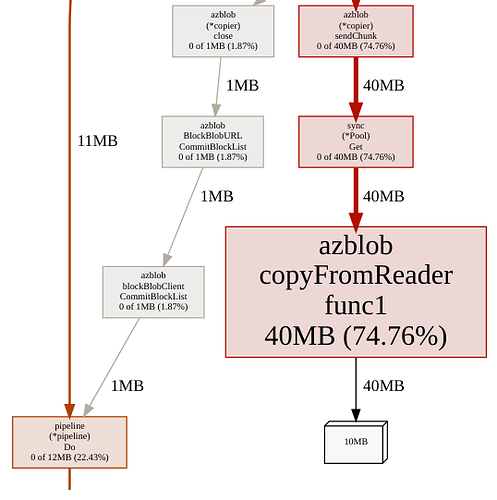

This was working fine with version 1.52.3, memory would be stable at around 360 MB for many hours. When I update to rclone 1.53.3 it runs out of memory after a few minutes (between 2 and 4 minutes).

What is your rclone version (output from rclone version)

1.53.3

Which OS you are using and how many bits (eg Windows 7, 64 bit)

official docker container on centos 7.9

Which cloud storage system are you using? (eg Google Drive)

azureblob

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone sync --bwlimit=10M --azureblob-chunk-size 10M --links --create-empty-src-dirs --retries 1 --ignore-errors --delete-during --progress -v --log-file=$LOG_FILE $AZUREBLOB_BACKUP_SOURCE $$AZUREBLOB_BACKUP_DESTINATION

The rclone config contents with secrets removed.

The config file is created just before the sync is run using the following command

rclone config create azureblob azureblob type azureblob account $AZUREBLOB_ACCOUNT key $AZUREBLOB_KEY config_is_local false config_refresh_token false

A log from the command with the -vv flag

I cannot see anything interesting so here are the first few lines.

"--delete-during" "--progress" "-vv" "--log-file=/logs/rclone.log" "/volumes" "azureblob:/backup-sc01-trt-test-2020-11-25"]

2020/11/25 14:00:47 DEBUG : Creating backend with remote "/volumes"

2020/11/25 14:00:47 DEBUG : Using config file from "/config/rclone/rclone.conf"

2020/11/25 14:00:47 INFO : Starting bandwidth limiter at 10MBytes/s

2020/11/25 14:00:47 DEBUG : Creating backend with remote "azureblob:/backup-sc01-trt-test-2020-11-25"

2020/11/25 14:00:47 DEBUG : fs cache: renaming cache item "azureblob:/backup-sc01-trt-test-2020-11-25" to be canonical "azureblob:backup-sc01-trt-test-2020-11-25"

2020/11/25 14:00:47 DEBUG : Waiting for deletions to finish

2020/11/25 14:00:48 INFO : rclone.log: Deleted

then a lot of skipping of existing files:

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005dd32ca50895b354.s: Size and modification time the same (differ by 0s, within tolerance 1ns)

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005dd32ca50895b354.s: Unchanged skipping

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005ddd0cdd1faa1634.s: Size and modification time the same (differ by 0s, within tolerance 1ns)

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005ddd0cdd1faa1634.s: Unchanged skipping

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005de8ea5e1aa73ff4.s: Size and modification time the same (differ by 0s, within tolerance 1ns)

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005de8ea5e1aa73ff4.s: Unchanged skipping

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005e24558e1412a624.s: Size and modification time the same (differ by 0s, within tolerance 1ns)

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005e24558e1412a624.s: Unchanged skipping

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005e51311320a1997c.u: Size and modification time the same (differ by 0s, within tolerance 1ns)

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005e51311320a1997c.u: Unchanged skipping

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005e52829327c69ff4.s: Size and modification time the same (differ by 0s, within tolerance 1ns)

2020/11/25 14:00:49 DEBUG : gitlab_logs/grafana/@400000005e52829327c69ff4.s: Unchanged skipping

Then it is sending files until it crashes, with the -vv options it only takes a few seconds before it crashes. These are the last lines in the log file:

2020/11/25 14:01:14 DEBUG : gitlab_data/prometheus/data/28/ef6d8f5a0c673d.db: MD5 = 63a1b8e03b7bc15706ba122e0502ee2c OK

2020/11/25 14:01:14 INFO : gitlab_data/prometheus/data/28/ef6d8f5a0c673d.db: Copied (new)

2020/11/25 14:01:14 DEBUG : gitlab_data/prometheus/data/28/f53ec267f2ed75.db: MD5 = 2e24681a8cb87f824176e62d0a457807 OK

2020/11/25 14:01:14 INFO : gitlab_data/prometheus/data/28/f53ec267f2ed75.db: Copied (new)

2020/11/25 14:01:14 DEBUG : gitlab_data/gitlab-rails/shared/artifacts/1f/f7/1ff7b91c979ea344298826c212adf745783ae8a14a3eded36d56ba1b0f4bdeee/2020_02_18/48429/67894/job.log: MD5 = 4969e00fd6e50230da06fd15d74f5e47 OK

2020/11/25 14:01:14 INFO : gitlab_data/gitlab-rails/shared/artifacts/1f/f7/1ff7b91c979ea344298826c212adf745783ae8a14a3eded36d56ba1b0f4bdeee/2020_02_18/48429/67894/job.log: Copied (new)

2020/11/25 14:01:14 DEBUG : gitlab_data/gitlab-rails/shared/artifacts/1f/f7/1ff7b91c979ea344298826c212adf745783ae8a14a3eded36d56ba1b0f4bdeee/2020_02_07/46941/65967/job.log: MD5 = 0e24d8e2ec637e239a9f22a4e3efc743 OK

2020/11/25 14:01:14 INFO : gitlab_data/gitlab-rails/shared/artifacts/1f/f7/1ff7b91c979ea344298826c212adf745783ae8a14a3eded36d56ba1b0f4bdeee/2020_02_07/46941/65967/job.log: Copied (new)

2020/11/25 14:01:14 DEBUG : gitlab_data/gitlab-rails/shared/lfs-objects/1a/ad/4150f7141b6a7c87633372aa1b4620f478b30b66a61584a5956a28ccd2f5: MD5 = 06bed324dbed803568bd76dd45b48ba6 OK

2020/11/25 14:01:14 INFO : gitlab_data/gitlab-rails/shared/lfs-objects/1a/ad/4150f7141b6a7c87633372aa1b4620f478b30b66a61584a5956a28ccd2f5: Copied (new)

2020/11/25 14:01:15 DEBUG : gitlab_data/gitlab-rails/shared/artifacts/21/6d/216da54b5931a6d37cca8e29953361fe02c680bbd8b482343f508e32e8e9cc3b/2019_09_04/21258/25787/job.log: MD5 = 4943fe6d52c48a6e61577f42203df9e5 OK

2020/11/25 14:01:15 INFO : gitlab_data/gitlab-rails/shared/artifacts/21/6d/216da54b5931a6d37cca8e29953361fe02c680bbd8b482343f508e32e8e9cc3b/2019_09_04/21258/25787/job.log: Copied (new)

2020/11/25 14:01:15 DEBUG : gitlab_data/gitlab-rails/shared/artifacts/1f/f7/1ff7b91c979ea344298826c212adf745783ae8a14a3eded36d56ba1b0f4bdeee/2020_02_13/47711/66998/job.log: MD5 = ac308e9bcf5672fd8af25c4c51c5b4ba OK

2020/11/25 14:01:15 INFO : gitlab_data/gitlab-rails/shared/artifacts/1f/f7/1ff7b91c979ea344298826c212adf745783ae8a14a3eded36d56ba1b0f4bdeee/2020_02_13/47711/66998/job.log: Copied (new)

2020/11/25 14:01:15 DEBUG : gitlab_data/gitlab-rails/shared/lfs-objects/1e/81/638215e29c6e68acd0f50884abe33cc26d3b2cb269f618c0597146d7771f: MD5 = f13b0028a60d2da4bea2d8a944944519 OK

2020/11/25 14:01:15 INFO : gitlab_data/gitlab-rails/shared/lfs-objects/1e/81/638215e29c6e68acd0f50884abe33cc26d3b2cb269f618c0597146d7771f: Copied (new)

2020/11/25 14:01:15 DEBUG : gitlab_data/gitlab-rails/shared/artifacts/20/9e/209eb5f20ab018ff6f1e42b98e5b57921aa4e2b7a7b683de32458b7153720a28/2019_01_17/2030/1950/job.log: MD5 = 6293774f59afd8ce2df3224579d26ae0 OK

2020/11/25 14:01:15 INFO : gitlab_data/gitlab-rails/shared/artifacts/20/9e/209eb5f20ab018ff6f1e42b98e5b57921aa4e2b7a7b683de32458b7153720a28/2019_01_17/2030/1950/job.log: Copied (new)