Hello

I have been scanning these lands all day, and although my findings have been very informative, nothing has cured my issue.

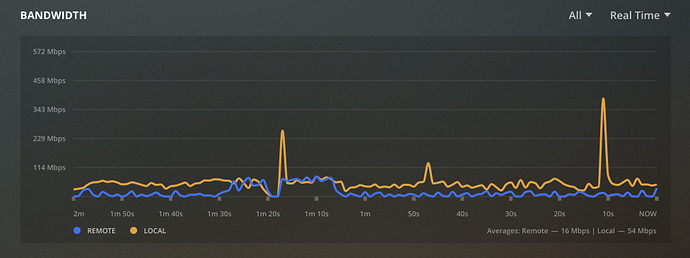

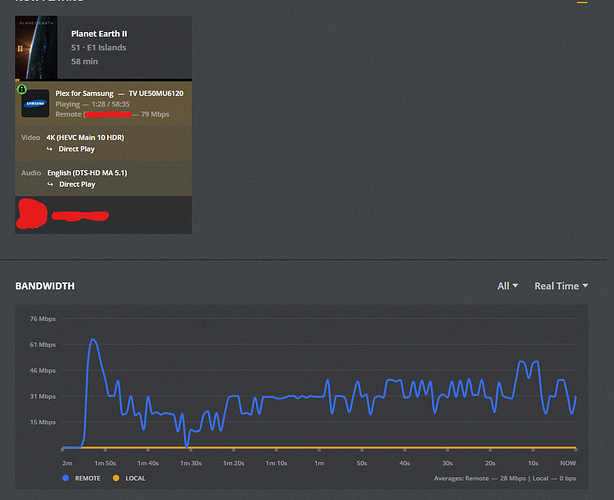

I have a VPS that I use for Plex, with Rclone mounting Google Drive on it. When I try to stream 4K (via Direct Play or Direct Stream), playback gets so far, then buffers forever until Plex calls it quits.

I have a feeling it could be Rclone not 'being man enough' for what Plex is trying to do, but in all honesty that is speculation.
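One way to check the hunch that Rclone cannot keep up is to take Plex out of the picture and time a plain sequential read of a 4K file straight off the mount, then compare the rate with the file's bitrate. A minimal Python sketch, assuming you pass in the path of a file on the mount; `measure_read_throughput` is a hypothetical helper, not part of Rclone or Plex:

```python
import time


def measure_read_throughput(path, max_bytes=256 * 1024 * 1024, block_size=1024 * 1024):
    """Read up to max_bytes sequentially from path.

    Returns (bytes_read, megabits_per_second). On an rclone mount, a cold read
    includes the time spent fetching data from the remote.
    """
    bytes_read = 0
    start = time.monotonic()
    with open(path, "rb") as f:
        while bytes_read < max_bytes:
            chunk = f.read(min(block_size, max_bytes - bytes_read))
            if not chunk:  # end of file reached before max_bytes
                break
            bytes_read += len(chunk)
    elapsed = time.monotonic() - start
    # Guard against a zero elapsed time on tiny or already-cached files.
    mbps = (bytes_read * 8 / 1_000_000) / elapsed if elapsed > 0 else float("inf")
    return bytes_read, mbps
```

For example, `measure_read_throughput("/mnt/gdrive/<some 4K file>")` (placeholder path) returns the bytes read and the rate in Mbit/s. If a first, uncached read comes in close to or below the file's bitrate, the bottleneck is likely upstream of Plex; a second run may be served from the cache and look faster.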

I was wondering if anyone could point me in the right direction?

Judging by other discussions, I assume you'd need the following, but of course, if you need anything else I'll sort it for you.

Server: Ubuntu 18.04 LTS - Hetzner

Rclone config:

```ini
[GDrive]
type = drive
client_id = Custom one i made
client_secret = Secret that follows
scope = drive
token = TOKEN
root_folder_id =
chunk_size = 64M

[GCache]
type = cache
remote = GDrive:Plex
plex_url = 127.0.0.1
chunk_size = 64M
info_age = 1d
chunk_total_size = 10G

[plexlib]
type = drive
client_id = Custom Client ID i made
client_secret = Secret that follows
scope = drive.readonly
token = "Token"
chunk_size = 32M
```

Now, "plexlib" was more me testing: I tried to avoid the cache entirely and see what happened if I mounted the drive directly as read-only and pointed my Plex library at it. My theory was that the cache was just another step in the chain slowing things down, but it did not go to plan; if anything, it made things worse.
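To get a rough feel for what the cache's `chunk_size = 64M` means during playback, a worked calculation helps: one chunk holds chunk_size × 8 bits, so it covers that many bits divided by the video bitrate in seconds of playback, and takes that many bits divided by the download rate to fetch. A minimal sketch, treating rclone's `M` as MiB; the 80 Mbit/s bitrate and 200 Mbit/s link are illustrative assumptions, not measurements from this server, and the sketch ignores the cache's parallel workers and per-request latency. (Per the rclone Drive backend docs, `chunk_size` on a drive remote is the upload chunk size, so the values on `GDrive` and `plexlib` should not affect reads.)

```python
MIB = 1024 * 1024


def chunk_timing(chunk_mib, video_mbps, link_mbps):
    """Return (seconds of video held in one chunk, seconds to download one chunk)."""
    chunk_bits = chunk_mib * MIB * 8
    playback_s = chunk_bits / (video_mbps * 1_000_000)
    download_s = chunk_bits / (link_mbps * 1_000_000)
    return playback_s, download_s


# Illustrative numbers only: 64 MiB chunk, ~80 Mbit/s 4K file, 200 Mbit/s link.
playback, download = chunk_timing(64, 80, 200)
print(f"one chunk = {playback:.1f}s of video, fetched in {download:.1f}s")
# prints "one chunk = 6.7s of video, fetched in 2.7s"
```

As long as the fetch time stays comfortably below the playback time per chunk, the cache should in principle keep ahead of the player; plugging in a measured link rate and the real file bitrate shows how much headroom there actually is.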

My systemd services:

For the plexlib one I was messing around with:

```ini
[Unit]
Description=RClone Service
After=plexdrive.target network-online.target
Wants=network-online.target

[Service]
Type=simple
# Trailing backslashes continue the ExecStart command across lines.
ExecStart=/usr/bin/rclone mount plexlib:Plex /mnt/plexlib \
  --allow-other \
  --dir-cache-time=24h \
  --poll-interval 15s \
  --buffer-size 64M \
  --log-level INFO \
  --log-file /var/log/plexlib.log
ExecStop=/usr/bin/sudo /usr/bin/fusermount -uz /mnt/plexlib
Restart=on-abort

[Install]
WantedBy=default.target
```

And for GCache:

```ini
[Unit]
Description=RClone Service
After=plexdrive.target network-online.target
Wants=network-online.target

[Service]
Type=simple
# Trailing backslashes continue the ExecStart command across lines.
ExecStart=/usr/bin/rclone mount GCache: /mnt/gdrive \
  --allow-other \
  --dir-cache-time=240h \
  --cache-workers=5 \
  --cache-db-purge \
  --umask 002 \
  --rc \
  --buffer-size 64M \
  --cache-tmp-upload-path /mnt/rclone_cache_upload \
  --cache-tmp-wait-time 60m \
  --log-level INFO \
  --log-file /var/log/rclonemount.log
ExecStop=/usr/bin/sudo /usr/bin/fusermount -uz /mnt/gdrive
Restart=on-abort

[Install]
WantedBy=default.target
```
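Since the GCache mount is started with `--rc`, its remote-control API can be queried while a 4K stream is buffering (for example with the cache backend's `cache/stats` command) to see what the cache is doing instead of guessing. A minimal sketch using only the Python standard library; it assumes the default rc address `http://localhost:5572` with no `--rc-addr` or `--rc-user` set, and `build_rc_request` and `call_rc` are hypothetical helper names:

```python
import json
import urllib.request

RC_ADDR = "http://localhost:5572"  # assumed default rc address; change if --rc-addr is set


def build_rc_request(command, params=None, addr=RC_ADDR):
    """Build a POST request for an rclone rc command such as "cache/stats"."""
    body = json.dumps(params or {}).encode("utf-8")
    return urllib.request.Request(
        f"{addr.rstrip('/')}/{command}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_rc(command, params=None, addr=RC_ADDR, timeout=10):
    """Send the command to a running `rclone mount --rc` and return the decoded JSON reply."""
    request = build_rc_request(command, params, addr)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

While the mount is running, `call_rc("cache/stats")` returns the decoded JSON reply; from a shell, `rclone rc cache/stats` should do the same.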

I did play around with the cache sizes, made dedicated cache and cache DB directories, tried --fast-list, increased the workers and a whole lot more, but I feel like I am more tryagnosing than diagnosing at this point.
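To move from tryagnosing to diagnosing, the logs both mounts already write (`/var/log/rclonemount.log` and `/var/log/plexlib.log`) can be checked for errors around the time playback stalls. A minimal sketch that tallies log levels and collects ERROR lines; it assumes rclone's default line layout of date, time, level and a colon (e.g. `2019/01/01 12:00:00 ERROR : ...`), so lines in any other format are simply skipped. `summarize_log` is a hypothetical helper:

```python
import re
from collections import Counter

# Assumed layout: "YYYY/MM/DD HH:MM:SS LEVEL : message" (rclone default, no custom --log-format).
LINE_RE = re.compile(r"^\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2} ([A-Z]+)\s*:")


def summarize_log(lines):
    """Count log levels and return (Counter of levels, list of ERROR lines)."""
    levels = Counter()
    errors = []
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue  # skip continuation lines or unrecognised formats
        level = match.group(1)
        levels[level] += 1
        if level == "ERROR":
            errors.append(line.rstrip("\n"))
    return levels, errors
```

For example, open `/var/log/rclonemount.log` and pass the file object to `summarize_log`. At `--log-level INFO` the detail is limited; switching a test mount to `--log-level DEBUG` logs far more about each read.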

If you need anything else, let me know.