What is the problem you are having with rclone?

Guys, I recently discovered this wonderful tool, Rclone. After a lot of reading I got excellent results and worked out several approaches for my cloud backups. The only problem I'm having is the following:

One of my scripts creates a complete image of my Windows Server. Once the image is created, Rclone uploads it to OneDrive.

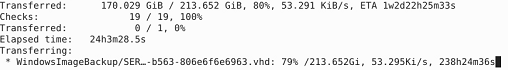

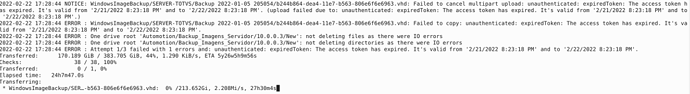

The system image is 213 GB. Initially the upload stopped with an error saying that the file had been modified, or something similar. After some research I found that OneDrive somehow changes file sizes, so Rclone does not synchronize the folder correctly, which causes the error and cancels the upload. I managed to solve that by adding --ignore-size to the rclone sync command. The problem I am facing now is this error:

"Failed to copy: unauthenticated: expiredToken: The access token has expired. It's valid from '2/20/2022 12:26:23 PM' and to '2/21/2022 12:26:23 PM'."

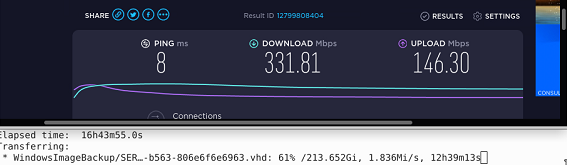

I'm not sure what is causing this. Judging by the error, the token is valid for exactly 24 hours, so it seems that because the file is so large the upload takes longer than that and the token expires before it finishes.
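To put a number on that, here is a rough back-of-the-envelope sketch of the sustained upload rate that would be needed to finish a 213 GB file inside the 24-hour window shown in the error. The function name `min_upload_rate` is just something I made up for illustration. It assumes decimal gigabytes, a constant transfer rate, and no retries or protocol overhead, so the real requirement is probably somewhat higher.

```python
# Rough estimate only. Assumptions (mine, not from rclone or OneDrive):
# decimal gigabytes, a constant upload rate, no retries or overhead.

def min_upload_rate(size_gb, window_hours):
    """Return the minimum sustained rate as (MB/s, Mbit/s)."""
    size_bytes = size_gb * 1000**3          # decimal GB -> bytes
    window_seconds = window_hours * 3600    # hours -> seconds
    bytes_per_second = size_bytes / window_seconds
    return bytes_per_second / 1e6, bytes_per_second * 8 / 1e6


if __name__ == "__main__":
    mb_s, mbit_s = min_upload_rate(213, 24)
    print(f"{mb_s:.2f} MB/s ({mbit_s:.1f} Mbit/s)")  # prints "2.47 MB/s (19.7 Mbit/s)"
```

So if my average upload speed stays below roughly 20 Mbit/s, a single 213 GB transfer cannot complete before the token's validity window closes, which would fit what I'm seeing.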

I'd like a way to upload this file without problems. Is there a flag I can add to the command so that the token doesn't expire? Failing that, is there some way to split the file into parts? I've seen that there is a "chunk" command, but I didn't understand it and couldn't find examples of how it would apply to my situation.

Run the command 'rclone version' and share the full output of the command.

rclone v1.57.0

- os/version: ubuntu 21.10 (64 bit)
- os/kernel: 5.13.0-28-generic (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.17.2
- go/linking: static
- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

OneDrive