What is the problem you are having with rclone?

Lack of progress / statistics reports for WebDAV / SharePoint, which has no backend commands.

Run the command 'rclone version' and share the full output of the command.

rclone --version

rclone v1.57.0-DEV

- os/version: rocky 8.10 (64 bit)

- os/kernel: 4.18.0-553.33.1.el8_10.66.x86_64 (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.16.12

- go/linking: dynamic

- go/tags: none

This is the latest version in the Rocky RHEL8.10 distro.

Which cloud storage system are you using? (eg Google Drive)

SharePoint

The command you were trying to run (eg rclone copy /tmp remote:tmp)

/usr/bin/rclone mount --daemon --log-file=/root/rclone.log --log-format=pid --vfs-cache-mode writes --vfs-write-wait 10s ${REMOTE}: ${URL}

Please run 'rclone config redacted' and share the full output. If you get command not found, please make sure to update rclone.

[$REMOTE]

type = webdav

url = https://${ORG}sharepoint.com/${URL_STEM}

vendor = sharepoint

user = $USER

pass = $PASS

A log from the command that you were trying to run with the -vv flag

No output written to log

I am trying to transfer 264 2GB files to SharePoint. Together they make up a set of Linux Logical Volume Manager (LVM) Physical Volume (PV) files, which comprise an LVM Volume Group (VG) that is 100% utilized by a single LVM Logical Volume (LV). The LV is to contain a backup of an approximately 500GB XFS filesystem, such that one Linux host can mount these remote PVs in RW mode and other Linux hosts can mount them in RO mode.

I started off by creating about 80 of these PVs: initializing each file to 2GiB (1<<31 bytes) of zeros, attaching it to a loop device with 'losetup(8)', and running 'pvcreate(8)' on it. I then added them all to a new Volume Group created with 'vgcreate(8)' (NOT using sharing / lvmlockd), created a new resizable Logical Volume using all of them, created an XFS filesystem on it, and dumped the first approximately 150GB of the directories to be backed up into it.
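The setup steps above can be sketched roughly as follows. This is an illustration under assumptions, not the exact commands that were run: `MOUNT_DIR`, the `pvN.img` file names, the explicit `/dev/loopN` numbering, and the `backupvg` / `backuplv` names are all placeholders. By default the script only prints the commands; it executes them only when `DRY_RUN=0` is set (as root).

```bash
#!/bin/sh
# Sketch only: MOUNT_DIR, pvN.img, /dev/loopN, backupvg and backuplv are
# placeholder names, not the ones actually used.
MOUNT_DIR=${MOUNT_DIR:-/mnt/sharepoint}   # hypothetical rclone mount point

# Print the command unless DRY_RUN=0, in which case run it.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi; }

setup_pvs() {
    count=${COUNT:-80}
    pvs=""
    i=1
    while [ "$i" -le "$count" ]; do
        f="$MOUNT_DIR/pv$i.img"
        dev="/dev/loop$i"                 # assumes these loop devices are free
        # 2048 x 1MiB = 2GiB (1<<31 bytes) of zeros per PV file
        run dd if=/dev/zero of="$f" bs=1M count=2048
        run losetup "$dev" "$f"           # attach the file to a loop device
        run pvcreate "$dev"               # initialize it as an LVM PV
        pvs="$pvs $dev"
        i=$((i + 1))
    done
    # Ordinary (non-shared) VG, one LV spanning all of it, then XFS on top.
    run vgcreate backupvg $pvs
    run lvcreate -l 100%FREE -n backuplv backupvg
    run mkfs.xfs /dev/backupvg/backuplv
}

setup_pvs
```

Running it with `COUNT=2` prints a short plan that can be checked by eye before anything is executed.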

That was about 6 days ago.

Since then, NO files have appeared in the SharePoint directory when viewed in a browser, nothing has been written to the log file, and I have no idea how long the transfer process will take.

I am trying to move this vast directory to cloud storage because the host has only about 160GB of free storage left. We don't need regular access to many of the files it contains, which date back over 10 years, but we don't want to delete them altogether.

I can see rclone is writing to the SharePoint server:

$ netstat -nautp | grep rclone

tcp 0 0 ${HOST_IP}:${LOCAL_PORT} 13.107.136.10:443 ESTABLISHED 137412/rclone

$ tcpdump -n -v -tt -l -i any src ${HOST_IP} and dst 13.107.136.10

...

1736936538.836001 IP (tos 0x0, ttl 64, id 22606, offset 0, flags [DF], proto TCP (6), length 2920)

${HOST_IP}.${TMP_PORT} > 13.107.136.10.https: Flags [.], cksum 0x7f6a (incorrect -> 0x678c), seq 1667695:1670575, ack 14855, win 858, length 2880

1736936538.837107 IP (tos 0x0, ttl 64, id 22608, offset 0, flags [DF], proto TCP (6), length 2920)

${HOST_IP}.${TMP_PORT} > 13.107.136.10.https: Flags [.], cksum 0x7f6a (incorrect -> 0xfe9f), seq 1670575:1673455, ack 14855, win 858, length 2880

1736936538.838058 IP (tos 0x0, ttl 64, id 22610, offset 0, flags [DF], proto TCP (6), length 1480)

${HOST_IP}.${TMP_PORT} > 13.107.136.10.https: Flags [.], cksum 0x79ca (incorrect -> 0x79f9), seq 1673455:1674895, ack 14855, win 858, length 1440

On Sunday, I saw that 'netstat -nautp' reported NO open sockets for the rclone

daemon, so I stopped and restarted it, and transfers have since resumed.

So far, not a single 2GB PV file has appeared in SharePoint since I started last Thursday (today is Wednesday).

My plan is this: once the transfer has completed, and I can read back and checksum the remote files, and the checksums of all backed-up files match, I can remove the ~/.cache/rclone/vfs/${REMOTE}/* files and the original backed-up files, freeing space. I can then go on to create the next 150GB of PV files and dump more of the filesystem. Since we are using '--vfs-cache-mode writes', only the newly written files should be cached, so we won't have to hold a complete replica of all the PV files in the local cache, for which we have insufficient storage.

It would be great to get some indication of the progress of the copy from the local cache files to the remote SharePoint directory, but I can't find any rclone command to do this beyond the backend commands, which do not exist for SharePoint.

Please, could a future version of rclone have such a command, which, for every file partially transferred, would print out the number of bytes transferred and the number still to transfer? This is a major inadequacy of rclone that impacts its overall usefulness, IMHO. If I had known there were no status or progress report commands or log messages, I would have tried to use a different tool.

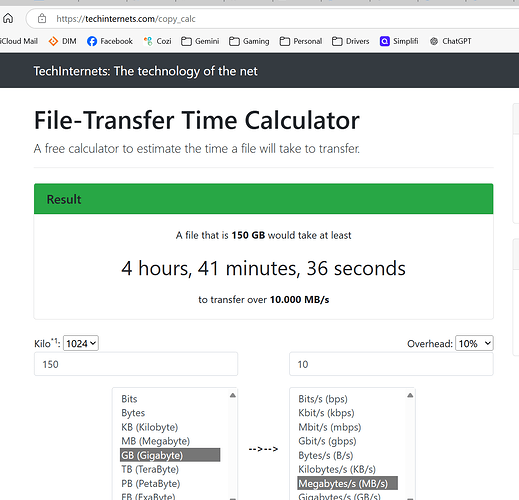

Could anyone hazard a guess as to how long a transfer of 150GB should take, given our 10MB/s internet connection?

(This is obviously a lot longer than the (((150 x 10^9) / (10 x 10^6)) / 60) == 250 minutes theoretical minimum; it has taken approximately 6 days now.)
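For reference, the arithmetic above can be checked in the shell. This is only a sketch using the figures from this post (150GB to send, a 10MB/s link, roughly 6 days elapsed); the variable names are made up for illustration.

```bash
#!/bin/sh
# Figures from the post; variable names are illustrative only.
total_bytes=150000000000        # 150 x 10^9 bytes
link_bytes_per_sec=10000000     # 10 x 10^6 bytes per second

min_seconds=$(( total_bytes / link_bytes_per_sec ))
min_minutes=$(( min_seconds / 60 ))
elapsed_minutes=$(( 6 * 24 * 60 ))             # about 6 days so far
ratio=$(( elapsed_minutes / min_minutes ))     # integer division

echo "theoretical minimum: ${min_minutes} minutes"
echo "elapsed so far: ${elapsed_minutes} minutes (about ${ratio}x the minimum)"
```

So even at the full line rate the transfer needs a little over four hours, and the elapsed time is already more than thirty times that.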

Any suggestions on how to get some idea of progress from rclone or SharePoint

would be most gratefully received.

Please consider adding a progress monitoring facility / commands / options to

rclone mount!