Yes, with `--cache-db-purge` the cache is rebuilt each time the service is started. I find this helpful for keeping things clean and current in case anything gets out of whack. In a perfect world it wouldn't be needed, but I don't mind the few extra API hits a rebuild costs, as I'm well under those limits.

/dev/shm is a RAM-backed tmpfs in Linux, and by default its size is capped at half of your system memory. So in my case:

```
cat /proc/meminfo
MemTotal:       32836332 kB
```

and my /dev/shm:

```
df -h /dev/shm
Filesystem      Size  Used Avail Use% Mounted on
tmpfs            16G  2.6G   14G  17% /dev/shm
```
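If you want to check the arithmetic on your own box, here is a minimal sketch that reads MemTotal and halves it. It assumes /dev/shm is mounted with the usual default (no explicit `size=` option); your distro or fstab may override that. The function name `shm_default_kb` is just a hypothetical helper, not a standard tool.

```shell
#!/bin/bash
# Hypothetical helper: print half of MemTotal in kB, which is the usual
# default size cap of the /dev/shm tmpfs when no size= option is given.
shm_default_kb() {
  # Optional argument lets you point at a different meminfo-style file.
  local meminfo="${1:-/proc/meminfo}"
  awk '/^MemTotal:/ { print int($2 / 2) }' "$meminfo"
}
```

Run `shm_default_kb` and compare it with the Size column of `df -k /dev/shm`. With my 32836332 kB, half is 16418166 kB, roughly 15.7 GiB, which `df -h` rounds up to the 16G shown above.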

It's kind of a cheat, but it works well. It also gets wiped on every reboot, so my setup effectively mirrors a memory-only configuration. I don't want any persistent storage of the chunks, because the people using my server rarely watch the same stuff, and bandwidth caps are not an issue for me.

When using the cache, you do want a 0M buffer size (`--buffer-size 0M`), as the cache handles all of that and a bigger buffer only 'double caches' the data.
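Putting the cache pieces together, a mount along these lines is a sketch of the setup described so far: cache purged on start, chunks in /dev/shm, and a 0M buffer. The remote name `gcache:` and the chunk directory `/dev/shm/rclone-chunks` are assumptions; substitute your own. `RCLONE_BIN` is a hypothetical override so you can preview the command with `echo`.

```shell
#!/bin/bash
# Sketch only: "gcache:" (a cache remote in rclone.conf) and the chunk
# directory under /dev/shm are assumptions, not taken from a real config.
set -euo pipefail

mount_gd() {
  # --allow-other lets other users (e.g. Plex) read the mount; non-root
  # use needs user_allow_other in /etc/fuse.conf.
  # --buffer-size 0M avoids double caching on top of the cache backend.
  # --cache-db-purge clears the cached data for the remote on every start.
  # --cache-workers 4 is the default; 5-8 is worth trying with Plex off.
  "${RCLONE_BIN:-rclone}" mount gcache: /GD \
    --allow-other \
    --buffer-size 0M \
    --cache-db-purge \
    --cache-chunk-path /dev/shm/rclone-chunks \
    --cache-workers 4
}
```

`RCLONE_BIN=echo mount_gd` prints the full command line instead of mounting, which is handy when tweaking flags.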

For Plex transcoding, you ideally want fast storage as well. I do all my Plex transcoding on my local SSD, so that's not a bottleneck. Some people transcode to /dev/shm, but once I got into bigger files, that really wasn't a good fit for me.

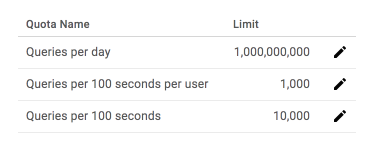

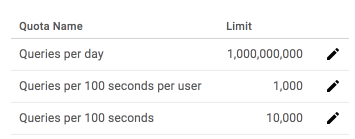

For #2, my understanding is that every other operation gets 1 cache worker, but with the Plex integration, when it detects a file being played, it bumps that up to the configured `--cache-workers` value (default 4). You can always turn off the Plex integration, bump the cache workers up to something bigger like 5-8, and see if that helps speed up the uploads. That goes back to my earlier point: a few more API hits most likely won't matter for you, as the daily quota is huge.

In 30 days of all this testing, I personally haven't hit 1 million queries in total, let alone the actual single-day quota.
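For scale, spreading that 30-day total evenly gives an upper bound on my average daily usage (plain arithmetic on the numbers above, not a separate measurement):

$$\frac{1{,}000{,}000\ \text{queries}}{30\ \text{days}} \approx 33{,}333\ \text{queries/day}$$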

I now use mergerfs so that anything written to /gmedia always lands locally first, in /data/local.

My /GD is my rclone mount, and my mergerfs command is:

```
felix@gemini:~/scripts$ cat mergerfs_GD
#!/bin/bash

/usr/bin/mergerfs -o defaults,sync_read,allow_other,category.action=all,category.create=ff /data/local:/GD /gmedia
```
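With `category.create=ff` (first found), new files are created on the first branch listed that can take them, which is /data/local. To see which branch an existing /gmedia path currently comes from, here is a hypothetical helper (`which_branch` is my own name, not a mergerfs command) that mimics a first-found lookup over the branches in the same order as the command. It only approximates what mergerfs does internally.

```shell
#!/bin/bash
# Hypothetical helper: print the first branch that contains a relative path,
# checking branches in mergerfs order (default: /data/local, then /GD).
which_branch() {
  local rel="$1"; shift
  local branches=("$@")
  if [ "${#branches[@]}" -eq 0 ]; then
    branches=(/data/local /GD)
  fi
  local b
  for b in "${branches[@]}"; do
    if [ -e "$b/$rel" ]; then
      echo "$b"
      return 0
    fi
  done
  return 1   # the path exists on no branch
}
```

For example, `which_branch Movies/foo.mkv` right after a download should print /data/local, and once the file has been moved and picked up by the remote it should print /GD, while /gmedia/Movies/foo.mkv stays the same path throughout.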

I run a standard rclone move on /data/local nightly to clean up anything written locally. rclone's standard 1-minute cache polling picks the files up, and since they stay at the same path, nothing changes for Plex/Radarr/Sonarr, as everything points to /gmedia.
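A minimal sketch of that nightly move, assuming the upload target is a Drive remote named `gdrive:` (a placeholder; use the remote behind your /GD mount). `--min-age 15m` and `--delete-empty-src-dirs` are my additions, not part of the setup above: the first skips files that may still be being written, the second tidies up emptied folders. `RCLONE_BIN` is again a hypothetical override for previewing.

```shell
#!/bin/bash
# Sketch only: "gdrive:" and both extra flags are assumptions.
set -euo pipefail

nightly_move() {
  local src="${1:-/data/local}"
  local dest="${2:-gdrive:}"
  # Move (upload, then delete locally) everything older than 15 minutes.
  "${RCLONE_BIN:-rclone}" move "$src" "$dest" \
    --min-age 15m \
    --delete-empty-src-dirs \
    -v
}
```

Call `nightly_move` from a script scheduled in cron (for example a `0 3 * * *` entry), or run `RCLONE_BIN=echo nightly_move` to see the exact command without moving anything.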