My GDrive configuration is as follows:

Google Drive -> Cached Encrypted Remote -> Decrypted Remote that is mounted on my OS

[GD]

type = drive

client_id = client_id_key

client_secret = client_secret

token = {"access_token":"access_token_key","expiry":"2018-03-30T09:15:29.049443547-04:00"}

[gcache]

type = cache

remote = GD:media

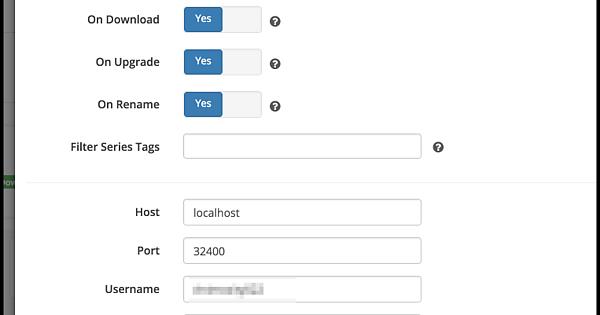

plex_url = http://127.0.0.1:32400

plex_username = Username

plex_password = Password

plex_token = Token

chunk_total_size = 32G

[gmedia]

type = crypt

remote = gcache:

filename_encryption = standard

password = cryptpassword

password2 = cryptpassword

directory_name_encryption = true
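
To make the layering easier to see, here is a minimal sketch that follows each section's `remote =` key back to the underlying backend. It uses a trimmed copy of the config above (credentials and Plex options left out), and `remote_chain` is a hypothetical helper name, not part of rclone.

```python
import configparser

# Trimmed copy of the remotes above; credentials and Plex options omitted.
RCLONE_CONF = """
[GD]
type = drive

[gcache]
type = cache
remote = GD:media

[gmedia]
type = crypt
remote = gcache:
"""


def remote_chain(conf_text, start):
    """Follow `remote =` from `start` down to the backend that wraps nothing."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.read_string(conf_text)
    chain = []
    name = start
    while name in parser:
        section = parser[name]
        chain.append((name, section["type"]))
        parent = section.get("remote")
        if parent is None:
            break
        # "GD:media" -> remote name "GD"; the text after the colon is a path.
        name = parent.split(":", 1)[0]
    return chain


if __name__ == "__main__":
    for name, backend in remote_chain(RCLONE_CONF, "gmedia"):
        print(f"{name} ({backend})")
```

Reading the result from the mounted remote downward gives gmedia (crypt), then gcache (cache), then GD (drive), which is the same chain as described at the top, just in the opposite direction.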

I use /gmedia for my mountpoint, and it contains one directory for Movies and one for TV shows.

[felix@gemini gmedia]$ ls -al

total 0

drwxrwxr-x 1 felix felix 0 Apr 19 2017 Movies

drwxrwxr-x 1 felix felix 0 Apr 18 2017 TV

My main user on my box is 'felix', and my 'plex' user is a member of the 'felix' group, so the plex user can access all my files via the group permissions that I have set on the rclone mount.
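
The `drwxrwxr-x` entries in the listing above are consistent with the `--umask 002` option used in the systemd unit below. A minimal sketch of that arithmetic, assuming rclone's default base permissions of 0777 for directories and 0666 for files before the umask is applied (`mode_string` is a hypothetical helper name):

```python
import stat


def mode_string(base_mode, umask, is_dir=True):
    """Apply a umask to a base mode and render it the way `ls -l` would."""
    perms = base_mode & ~umask
    file_type = stat.S_IFDIR if is_dir else stat.S_IFREG
    return stat.filemode(file_type | perms)


if __name__ == "__main__":
    # Assumed base modes: 0777 for directories, 0666 for files.
    print(mode_string(0o777, 0o002))                # drwxrwxr-x
    print(mode_string(0o666, 0o002, is_dir=False))  # -rw-rw-r--
```

The group write bit survives the mask, which is what lets a user in the 'felix' group read and write through the mount.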

My systemd startup:

[felix@gemini system]$ cat rclone.service

[Unit]

Description=RClone Service

AssertPathIsDirectory=/home/felix

After=plexdrive.target network-online.target

Wants=network-online.target

[Service]

Type=simple

ExecStart=/usr/bin/rclone mount gmedia: /gmedia \

--allow-other \

--dir-cache-time=160h \

--cache-chunk-size=10M \

--cache-info-age=168h \

--cache-workers=5 \

--buffer-size=500M \

--attr-timeout=1s \

--syslog \

--umask 002 \

--rc \

--cache-tmp-upload-path /data/rclone_upload \

--cache-tmp-wait-time 60m \

--log-level INFO

ExecStop=/usr/bin/sudo /usr/bin/fusermount -uz /gmedia

Restart=on-abort

User=felix

Group=felix

[Install]

WantedBy=default.target

I have 32GB of memory on my system and not much else running, so a 500M buffer per open file hasn't caused any problems for me. At most I have 8 streams going at one time.
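
As a rough sanity check on those numbers, this sketch estimates the worst-case buffer memory if every stream fills its buffer at the same time. It assumes one open file per stream and treats 32GB as 32 GiB; a library scan could briefly open more files than that, so treat it as an estimate only. `worst_case_buffer_gib` is a hypothetical helper name.

```python
def worst_case_buffer_gib(open_files, buffer_mib):
    """Upper bound on buffer memory if every open file fills its buffer."""
    return open_files * buffer_mib / 1024


if __name__ == "__main__":
    peak = worst_case_buffer_gib(open_files=8, buffer_mib=500)
    share = peak / 32  # fraction of 32 GiB of RAM
    print(f"{peak:.1f} GiB peak, {share:.0%} of RAM")  # 3.9 GiB peak, 12% of RAM
```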

I have a local disk that temporarily holds new items for an hour before they are uploaded to my GD; /data/rclone_upload is that temporary area. Files move based on time, not size, so it's always good to keep an eye on your free space.
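
Because the upload is triggered by time rather than size, the temporary area has to hold at least whatever arrives during the wait window. A minimal sketch of that check follows; the 40 GB/hour ingest rate is a made-up figure for illustration, and both function names are hypothetical. It is a lower bound, since files also stay on disk while they are uploading.

```python
import os
import shutil


def pending_upload_gb(ingest_gb_per_hour, wait_minutes):
    """Data that accumulates locally before the first file becomes eligible for upload."""
    return ingest_gb_per_hour * wait_minutes / 60


def has_headroom(path, needed_gb):
    """True if the filesystem holding `path` has at least `needed_gb` free."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= needed_gb


if __name__ == "__main__":
    # Assumed ingest rate; 60 minutes matches --cache-tmp-wait-time 60m.
    needed = pending_upload_gb(ingest_gb_per_hour=40, wait_minutes=60)
    print(f"need at least {needed:.0f} GB free")
    upload_dir = "/data/rclone_upload"
    if os.path.isdir(upload_dir):
        print("enough space:", has_headroom(upload_dir, needed))
```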

My Sonarr and Radarr point directly to /gmedia/TV and /gmedia/Movies respectively and my plex libraries point to the same for the TV and Movie libraries.

With no cache at all, the first scan takes me about 10-15 minutes as it rolls through all the directories and stores the items in the cache.

I have ~22k items in my library and ~35TB in my GD.

[felix@gemini system]$ plex-library-stats

30.03.2018 08:31:19 PLEX LIBRARY STATS

Media items in Libraries

Library = TV

Items = 18828

Library = Movies

Items = 1734

Library = Exercise

Items = 279

Library = MMA

Items = 53

22015 files in library

0 files missing analyzation info

0 media_parts marked as deleted

0 metadata_items marked as deleted

0 directories marked as deleted

20898 files missing deep analyzation info.

My overall goal is simplicity, so I removed any other programs that didn't add value for me. I let both Radarr and Sonarr scan as they normally would if things were local, since all the items are cached anyway. Sonarr only scans a TV folder when it adds something. Radarr is a little more annoying, as the entire Movie folder is scanned once something is added to Plex, but again, it's all cached, so who cares if it takes a few seconds.

[felix@gemini gmedia]$ time ls -alR | wc -l

29238

real 0m2.303s

user 0m0.051s

sys 0m0.124s

[felix@gemini gmedia]$ time ls -alR | wc -l

29238

real 0m0.692s

user 0m0.060s

sys 0m0.131s

I can live with those times to hit every file while generating no API hits. I keep my cache chunk storage on SSD. I have Verizon Gigabit FiOS, so upload/download speeds aren't an issue.
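
If you want to repeat that measurement from a script, here is a rough sketch that stats every entry under a directory and times the walk, so that running it twice shows the cold versus warm difference. The count will not match `ls -alR | wc -l` exactly, because `ls` also prints headers, totals, and the `.` and `..` entries. Function names are hypothetical.

```python
import os
import time


def count_entries(root):
    """Stat every file and directory under `root` and return how many were seen."""
    total = 0
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            os.lstat(os.path.join(dirpath, name))
            total += 1
    return total


def timed_walk(root):
    """Return (entry_count, elapsed_seconds) for one full walk of `root`."""
    start = time.perf_counter()
    entries = count_entries(root)
    return entries, time.perf_counter() - start


if __name__ == "__main__":
    for attempt in ("first", "second"):
        entries, seconds = timed_walk("/gmedia")
        print(f"{attempt} pass: {entries} entries in {seconds:.3f}s")
```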