What is the problem you are having with rclone?

I cannot upload a large file to B2 over a fast 2.5 Gbit connection.

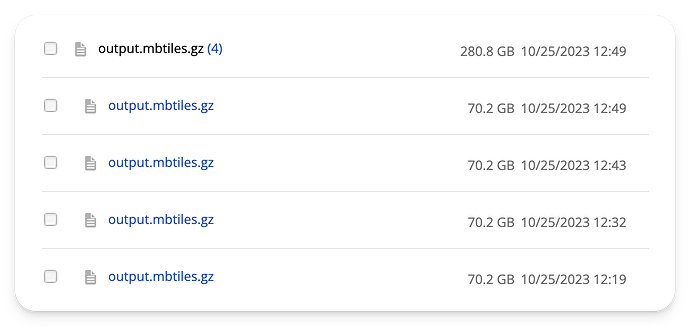

The upload behaves very strangely. The file is 66 GB, but the transfer first runs to 66 GB with some errors, then the reported total grows to 69 GB, then 134 GB, then 199 GB, and finally the transfer terminates with an error. There are errors in between as well.
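To put the totals above in perspective, here is a quick sketch that compares each reported total with the 66 GB file size. The `ratio` helper is hypothetical and only for illustration. The later totals come out close to 2x and 3x the file size, which might mean the whole file is being re-queued after each failed attempt, but that is only my guess and the log would need to confirm it.

```shell
#!/bin/sh
# Hypothetical helper: print total/file_size to two decimals.
ratio() {
  awk -v t="$1" -v f="$2" 'BEGIN { printf "%.2f\n", t / f }'
}

file_gb=66
# Totals (in GB) as reported in the description above.
for total in 66 69 134 199; do
  echo "${total} GB is $(ratio "$total" "$file_gb") x the file size"
done
```
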

The server is a beefy 32-CPU machine with 128 GB of RAM and a 2.5 Gbit connection; nothing else is running on it.

I've never seen anything like this happening with rclone before.

Run the command 'rclone version' and share the full output of the command.

rclone v1.64.2
- os/version: ubuntu 22.04 (64 bit)
- os/kernel: 5.15.0-87-generic (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.21.3
- go/linking: static
- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

B2

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone -vv --multi-thread-streams=32 --stats=10s copyto a.gz b2:b/c.gz --log-file=rclone.log

The rclone config contents with secrets removed.

[b2]
type = b2
account = x
key = x