Perfect, and you did most of it yourself by cleverly building on my curious questions.

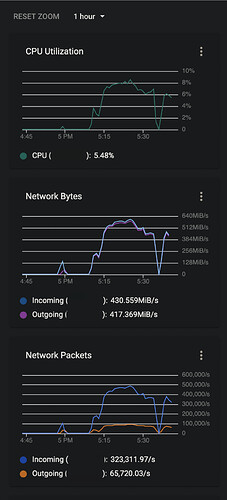

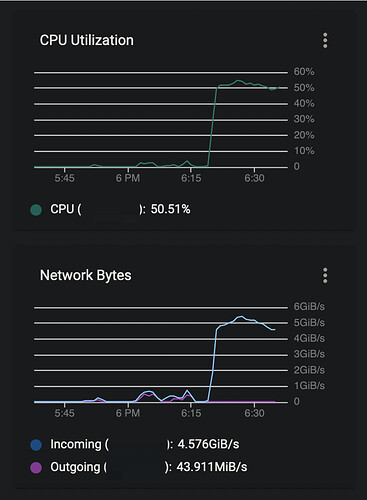

I would consider a sustained data transfer of 6 GiB/s very good. That requires good end-to-end hardware, software and setup. Your download is now reaching about 80% of the result from speedtest.com. Not bad at all when you compare a controlled file transfer (with HTTPS encryption, directory traversal, checksums, etc.) with a raw HTTP transfer of random data.

Also remember that the number you see in rclone is only the size of the files transferred. It doesn't show all the overhead communication needed to perform the file transfers, such as directory listings, reading/storing hash sums, etc.

Perhaps we can improve on this; I have some ideas you can test.

I would

- increase transfers to 128 based on your GDrive download test (5632/1400*32); I know that this is upload, but it is a good first guess.

- typically use 2 to 4 times as many checkers as transfers when copying/syncing, especially when the number of files to be transferred is lower than the number of files in the target.

- optimize the Google Drive tuning parameters based on forum consensus, that is, add

--drive-pacer-min-sleep=10ms --drive-pacer-burst=200

- keep the default --drive-chunk-size, unless you have tested and found a significant improvement. Note that the effect may change with the number of transfers, so it needs to be retested whenever you change --transfers.
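The arithmetic behind the first two suggestions can be sketched in a few lines of shell. The three numbers are the ones in the formula above (5632/1400*32); the variable names are only illustrative, and the 2x to 4x checker range is the rule of thumb from the list, not a hard limit.

```shell
#!/bin/sh
# Numbers taken from the formula in the list above; names are illustrative.
reference=5632        # first figure in the formula
measured=1400         # second figure in the formula
base_transfers=32     # --transfers value the formula scales from

# Multiply before dividing so integer arithmetic keeps precision,
# then let the division round down.
transfers=$(( reference * base_transfers / measured ))

# Rule of thumb from the list: 2 to 4 times as many checkers as transfers.
checkers_low=$(( transfers * 2 ))
checkers_high=$(( transfers * 4 ))

echo "transfers=$transfers checkers=$checkers_low..$checkers_high"
```

This is only a first guess for a starting point; the actual sweet spot still has to be found by testing.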

So something like this might be faster:

rclone -v copy --disable-http2 --checkers=256 --transfers=128 --drive-pacer-min-sleep=10ms --drive-pacer-burst=200 GCSTakeout:bucket rGDrive:folder --progress

or this (with large chunk-size and fewer transfers to save memory):

rclone -v copy --disable-http2 --checkers=128 --transfers=64 --drive-chunk-size=512M --drive-pacer-min-sleep=10ms --drive-pacer-burst=200 GCSTakeout:bucket rGDrive:folder --progress

I don't know if you clear the target folder before each test. If not, you may want to consider adding --ignore-times to force a comparable retransfer of all files.

If one of them is faster, then tweak the transfers, checkers and chunk size up/down until you find the sweet spot.
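One way to organize that tweaking is to generate the candidate commands first and then run them one by one. The sketch below only prints commands and does not start rclone. The helper name `build_cmd`, the list of values and the fixed 2x checker ratio are assumptions for illustration, and --ignore-times is included on the assumption that the target folder is not cleared between runs.

```shell
#!/bin/sh
# Hypothetical helper: print the rclone command for one --transfers value.
# Checkers are fixed at 2x transfers here (an assumption; try up to 4x too).
build_cmd() {
    transfers=$1
    checkers=$(( transfers * 2 ))
    echo "rclone -v copy --disable-http2 --ignore-times" \
         "--checkers=$checkers --transfers=$transfers" \
         "--drive-pacer-min-sleep=10ms --drive-pacer-burst=200" \
         "GCSTakeout:bucket rGDrive:folder --progress"
}

# Candidate values around the suggested 128; adjust the list as results come in.
for t in 64 128 192; do
    build_cmd "$t"
done
```

Run each printed command separately and note the sustained rate rclone reports, so the runs stay comparable.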

You may also be able to optimize the command to your specific data/situation. Here are some flags to consider/test:

--no-check-dest

--no-traverse

Here it is again important to stick to the KISS principle: test/add only one flag at a time, and keep only the ones that really make a significant difference.
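As a rough sketch of that one-flag-at-a-time approach, the snippet below prints a baseline command plus one variant per extra flag, never combining them. The baseline is the first example command from above; the function name `variants` is made up for illustration.

```shell
#!/bin/sh
# Baseline: the first example command from this post.
base="rclone -v copy --disable-http2 --checkers=256 --transfers=128 --drive-pacer-min-sleep=10ms --drive-pacer-burst=200 GCSTakeout:bucket rGDrive:folder --progress"

# Hypothetical helper: print the baseline, then one variant per extra flag.
variants() {
    for extra in "" "--no-check-dest" "--no-traverse"; do
        # ${extra:+ $extra} appends the flag only when it is non-empty.
        echo "$base${extra:+ $extra}"
    done
}

variants
```

Compare each variant against the baseline only, and keep a flag only if the difference is clearly larger than the run-to-run variation.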

Note: I don't use Gdrive that much, but my impression is that you can only upload 750 GB per 24 hours. Are you aware of this?