hi,

About that config file: you should use your own client ID/secret.

https://rclone.org/drive/#making-your-own-client-id

That's pretty much the fix here, and it's why we ask for that information up front. We've both wasted a lot of time: the default rclone keys are oversubscribed, and that's why you get those messages.

That's also why a tpslimit of 10 won't help.

Please make your own client ID/secret and retest as that will greatly impact your performance.

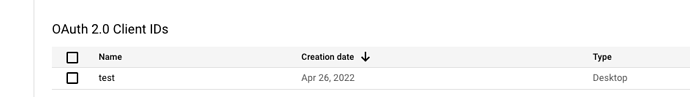

Guys, I obviously did create a project back in the day. I removed my details from the pasted conf file, since anyone could simply copy/paste them to get access to my Google Drive!

Once again, this started to drop about 10 days ago, and I have been using rclone for years.

I will make sure to re-follow your guide, as something may have broken when I moved to my new server. But I usually just transferred the rclone.conf from one server to another and got all my remotes back without any issue.

I will check what happens once I have reconfigured rclone with my client ID from scratch.

I did find this, though:

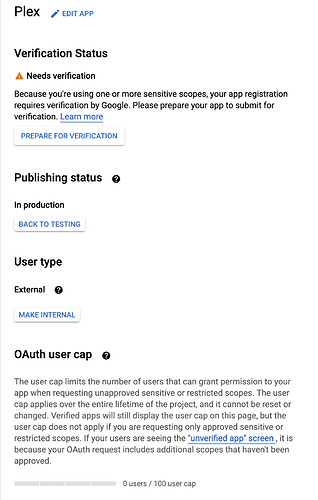

It looks like Google has to re-validate something?

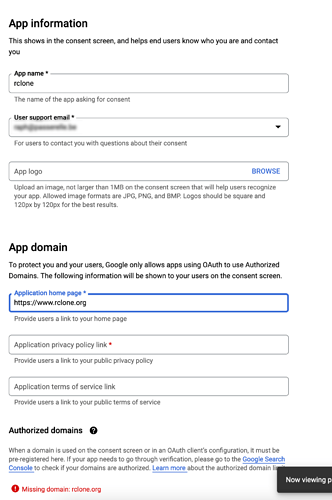

When I try to reconfigure my OAuth consent screen, I am asked for more information than what is listed in your tutorial: it asks me to enter a valid domain, etc.

Any clue?

If you don't tell us it exists, how would we possibly know it's there when it's not included? We ask you to redact only the secret part so that we can see it exists. It isn't obvious by any means, as most folks do not make one.
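A minimal sketch of redacting instead of deleting, so the key names stay visible in a pasted config. It assumes plain `key = value` lines; the helper name `redact_conf` and the list of keys treated as sensitive are illustrative choices, not something rclone provides.

```shell
# Hypothetical helper: print an rclone.conf with sensitive values masked.
# Key names are kept, so a reader can still see that e.g. client_id is set.
redact_conf() {
  sed -E 's/^(client_id|client_secret|token|password|password2)([[:space:]]*=[[:space:]]*).*/\1\2REDACTED/' "$1"
}

# Usage: redact_conf /path/to/rclone.conf
# (rclone config file prints the path of the config in use)
```

The original file is left untouched; only the printed copy is masked, and it is still worth reading the output once before posting it.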

Any chance you can post the actual screenshot I asked about and shared an example of? That's the bit I'm looking for...

But I have now deleted the old one, as it needed verification, and I need to create a new one. It keeps asking me to enter a domain, etc.

OK so I created a totally new client ID and removed the "sensitive" thingy in order to get faster validation.

I recreated a totally new rclone config using the new clientID and secret.

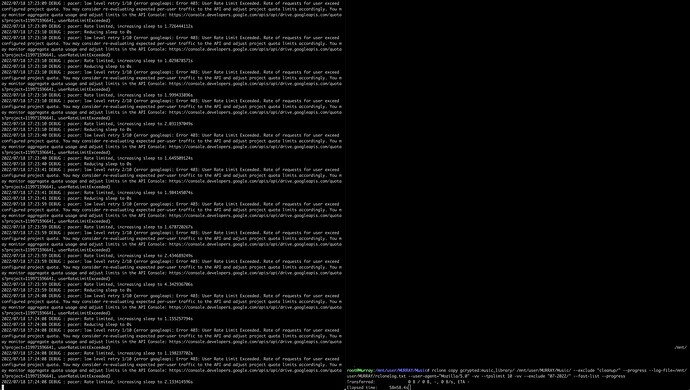

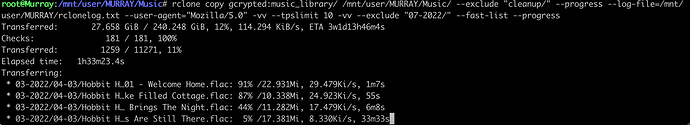

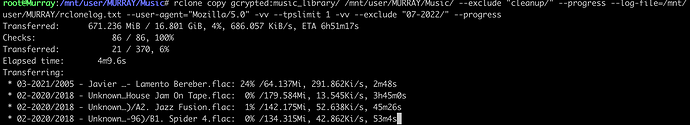

Running this now:

rclone copy gcrypted:music_library/ /mnt/user/MURRAY/Music/ --exclude "cleanup/" --progress --log-file=/mnt/user/MURRAY/rclonelog.txt --user-agent="Mozilla/5.0" -vv --tpslimit 10 -vv --exclude "07-2022/" --fast-list

Let's see if it keeps crashing! I'll keep you posted.

By the way, thank you so much for your efforts helping me here, guys. It is MUCH appreciated.

Deleted the old what? The client ID/secret? The consent screen?

Are you a GSuite / Education / Personal user?

The errors you are seeing despite the TPS limit "look" like you aren't using a client ID/secret that's connected, which would explain why the TPS limit does not seem to work.

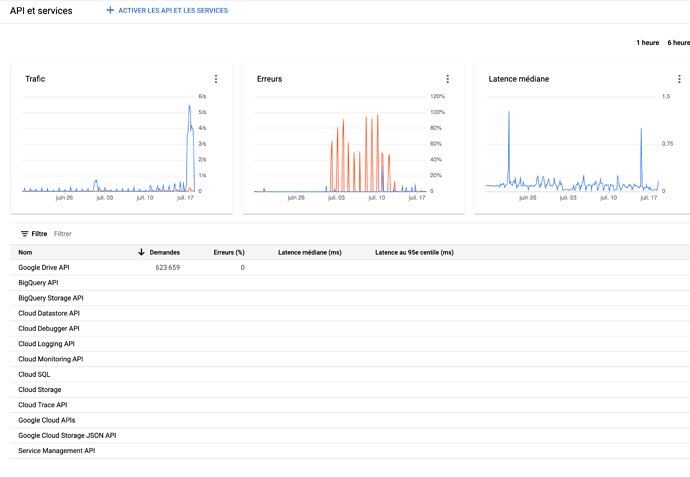

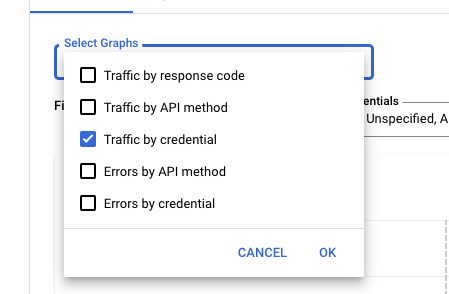

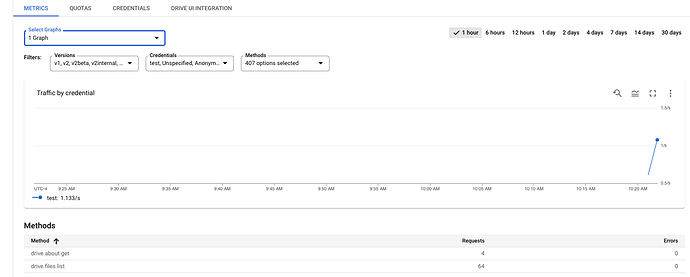

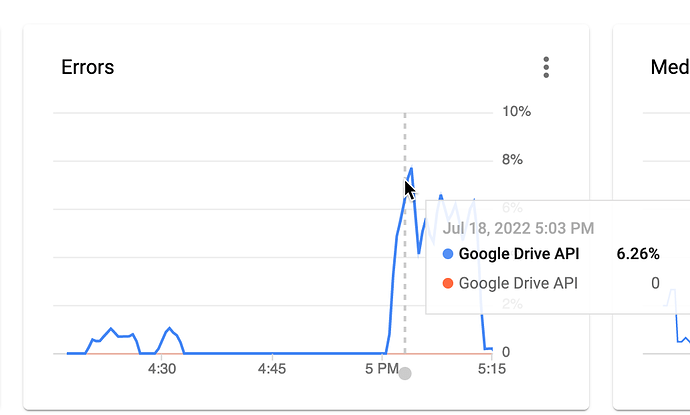

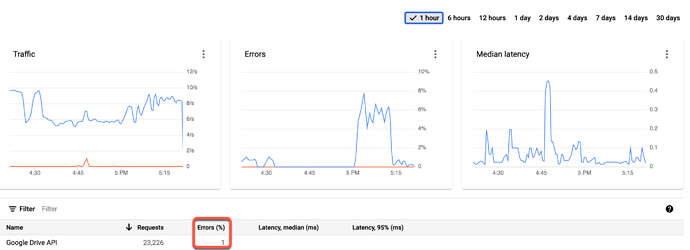

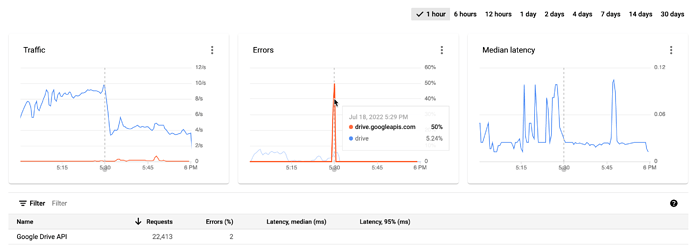

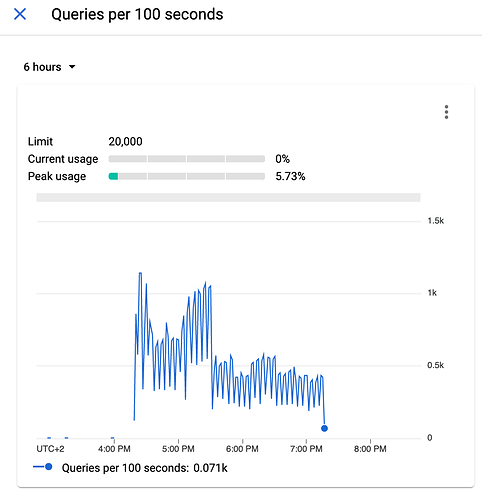

My app is just called test, as I don't use Google Drive anymore. You should be able to toggle by Credential in the screenshot I'm sharing and set the window to something like 6 hours or 24 hours, depending on when you got the errors. The more exact you can be, the better the graph can help, as it won't average things out as much.

and my test app.

If you reproduce in the 1 hour or 6 hour window, that should mirror what you are seeing in the logs if you are being rate limited. If you are not seeing traffic, the client ID/Secret isn't connected.

Here you can see the peaks from the last two test copies I ran. The second is still running.

rclone copy gcrypted:music_library/ /mnt/user/MURRAY/Music/ --exclude "cleanup/" --progress --log-file=/mnt/user/MURRAY/rclonelog.txt --user-agent="Mozilla/5.0" -vv --tpslimit 10 -vv --exclude "07-2022/" --fast-list --progress

Transferred: 0 B / 0 B, -, 0 B/s, ETA -

Elapsed time: 8m33.4s

I guess something happens in the background, but I'm not sure what, exactly.

The log shows a lot of 403 errors, but all of them seem to be 1/10 and, as you can see, I'm not hitting the limits when checking the API graphs. Right?

If you are seeing 403s in the log file and not seeing them in the Google Admin Console, something is wrong; I don't think you are actually using the client ID/secret.

If you recreated something, you may want to run:

rclone config reconnect YOURREMOTE:

And let it refresh and see.
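A quick way to check whether a remote actually carries its own client ID is to look at its config section. The following is a minimal sketch, assuming the output of `rclone config show` is piped in; the function name `has_client_id` is hypothetical. If the remote in use is a wrapper such as a crypt remote, the client ID would sit on the underlying drive remote, so that is the one to check.

```shell
# Hypothetical helper: succeed if the config text on stdin
# contains a client_id line with a non-empty value.
has_client_id() {
  grep -Eq '^client_id[[:space:]]*=[[:space:]]*[^[:space:]]+'
}

# Usage (YOURREMOTE is a placeholder, as in the post above):
#   rclone config show YOURREMOTE | has_client_id && echo "custom client ID configured"
```

This only confirms that a client ID is present in the config, not that Google accepts it; the traffic graph in the console remains the real confirmation.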

Is something else using that oauth ID? I'd make one specific for what you are doing so you are 1000% sure that it is using the right one and you can see traffic in the console:

You can make as many apps as you need, and they all tie back to the user anyway, so there's no harm and it helps to separate traffic.

Since I deleted the only one I had before, there is no way anything else could be using this client ID.

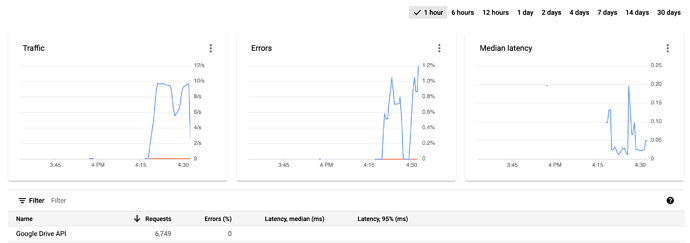

I can see errors:

It's just that the error rate is quite low:

Since I reconfigured rclone using the newly created client ID, I guess tpslimit now works, and that's the reason why my rclone client is scanning files and showing me the excludes one by one, without having started the actual copy yet?

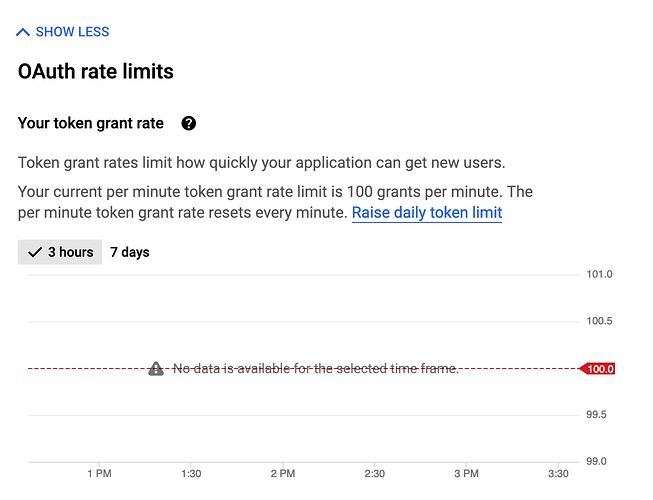

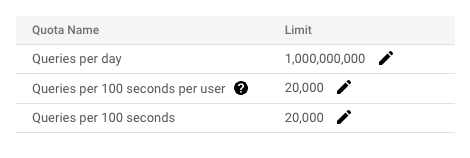

If your quotas are the defaults:

I still don't understand: if you are seeing the app name properly on that screen and the counts are incrementing, then with a small TPS limit of 10 there shouldn't be any rate limiting going on.

I'd be interested to see whether it eventually breaks if you start at, say, 1 and work your way up. If so, what breaks?

If it fails at 1, for example, either Google has something busted for you or rclone isn't honoring the TPS limit.
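The step-up test could be scripted roughly as below. This is a sketch under assumptions: 403 lines in the rclone log are assumed to contain the text `Error 403`, and the function names, the list of TPS values, and the 10-minute cap per run are illustrative.

```shell
# Count log lines that mention a 403 from the Google API.
# Assumption: such lines contain the text "Error 403".
count_403() {
  grep -c 'Error 403' "$1" || true   # grep -c prints 0 but exits 1 on no match
}

# Run the same copy at increasing --tpslimit values, one log file per run.
sweep_tpslimit() {
  local src="$1" dst="$2" tps log
  for tps in 1 2 3 4 5 10; do
    log="rclone-tps-${tps}.log"
    : > "$log"                       # start each run with an empty log
    rclone copy "$src" "$dst" --tpslimit "$tps" --max-duration 10m \
      -vv --log-file="$log" || true  # keep sweeping even if a run exits non-zero
    echo "tpslimit=${tps} 403s=$(count_403 "$log")"
  done
}

# Usage: sweep_tpslimit gcrypted:music_library/ /mnt/user/MURRAY/Music/
```

Because `rclone copy` skips files that are already present, later runs do different work than earlier ones, so the counts are only a rough indicator of where 403s start to appear.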

Aaaaand here we are again:

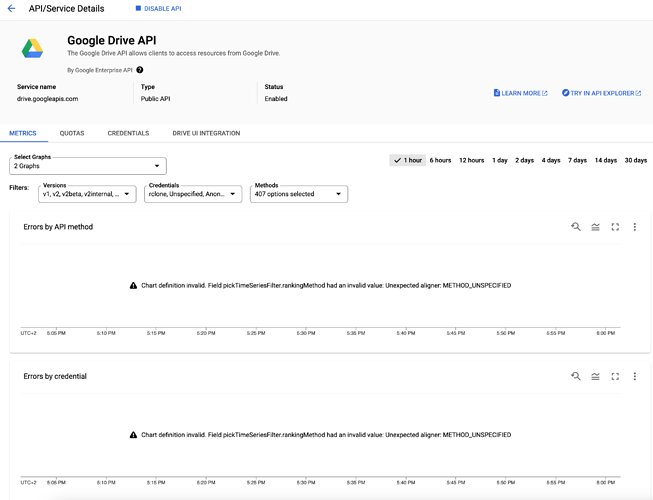

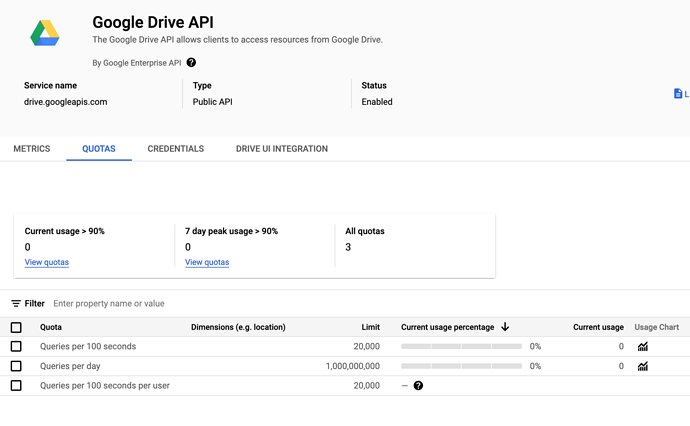

Once I click on Google API, I reach this screen:

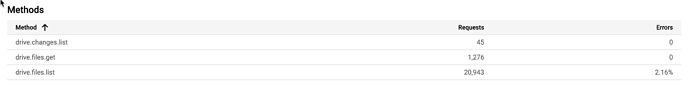

I can't see any graphs there, only the following table:

And here are the quotas:

But once again: I re-created the client ID and re-created the remote with rclone config, using the new client ID and secret, and the behavior is the same again. It starts at full speed, then drops to a few kilobytes per second.

I restarted the transfer using tpslimit=1 and it immediately started copying at super low rates.

So we shouldn't mix issues here: if you are not getting rate limited, the other thread is more applicable:

Rclone mount random slow speeds - Help and Support - rclone forum

Google has been slow for just about everyone at different spots around the world.

If the rate limiting issue isn't happening, I'd read that other thread as that's more relevant.

Running it with only 1 query per second seems to avoid the 403s entirely, but the bandwidth still drops. I don't even reach a kbit/s now.

Not sure what the guys on the other thread are discussing; I'll have to dig into it.

But in short, it seems like Google is capping us, right? Or is it a peering issue that suddenly appeared? I really never had any issue downloading my files. The only thing that changed is that I decided to pull my online content back locally. Maybe they cap people who download more than XX terabytes in a short period of time?

No. It just depends on which IP www.googleapis "decides" to answer. Some are really slow.

And this is something new, right? I've never, ever had an issue in the past.

Anyway, let's go to the other thread, I guess.

Still, there is something weird. I don't believe my issue is due to slow Google servers.

Why do I get all these "User rate limit exceeded" events when I can see I'm actually using only 6% of the max?

I just did another test. I used the same user (same Google account) and tried to start a copy to a NEWLY created team drive.

I simply copied a 100 MB test file (an rclone log, for instance). As soon as I started the copy, I got the exact same 403 errors again. So my guess is that it is user related. What other type of API limit could I be reaching? And where should I go to display the graphs?

There isn't any place to easily see exactly what is being rate limited and why because Google doesn't share that. You can see the aggregate values over time / per 100 seconds, but that's about it.

Given that nobody else is reporting rate limiting, there aren't many other ideas I can think of:

- You aren't using what you think you are, although your screenshots make that seem unlikely. I can't be sure.

- Your API is messed up and you'd need to ask Google Support. Doubtful they will say much.

- You hit an odd regression with a version. You can downgrade and test whether earlier versions produce the same issue or not.

You said 1 didn't give you 403s, but you don't seem to have tried 2, 3, 4, 5, etc. to get a good test baseline.

The speeds aren't super relevant as there is a whole thread about bad speeds with Google so let's not mix issues.

You are right, I will try --tpslimit 2, 3, 4, ... and see when the 403s appear again.

Question: does tpslimit apply to every single aspect of the API? I mean file list requests, file names, copies, etc.?