What is the problem you are having with rclone?

I am in the process of downloading a large amount of data I had stored in the cloud to my new Unraid server, mostly ROMs and other small files. The problem is that it amounts to several hundred thousand files, and the file-checking step seems to be what makes me hit the API rate limits.

FYI: I've checked, and my quota should allow up to 100,000 requests per 100 seconds.
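For reference, that quota works out to the average rate below. This is only arithmetic on the figure quoted above; the second error further down mentions a per-user quota, which may be lower than the project-wide number.

```shell
#!/bin/sh
# Quota figure quoted above: 100000 requests per 100-second window.
quota_requests=100000
window_seconds=100

# Average sustained rate that quota allows (integer requests per second).
per_second=$((quota_requests / window_seconds))
echo "average allowed rate: ${per_second} requests/s"
```

This prints `average allowed rate: 1000 requests/s`.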

I start the copy with -vv and can see very quickly that I start getting rate-limit errors from the pacer, telling me the limit has been reached. As soon as this happens, my download speed (45 MB/s max at home) starts to drop and ends up at a terrible 600 KB/s or so, while I keep getting the following errors with ever-longer sleep values:

2022-07-16 00:00:46 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: Rate Limit Exceeded, rateLimitExceeded)

2022-07-16 00:00:46 DEBUG : pacer: Rate limited, increasing sleep to 16.223130348s
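A sketch of why the speed collapses, assuming the pacer follows the exponential backoff Google recommends for the Drive API (roughly 2^n seconds plus random jitter after n consecutive rate-limit errors). This illustrates that backoff scheme only and is not rclone's actual code; under that assumption, the logged 16.2 s sleep would be consistent with 2^4 s plus jitter, and every further rate-limit error roughly doubles the wait.

```shell
#!/bin/sh
# Hypothetical illustration of exponential backoff (2^n seconds + jitter).
# Not taken from rclone's source; the jitter term is omitted for simplicity.
n=0
while [ "$n" -le 4 ]; do
  base=$((1 << n))   # 1, 2, 4, 8, 16 seconds
  echo "consecutive rate-limit error $n: sleep ${base}s + jitter"
  n=$((n + 1))
done
```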

I also got another, more detailed version of the error when copying music files that are not stored on a shared drive:

"2022-07-15 23:28:15 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console: userRateLimitExceeded)"

Run the command 'rclone version' and share the full output of the command.

rclone v1.59.0

- os/version: slackware 15.0 (64 bit)

- os/kernel: 5.15.46-Unraid (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.18.3

- go/linking: static

- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Google Drive Enterprise (source, where the files are stored)

Unraid (local destination folder)

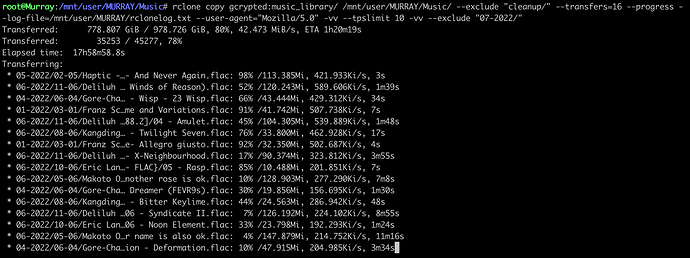

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone copy team2:Games/No-Intro/ /mnt/user/Emulation/Roms/ --progress --size-only --transfers=16 -vv
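A possible throttled variant of the command above, sketched under assumptions: --tpslimit (a cap on HTTP transactions per second), --tpslimit-burst, --checkers and --fast-list are real rclone flags, but the values below are untested guesses, not tuned to this quota. The script only prints the command unless DRY_RUN=0 is set; DRY_RUN is a hypothetical variable of this sketch, unrelated to rclone's own --dry-run flag.

```shell
#!/bin/sh
# Untested sketch: same copy, with fewer parallel checkers/transfers and a
# transactions-per-second cap. Flag values are guesses, not tuned.
set -- rclone copy team2:Games/No-Intro/ /mnt/user/Emulation/Roms/ \
  --progress --size-only -vv \
  --transfers=4 --checkers=4 \
  --tpslimit=10 --tpslimit-burst=10 \
  --fast-list

# DRY_RUN is a hypothetical switch for this sketch: print the command by
# default, and run rclone only when DRY_RUN=0.
if [ "${DRY_RUN:-1}" != 0 ]; then
  echo "$@"
else
  "$@"
fi
```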

A log from the command with the -vv flag

The only relevant errors are the ones pasted above.

So my question is: how can I download the rest of my files at a decent speed?

Thanks!