What is the problem you are having with rclone?

rclone consumes a lot of memory when running a copy from one Azure Blob Storage account to another.

IIUC, by my basic calculation, memory usage should be roughly (with the default azureblob upload concurrency of 16 and chunk size of 4Mi):

TRANSFERS * RCLONE_AZUREBLOB_UPLOAD_CONCURRENCY * RCLONE_AZUREBLOB_CHUNK_SIZE = 20 * 16 * 4Mi = 1280Mi
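To make the estimate explicit, here is a small Python sketch of the same arithmetic. It assumes the azureblob defaults upload_concurrency=16 and chunk_size=4Mi, because neither is set in the command or the env vars below. It also assumes the "15Gb" reported further down means 15 GiB. It only reproduces my back-of-the-envelope estimate; it does not model how rclone actually allocates memory.

```python
# Back-of-the-envelope estimate of chunk buffers held by rclone during upload.
# Assumptions: rclone v1.67 azureblob defaults upload_concurrency=16 and
# chunk_size=4Mi (neither is overridden in this setup).
MI = 1024 * 1024

transfers = 20            # --transfers 20 from the command line
upload_concurrency = 16   # assumed RCLONE_AZUREBLOB_UPLOAD_CONCURRENCY default
chunk_size = 4 * MI       # assumed RCLONE_AZUREBLOB_CHUNK_SIZE default


def expected_buffer_bytes(transfers: int, concurrency: int, chunk: int) -> int:
    """Upper bound on chunk buffers if every transfer is a multipart upload
    using all of its concurrency slots at the same time."""
    return transfers * concurrency * chunk


estimate = expected_buffer_bytes(transfers, upload_concurrency, chunk_size)
print(f"estimate = {estimate // MI}Mi")

# Assumption: the "15Gb" seen in the metrics means 15 GiB.
observed = 15 * 1024 * MI
print(f"observed / estimate = {observed / estimate:.1f}x")
```

With these numbers the estimate is 1280Mi, and the observed usage is about 12 times that.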

Run the command 'rclone version' and share the full output of the command.

```
# rclone --version
rclone v1.67.0
- os/version: amazon 2 (64 bit)
- os/kernel: 3.10.0-1160.95.1.el7.x86_64 (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.22.4
- go/linking: static
- go/tags: none
```

Which cloud storage system are you using? (eg Google Drive)

azureblob

The command you were trying to run (eg rclone copy /tmp remote:tmp)

```
/usr/local/bin/rclone copy --metadata --stats 60m --stats-log-level ERROR --transfers 20 --log-level ERROR --use-json-log --retries 1 src:<container_name> dst:<container_name>
```

The rclone config contents with secrets removed.

I do not use a config file. Everything is passed either as command-line arguments or as environment variables, so here are the environment variables I use:

```
# related to src and dst
'AZURE_SRC_STORAGE_ACCOUNT'
'AZURE_SRC_CONTAINER'
'AZURE_SRC_STORAGE_SAS_TOKEN'
'AZURE_DST_STORAGE_ACCOUNT'
'AZURE_DST_CONTAINER'
'AZURE_DST_STORAGE_SAS_TOKEN'

# related to rclone configuration
'RCLONE_CONFIG_SRC_TYPE': 'azureblob'
'RCLONE_CONFIG_SRC_SAS_URL': <src_sas_url>
'RCLONE_CONFIG_DST_TYPE': 'azureblob'
'RCLONE_CONFIG_DST_SAS_URL': <dst_sas_url>
'RCLONE_AZUREBLOB_NO_CHECK_CONTAINER': 'true'
```
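For anyone trying to reproduce this setup, here is a hypothetical Python helper that builds the same argument list and environment. The function name and the assumption that the agent launches rclone through `subprocess` are illustrative, not taken from our code, and the AZURE_* variables are left out. rclone turns variables of the form RCLONE_CONFIG_<NAME>_TYPE into a remote named `<name>` (lowercased), which is why the command can refer to `src:` and `dst:` without a config file.

```python
# Hypothetical helper: builds the rclone copy command and env-var-only
# remote configuration described above. It does not run rclone.
import os
import shlex


def build_rclone_invocation(src_sas_url: str, dst_sas_url: str, container: str):
    env = dict(os.environ)
    # RCLONE_CONFIG_SRC_* / RCLONE_CONFIG_DST_* define remotes "src" and "dst"
    env.update({
        "RCLONE_CONFIG_SRC_TYPE": "azureblob",
        "RCLONE_CONFIG_SRC_SAS_URL": src_sas_url,
        "RCLONE_CONFIG_DST_TYPE": "azureblob",
        "RCLONE_CONFIG_DST_SAS_URL": dst_sas_url,
        "RCLONE_AZUREBLOB_NO_CHECK_CONTAINER": "true",
    })
    argv = [
        "/usr/local/bin/rclone", "copy", "--metadata",
        "--stats", "60m", "--stats-log-level", "ERROR",
        "--transfers", "20", "--log-level", "ERROR", "--use-json-log",
        "--retries", "1",
        f"src:{container}", f"dst:{container}",
    ]
    return argv, env


argv, env = build_rclone_invocation("<src_sas_url>", "<dst_sas_url>", "<container_name>")
print(shlex.join(argv))
# subprocess.run(argv, env=env, check=True) would start the actual copy
```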

A log from the command with the -vv flag

I don't have -vv logs, because this is happening for one of our customers and the logs contain confidential data, but I do have the output of rclone stats:

```json
{
  "message": "rclone stats",
  "timestamp": "2024-09-28T04:45:39.481965Z",
  "level": "INFO",
  "pathname": "/cloud_migration_agent/cloud_agent/cloud_agent.py",
  "line_number": 232,
  "process_id": 1,
  "threadName": "MainThread",
  "bytes": 64232164651661,
  "checks": 63159142,
  "deletedDirs": 0,
  "deletes": 0,
  "elapsedTime": 248400.017623065,
  "errors": 0,
  "eta": null,
  "fatalError": false,
  "renames": 0,
  "retryError": false,
  "serverSideCopies": 0,
  "serverSideCopyBytes": 0,
  "serverSideMoveBytes": 0,
  "serverSideMoves": 0,
  "speed": 341611303.33405966,
  "totalBytes": 64221630675261,
  "totalChecks": 63169158,
  "totalTransfers": 495339,
  "transferTime": 248388.317982617,
  "transfers": 495338
}
```

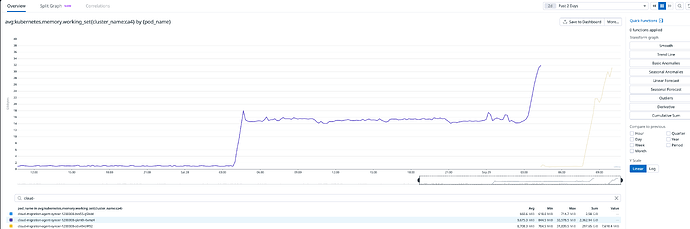

**This is where the first jump in memory consumption happened, from 1GB to 15GB; see the metrics.**

```json
{
  "message": "rclone stats",
  "timestamp": "2024-09-28T03:45:39.579526Z",
  "level": "INFO",
  "pathname": "/cloud_migration_agent/cloud_agent/cloud_agent.py",
  "line_number": 232,
  "process_id": 1,
  "threadName": "MainThread",
  "bytes": 63436603913425,
  "checks": 62210374,
  "deletedDirs": 0,
  "deletes": 0,
  "elapsedTime": 244800.022861132,
  "errors": 0,
  "eta": null,
  "fatalError": false,
  "renames": 0,
  "retryError": false,
  "serverSideCopies": 0,
  "serverSideCopyBytes": 0,
  "serverSideMoveBytes": 0,
  "serverSideMoves": 0,
  "speed": 395717898.7707056,
  "totalBytes": 63433873851477,
  "totalChecks": 62220389,
  "totalTransfers": 494910,
  "transferTime": 244788.393254615,
  "transfers": 494907
}
```

IIUC, while rclone was only checking files that already existed at the destination, it did not consume much memory, but once it reached files that needed to be transferred, memory consumption rose sharply. To be honest, though, the stats also show some transfers before the memory jump, so I am not sure what happened here.
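One way to check how much was transferred around the jump is to subtract the 03:45 snapshot from the 04:45 one. The Python sketch below copies the counters from the two stats blocks above; it is plain arithmetic and assumes only that these counters are cumulative since the start of the run.

```python
# Difference between two "rclone stats" snapshots from the same run.
# Counter values are copied from the 03:45 and 04:45 UTC stats blocks.
from datetime import datetime

earlier = {
    "timestamp": "2024-09-28T03:45:39.579526Z",
    "bytes": 63436603913425,
    "checks": 62210374,
    "transfers": 494907,
}
later = {
    "timestamp": "2024-09-28T04:45:39.481965Z",
    "bytes": 64232164651661,
    "checks": 63159142,
    "transfers": 495338,
}


def parse_ts(ts: str) -> datetime:
    # datetime.fromisoformat before Python 3.11 rejects a trailing "Z"
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def delta(a: dict, b: dict) -> dict:
    """Per-counter difference b - a, plus the wall-clock gap in seconds."""
    out = {k: b[k] - a[k] for k in ("bytes", "checks", "transfers")}
    out["seconds"] = (parse_ts(b["timestamp"]) - parse_ts(a["timestamp"])).total_seconds()
    return out


d = delta(earlier, later)
print(d)
```

On these numbers, the hour between the two snapshots saw 431 completed transfers, 948,768 checks and 795,560,738,236 bytes (about 796 GB) copied.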

Our code then restarted the rclone pod for the same storage account, and this time it started consuming a lot of memory right from the beginning; see the next graph in the timeline.

This is the output from the next run:

```json
{
  "message": "rclone stats",
  "timestamp": "2024-09-29T06:26:24.507395Z",
  "level": "INFO",
  "pathname": "/cloud_migration_agent/cloud_agent/cloud_agent.py",
  "line_number": 232,
  "process_id": 1,
  "threadName": "MainThread",
  "bytes": 372150918280,
  "checks": 84822876,
  "deletedDirs": 0,
  "deletes": 0,
  "elapsedTime": 18000.007430341,
  "errors": 0,
  "eta": 0,
  "fatalError": false,
  "renames": 0,
  "retryError": false,
  "serverSideCopies": 0,
  "serverSideCopyBytes": 0,
  "serverSideMoveBytes": 0,
  "serverSideMoves": 0,
  "speed": 209433916.89574507,
  "totalBytes": 372150918280,
  "totalChecks": 84832890,
  "totalTransfers": 81273,
  "transferTime": 5012.228343695,
  "transfers": 81273
}
```