rclone v1.63, Windows on one computer and Ubuntu on the other.

edit: To clarify, these computers are in different states with different internet connections.

Uploading to Dropbox, in either sync or async batch mode, eventually results in me hitting the "too many requests, wait 300 seconds" message.

But the commands can't reliably recover from it, which leaves them stuck for all eternity.

It worked perfectly with only one computer.

Stopping them both, waiting 5-10 minutes, and restarting one of them always works perfectly.

My conclusion: the wait timer for Dropbox doesn't anticipate two different computers hitting the same Dropbox account through the same personal Dropbox API app at the same time.

Is there a flag I could use to extend this timer in a way that solves all my problems?

Example command:

.\rclone copy -v --transfers 16 --bwlimit 110M --dropbox-batch-mode async --drive-chunk-size 128M --max-transfer 1500G "googledrive:blahblahblah" "dropboxsmugglefistgames:/blahblahblah"

Alternatively, maybe I could move one of these two computers to a different API and target the same Dropbox account through two APIs, and somehow get better performance? (Each computer would use a different one in this case.)

edit: This happened 2 or 3 times, but now I'm repeating the scenario with two API tokens instead of a shared one. It could be an hour or two until I get stuck again, or this tactic may already have solved it.

I know some people use multiple VPSes during a migration from Google to Dropbox, though, so surely it's possible. I'm not sure which flag or setting I might be getting wrong, or whether I just have more small files than the average person.

edit: Hit the first 300-second wait and recovered this time. Like I said, the issue isn't that it doesn't work; it's that when it breaks, it seems to break forever unless I tap two buttons on my keyboard, which instantly fixes it... BUT the two-API-tokens-for-two-connections trick may also have fixed this issue (I won't be confident about that until tomorrow).

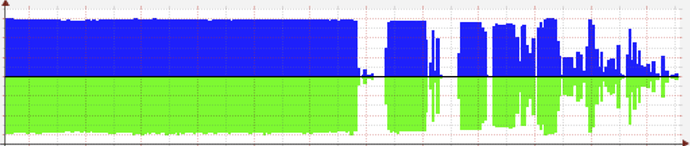

edit2: This shows the problem. The small dips are the 5-minute wait it's supposed to do; the giant holes are when it got stuck forever and didn't resume until I returned to the keyboard to hit Ctrl-C, Up Arrow, Enter.

The giant block on the left is when I was only using one connection, not two (never even a hiccup).

Then again, all of this could just come down to the difference in performance between small files and large files.
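Until there's a proper fix, the Ctrl-C, Up Arrow, Enter workaround could in principle be put on a timer. Below is a minimal sketch of that idea, not something rclone itself provides; `run_with_restarts`, `run_limit_s`, `max_runs` and `pause_s` are hypothetical names. It assumes that a run still going after a fixed time limit is stuck (so it will also kill healthy runs that are merely slow), and it relies on `rclone copy` skipping files that already made it across on the next attempt. It's in Python only because the same script runs on both the Windows and the Ubuntu machine. Note that `subprocess.run` with `timeout` kills the process outright rather than sending Ctrl-C.

```python
import subprocess
import sys
import time
from typing import Optional, Sequence


def run_with_restarts(
    cmd: Sequence[str],
    run_limit_s: float,
    max_runs: int,
    pause_s: float = 0.0,
) -> Optional[int]:
    """Run cmd, killing and restarting it whenever an attempt exceeds run_limit_s.

    Hypothetical helper: it treats "still running after run_limit_s" as "stuck",
    i.e. the manual Ctrl-C / Up Arrow / Enter workaround on a timer.
    Returns the exit code of the first attempt that ends on its own, or None
    if all max_runs attempts hit the time limit.
    """
    for attempt in range(1, max_runs + 1):
        try:
            # On timeout, subprocess.run kills the child, waits for it to exit,
            # then raises TimeoutExpired (a hard kill, not a Ctrl-C).
            return subprocess.run(list(cmd), timeout=run_limit_s).returncode
        except subprocess.TimeoutExpired:
            print(
                f"attempt {attempt}/{max_runs} still running after {run_limit_s}s; restarting",
                file=sys.stderr,
            )
            if attempt < max_runs:
                time.sleep(pause_s)  # optional cool-down before the next attempt
    return None


# Example (hypothetical limits; assumes rclone is on the PATH, otherwise pass
# the full path to rclone.exe on Windows):
# run_with_restarts(
#     ["rclone", "copy", "-v", "--transfers", "16", "--bwlimit", "110M",
#      "--dropbox-batch-mode", "async", "--drive-chunk-size", "128M",
#      "--max-transfer", "1500G",
#      "googledrive:blahblahblah", "dropboxsmugglefistgames:/blahblahblah"],
#     run_limit_s=2 * 3600, max_runs=50, pause_s=300)
```

The time limit is a blunt instrument: anything in flight when it fires is lost and re-uploaded on the next attempt, so it only makes sense if the limit is much longer than a normal 300-second back-off.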