What is the problem you are having with rclone?

I am trying to upload a folder to AWS Glacier as a backup, but the upload fails with an "incorrect region" error.

What is your rclone version (output from `rclone version`)?

```
rclone v1.53.4
os/arch: darwin/amd64
go version: go1.15.6
```

Which OS are you using, and how many bits (e.g. Windows 7, 64 bit)?

macOS 10.15.7

Which cloud storage system are you using? (e.g. Google Drive)

AWS S3 Glacier

The command you were trying to run (e.g. `rclone copy /tmp remote:tmp`)

rclone copy git-cheat-sheet-education.pdf myvault:myvault

The rclone config contents with secrets removed.

```
[myvault]
type = s3
provider = AWS
env_auth = false
access_key_id = {removed}
secret_access_key = {removed}
region = eu-west-1
location_constraint = eu-west-1
acl = private
sse_kms_key_id = {removed}
storage_class = DEEP_ARCHIVE
```

A log from the command with the -vv flag

```
user@macbook ~/Downloads rclone -vv copy git-cheat-sheet-education.pdf myvault:myvault
2021/01/27 18:22:30 DEBUG : rclone: Version "v1.53.4" starting with parameters ["rclone" "-vv" "copy" "git-cheat-sheet-education.pdf" "myvault:myvault"]
2021/01/27 18:22:30 DEBUG : Creating backend with remote "git-cheat-sheet-education.pdf"
2021/01/27 18:22:30 DEBUG : Using config file from "/Users/mvolterra/.config/rclone/rclone.conf"
2021/01/27 18:22:30 DEBUG : fs cache: adding new entry for parent of "git-cheat-sheet-education.pdf", "/Users/mvolterra/Downloads"
2021/01/27 18:22:30 DEBUG : Creating backend with remote "myvault:myvault"
2021/01/27 18:22:31 ERROR : S3 bucket myvault: Failed to update region for bucket: reading bucket location failed: AccessDenied: Access Denied
status code: 403, request id: 1867181C3EEBBF1D, host id: UxTAHKS4lbnboNXHFpqOQDmp1Bx11/rDvKUGoHf3xM5HjKHdaFB4yUFdA4iY7cBqTroULvH0Izo=
2021/01/27 18:22:31 ERROR : Attempt 1/3 failed with 1 errors and: BucketRegionError: incorrect region, the bucket is not in 'eu-west-1' region at endpoint ''
status code: 301, request id: , host id:
2021/01/27 18:22:31 ERROR : S3 bucket myvault: Failed to update region for bucket: reading bucket location failed: AccessDenied: Access Denied
status code: 403, request id: 27399B1D1168A01B, host id: u3nXzkWMn+2O4bNEF29NfahbYGjYIYMb8KJKuUXTUwn8LvUxsp3GIGiidau99O/rxNq5FYO0b3w=
2021/01/27 18:22:31 ERROR : Attempt 2/3 failed with 1 errors and: BucketRegionError: incorrect region, the bucket is not in 'eu-west-1' region at endpoint ''
status code: 301, request id: , host id:
2021/01/27 18:22:32 ERROR : S3 bucket myvault: Failed to update region for bucket: reading bucket location failed: AccessDenied: Access Denied
status code: 403, request id: E90BDAECF9D5A35C, host id: 44iL34tu6kCac7c1c0tClh/INFEO0W063biA90yUujyO9fQINmszis7pgugwI7wFxBIvVAgfCVw=
2021/01/27 18:22:32 ERROR : Attempt 3/3 failed with 1 errors and: BucketRegionError: incorrect region, the bucket is not in 'eu-west-1' region at endpoint ''
status code: 301, request id: , host id:
2021/01/27 18:22:32 INFO :
Transferred: 0 / 0 Bytes, -, 0 Bytes/s, ETA -
Errors: 1 (retrying may help)
Elapsed time: 1.5s
2021/01/27 18:22:32 DEBUG : 8 go routines active
2021/01/27 18:22:32 Failed to copy: BucketRegionError: incorrect region, the bucket is not in 'eu-west-1' region at endpoint ''
status code: 301, request id: , host id:
```

asdffdsa

January 27, 2021, 5:39pm

hello and welcome to the forum,

is that a new bucket created by rclone or a pre-existing bucket?

not sure that it matters, but when i use aws s3 glacier, my config file has region set to the same value as location_constraint

Hi asdffdsa,

BR,

asdffdsa

January 27, 2021, 5:55pm

sure,

where is the bucket located?

https://rclone.org/commands/rclone_mkdir/

as for aws s3, i do this:

1. create a bucket.
2. create an IAM user, locked down to that single bucket with limited permissions.
3. create an rclone remote for using that bucket and IAM user.
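For the "IAM user locked down to that single bucket" step, here is a minimal Python sketch that renders an S3 IAM policy scoped to one bucket. The bucket name `my-backup-bucket` is hypothetical, and the exact action list is an assumption worth comparing against the example policy in the rclone S3 documentation. It includes `s3:GetBucketLocation` because the log in the first post shows rclone's bucket-location lookup being denied. A policy that only grants `glacier:*` covers the Glacier vault API, not S3, so it gives rclone no access to a bucket at all. A remote that sets `sse_kms_key_id` would additionally need KMS permissions for that key, which this sketch does not cover.

```python
import json


def single_bucket_policy(bucket: str) -> dict:
    """Build an IAM policy document limited to one S3 bucket (sketch, not exhaustive)."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions: list the bucket and look up its region.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": bucket_arn,
            },
            {
                # Object-level actions used to upload, read, and delete files.
                # s3:PutObjectAcl is included because the remote sets acl = private.
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                    "s3:DeleteObject",
                ],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "my-backup-bucket" is a hypothetical bucket name.
    print(json.dumps(single_bucket_policy("my-backup-bucket"), indent=2))
```

The bucket and object statements are kept separate because bucket actions apply to `arn:aws:s3:::bucket` while object actions apply to `arn:aws:s3:::bucket/*`.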

my rclone config looks like

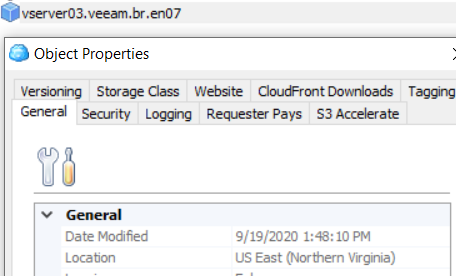

```
rclone config show aws01_iam_vserver03_veeam_br_en07
[aws01_iam_vserver03_veeam_br_en07]
type = s3
provider = AWS
access_key_id =
secret_access_key =
region = us-east-1
storage_class = GLACIER
```

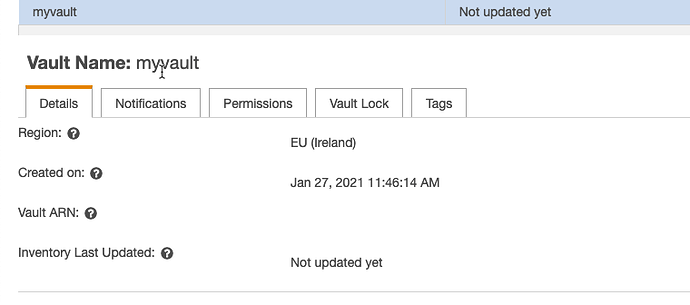

I have created the vault in AWS Glacier (AWS console > Glacier).

This is the IAM user's policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "glacier:*",
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
```

The configuration is now the same as yours, with my keys and Ireland (eu-west-1) as the region, but I still get the same error.

Could it be that it needs 24 hours to take effect?

asdffdsa

January 27, 2021, 6:12pm

where is that bucket located?

asdffdsa

January 27, 2021, 6:19pm

as per the docs, you cannot use a vault with rclone...

https://rclone.org/s3/#glacier-and-glacier-deep-archive

OK! So I need to use rclone to upload to an S3 bucket and then use a lifecycle policy to move the objects from S3 to Glacier. Does that make sense?

If, let's say, I have 250 GB to back up that I will never retrieve once it is in Glacier, do you know roughly what the bill would be?

asdffdsa

January 27, 2021, 6:27pm

not quite right. no need for lifecycle.

1. create a bucket.
2. create an rclone config for that bucket using storage_class = GLACIER.
3. use rclone to upload files to that bucket.
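The three steps above can be sketched in Python: one function renders an rclone.conf section for an AWS S3 remote (modeled on the configs in this thread, secrets left blank), and another builds the rclone command lines. The remote name `s3backup`, bucket `my-backup-bucket`, and source path `/path/to/photos` are hypothetical. `rclone mkdir` is the command linked earlier in the thread, and `--fast-list` is a real rclone flag that lists recursively with fewer requests at the cost of more memory. Nothing is executed unless you call `run_all` yourself.

```python
import configparser
import io
import subprocess


def remote_section(name: str, region: str, storage_class: str) -> str:
    """Render an rclone.conf section for an AWS S3 remote (secrets left blank)."""
    cfg = configparser.ConfigParser()
    cfg[name] = {
        "type": "s3",
        "provider": "AWS",
        "env_auth": "false",
        "access_key_id": "",        # fill in the IAM user's access key
        "secret_access_key": "",    # fill in the IAM user's secret key
        "region": region,
        "location_constraint": region,
        "acl": "private",
        "storage_class": storage_class,  # e.g. GLACIER or DEEP_ARCHIVE
    }
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()


def upload_commands(remote: str, bucket: str, source: str) -> list[list[str]]:
    """Build the rclone commands: create the bucket, then copy files into it."""
    target = f"{remote}:{bucket}"
    return [
        ["rclone", "mkdir", target],
        ["rclone", "copy", source, target, "--fast-list", "-v"],
    ]


def run_all(cmds: list[list[str]]) -> None:
    """Execute each command in order, stopping at the first failure."""
    for cmd in cmds:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical names; print the config and commands instead of running them.
    print(remote_section("s3backup", "eu-west-1", "GLACIER"))
    for cmd in upload_commands("s3backup", "my-backup-bucket", "/path/to/photos"):
        print(" ".join(cmd))
```

The target is always `remote:bucket`, i.e. an S3 bucket, never a Glacier vault; the Glacier part comes only from `storage_class`. The `mkdir` step needs `s3:CreateBucket` permission, so skip it if the bucket was created in the AWS console.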

here is a spreadsheet i once created:

| AWS storage class | Cost/GB per month | Cost/TB per month (1 TB = 1024 GB) | Min. storage days | Use case |
|---|---|---|---|---|
| Standard | $0.02300 | $23.55 | | |
| Standard - Infrequent Access | $0.01250 | $12.80 | | For long-lived but infrequently accessed data that needs millisecond access |
| One Zone - Infrequent Access | $0.01000 | $10.24 | | For re-creatable, infrequently accessed data that needs millisecond access |
| Glacier | $0.00400 | $4.10 | 90 | For long-term backups and archives with retrieval options from 1 minute to 12 hours |
| Glacier Deep Archive | $0.00099 | $1.01 | 180 | For long-term data archiving that is accessed once or twice a year and can be restored within 12 hours |
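To turn the spreadsheet into an estimate for the 250 GB question, the arithmetic is just size × price per GB-month: 250 × $0.00099 ≈ $0.25/month in Glacier Deep Archive, or 250 × $0.004 = $1.00/month in Glacier. The minimal Python sketch below uses the spreadsheet's prices, which may differ by region and over time, counts storage only (no request or retrieval fees), and approximates the minimum-storage charge with 30-day months. The minimum storage days matter because data deleted earlier is still billed for the full minimum.

```python
from typing import Optional

# (USD per GB per month, minimum storage days or None where the table leaves it blank).
# Values copied from the spreadsheet above; real AWS prices vary by region and change over time.
PRICES = {
    "Standard": (0.02300, None),
    "Standard - Infrequent Access": (0.01250, None),
    "One Zone - Infrequent Access": (0.01000, None),
    "Glacier": (0.00400, 90),
    "Glacier Deep Archive": (0.00099, 180),
}


def monthly_cost(gb: float, storage_class: str) -> float:
    """Storage-only cost per month; request (API) and retrieval fees are not included."""
    price_per_gb, _ = PRICES[storage_class]
    return gb * price_per_gb


def minimum_charge(gb: float, storage_class: str) -> Optional[float]:
    """Storage cost for the minimum billed duration, approximating a month as 30 days."""
    price_per_gb, min_days = PRICES[storage_class]
    if min_days is None:
        return None  # the spreadsheet does not list a minimum for this class
    return gb * price_per_gb * min_days / 30


if __name__ == "__main__":
    for name in ("Glacier", "Glacier Deep Archive"):
        print(f"{name}: ${monthly_cost(250, name):.2f}/month, "
              f"at least ${minimum_charge(250, name):.2f} in total")
```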

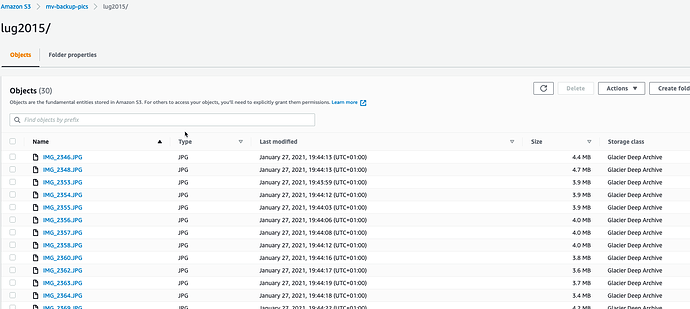

OK, I have done that.

I'm testing with a small folder (~130 MB); let's see what happens.

Thanks a lot for your help, really!

asdffdsa

January 27, 2021, 6:52pm

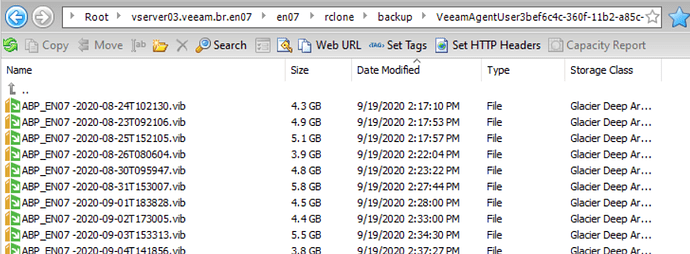

well,

the files uploaded have a storage class of deep archive, not glacier

Yeah, it's even cheaper, isn't it? These are files I really don't need to access: pictures that are on my RAID 1 server. In case of a disaster I will retrieve them.

asdffdsa

January 27, 2021, 6:59pm

yeah, it is cheaper.

and with raid1, you really do need a backup

keep in mind that there are API request fees, so use --fast-list when you can; it lists with fewer requests at the cost of more memory.

system

January 31, 2021, 8:21am

This topic was automatically closed 3 days after the last reply. New replies are no longer allowed.