This follows up on my previous post, "Rclone union: OneDrive Personal: quotaLimitReached: Why?", about the quotaLimitReached error.

I have tried the following policies (following the documentation):

I was able to upload:

Total: 8.044 TiB

Used: 5.798 TiB

Free: 2.246 TiB

Trashed: 55.562 GiB

But now I can no longer upload, because some of the OneDrive Personal accounts have reached their 1 TB storage limit.

Maybe it is related to GitHub issue rclone/rclone #5308, "Union: Retry with the next remote, if there's insufficient free space left".
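To make the idea behind that issue concrete, here is a minimal Go sketch of "retry with the next remote on a quota error". It is not rclone's code: the `upstream` type, `put`, `putWithRetry` and `errQuota` are all hypothetical. Each upstream has the free space the union *believes* it has (possibly stale) and the space really left; when the remote rejects an upload, the sketch moves on to the next candidate instead of failing the transfer.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
)

// errQuota stands in for OneDrive's quotaLimitReached error (hypothetical).
var errQuota = errors.New("quotaLimitReached: Insufficient Space Available")

// upstream is a hypothetical model of one union member.
type upstream struct {
	name         string
	reportedFree int64 // free space the union believes is available (may be stale)
	actualFree   int64 // free space really left on the remote
}

// put simulates an upload: the remote rejects it if it does not really fit.
func (u *upstream) put(size int64) error {
	if size > u.actualFree {
		return errQuota
	}
	u.actualFree -= size
	u.reportedFree -= size
	return nil
}

// putWithRetry orders upstreams by reported free space and, on a quota
// error, retries with the next upstream instead of failing the transfer.
func putWithRetry(ups []*upstream, size int64) (string, error) {
	ordered := append([]*upstream(nil), ups...)
	sort.SliceStable(ordered, func(i, j int) bool {
		return ordered[i].reportedFree > ordered[j].reportedFree
	})
	for _, u := range ordered {
		err := u.put(size)
		if err == nil {
			return u.name, nil
		}
		if !errors.Is(err, errQuota) {
			return "", err // only quota errors trigger a retry
		}
	}
	return "", errQuota
}

func main() {
	const gib = int64(1) << 30
	ups := []*upstream{
		{name: "account_01", reportedFree: 2 * gib, actualFree: 0}, // stale figure
		{name: "account_02", reportedFree: 1 * gib, actualFree: 5 * gib},
	}
	name, err := putWithRetry(ups, 50<<20)
	fmt.Println(name, err) // account_02 <nil>
}
```

Without the retry loop, the first choice (account_01, picked because of its stale free-space figure) would simply fail with the quota error.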

rclone v1.62.2

- os/version: alpine 3.17.0 (64 bit)

- os/kernel: 3.10.108 (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.20.2

- go/linking: static

- go/tags: none

Multiple OneDrive Personal accounts: eight with 1 TB of storage each, and one free account with 5 GB.


rclone sync /usbshare/my_local_storage_docker.hbk/ my_drive_union:usbshare/document.hbk/ --transfers=4 --checkers=20 --delete-before --log-file=/temp/rclone_document.log -vv

Some accounts were omitted below, but they all have the same configuration.

[my_drive_01]

type = onedrive

client_id = f...

client_secret = N...

token = {"access_token":"E..."}

drive_id = 3...

drive_type = personal

[my_drive_02]

type = onedrive

client_id = 8...

client_secret = x...

token = {"access_token":"E..."}

drive_id = b...

drive_type = personal

[...]

[my_drive_union]

type = union

upstreams = my_drive_01:union my_drive_02:union my_drive_03:union my_drive_04:union my_drive_05:union my_drive_06:union my_drive_07:union my_drive_08:union

Log from the command with the -vv flag:

The logs are too long, so I posted them at:

Thank you for any idea!

ncw

April 5, 2023, 10:43am


Looks like you found a bug!

panic: interface conversion: upstream.Entry is nil, not *upstream.Object

goroutine 4004 [running]:

github.com/rclone/rclone/backend/union.(*Object).Update(0xc0008c74d0, {0x24986f8?, 0xc000b020f0}, {0x2482c00, 0xc00053d400}, {0x7fdc7e341750?, 0xc000aba300}, {0xc0007b2f90, 0x1, 0x1})

github.com/rclone/rclone/backend/union/entry.go:76 +0x59c

github.com/rclone/rclone/fs/operations.Copy({0x24986f8, 0xc000b020f0}, {0x24ab7c0, 0xc0002233b0}, {0x24ab830?, 0xc0008c74d0?}, {0xc000c1a0a8, 0x15}, {0x24aa5d0, 0xc000aba300})

github.com/rclone/rclone/fs/operations/operations.go:437 +0x1bea

github.com/rclone/rclone/fs/sync.(*syncCopyMove).pairCopyOrMove(0xc0007ceb40, {0x24986f8, 0xc000b020f0}, 0xc000253778?, {0x24ab7c0, 0xc0002233b0}, 0x76f986?, 0xc000d62ea0?)

github.com/rclone/rclone/fs/sync/sync.go:437 +0x20a

created by github.com/rclone/rclone/fs/sync.(*syncCopyMove).startTransfers

github.com/rclone/rclone/fs/sync/sync.go:464 +0x45
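The panic message above is the Go runtime's error for a single-value type assertion applied to a nil interface value. The sketch below reproduces that class of panic with hypothetical `Entry` and `Object` types (stand-ins, not rclone's actual `upstream` types) and shows the comma-ok form, which reports failure instead of panicking.

```go
package main

import "fmt"

// Entry and Object are hypothetical stand-ins, not rclone's real types.
type Entry interface{ Name() string }

type Object struct{ name string }

func (o *Object) Name() string { return o.name }

// mustObject uses a single-value type assertion, which panics on a nil Entry.
func mustObject(e Entry) *Object { return e.(*Object) }

// asObject uses the comma-ok form, which reports failure instead of panicking.
func asObject(e Entry) (*Object, bool) {
	o, ok := e.(*Object)
	return o, ok
}

// recoverMessage runs f and returns the panic message, if any.
func recoverMessage(f func()) (msg string) {
	defer func() {
		if r := recover(); r != nil {
			msg = fmt.Sprint(r)
		}
	}()
	f()
	return ""
}

func main() {
	var e Entry // nil interface value, like the nil upstream.Entry in the trace
	fmt.Println(recoverMessage(func() { mustObject(e) }))
	// interface conversion: main.Entry is nil, not *main.Object
	_, ok := asObject(e)
	fmt.Println(ok) // false
}
```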

I can't immediately see a fix for this so I'm going to need to be able to reproduce the bug.

Can you try simplifying the config down so you can still reproduce this bug with the smallest possible config?

Thanks

@ncw , thank you for your prompt response.

What I have done:

I created two new free @hotmail.com accounts with 5 GiB each: account_01 and account_02.

On my Ubuntu machine, I created folderA, containing folderB, which contains folderC.

In folderC, I placed 4 GiB of files.

I ran this command:

rclone sync /home/juanb/rclone_union/source/ hotmail_union:rclone_union --progress --log-file=/home/juanb/rclone_union/rclone_union_sync.log

Everything went fine: rclone copied all the files to account_01.

Then I added more content, to reach a total of 5.2 GiB (more than the capacity of one account).

Here is where the randomness comes in. There are two possible outcomes:

1. Sometimes rclone copies the excess files to account_02, so there is no problem.

2. Sometimes rclone copies the new files to account_01 until it reaches the 5 GiB maximum, and then I start getting errors: quotaLimitReached: Insufficient Space Available

When the result is (1), the setup allows you to upload more files, and rclone uploads them to account_02. This holds even if I change the policy to mfs (or another policy without ep), clear the Recycle Bin of account_01, and then re-sync using something like mfs.

Things I noticed:

If I use the default or the epmfs policies, rclone is more prone to fail with quotaLimitReached: Insufficient Space Available.

If I use policies like mfs, none of the accounts reaches the 5 GiB limit, so there seems to be no problem.

The --delete-before flag does not change the randomness, so it does not seem to be the problem.
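One way to see why epmfs behaves differently from mfs here: the rclone union documentation describes epmfs ("existing path, most free space", the default create policy) as choosing only among upstreams where the path already exists, while mfs considers every upstream. The Go sketch below is a simplified model of those two descriptions, not rclone's implementation; the `upstream` type and its fields are hypothetical. Once folderA/folderB/folderC exists only on account_01, epmfs keeps picking account_01 even when it is almost full.

```go
package main

import "fmt"

// upstream is a hypothetical model of one union member.
type upstream struct {
	name    string
	free    int64 // free space as seen by the union
	hasPath bool  // whether the parent directory already exists there
}

// mostFree returns the upstream with the most free space among candidates.
func mostFree(cands []upstream) (string, bool) {
	best := -1
	for i, u := range cands {
		if best < 0 || u.free > cands[best].free {
			best = i
		}
	}
	if best < 0 {
		return "", false
	}
	return cands[best].name, true
}

// mfs: most free space, considering every upstream.
func mfs(ups []upstream) (string, bool) { return mostFree(ups) }

// epmfs: existing path, most free space; only upstreams that already hold
// the parent directory are candidates.
func epmfs(ups []upstream) (string, bool) {
	var cands []upstream
	for _, u := range ups {
		if u.hasPath {
			cands = append(cands, u)
		}
	}
	return mostFree(cands)
}

func main() {
	const mib = int64(1) << 20
	ups := []upstream{
		{name: "account_01", free: 10 * mib, hasPath: true}, // nearly full, holds the folders
		{name: "account_02", free: 5 * 1024 * mib, hasPath: false},
	}
	e, _ := epmfs(ups)
	m, _ := mfs(ups)
	fmt.Println(e, m) // account_01 account_02
}
```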

Just guessing at possible causes of this issue:

Maybe OneDrive is not reporting the most up-to-date storage status to rclone, so rclone assumes there is still space... and the randomness starts.

I will keep trying to see if I can better isolate the issue.

ncw

April 7, 2023, 12:42pm


Great investigative work

That is an interesting idea. You can check by running rclone about while the upload is progressing.

Note that the union also caches these values for a while, which could be affecting you, so you could try adjusting this value:

--union-cache-time int Cache time of usage and free space (in seconds) (default 120)

And there is this issue, which might be coming into play.

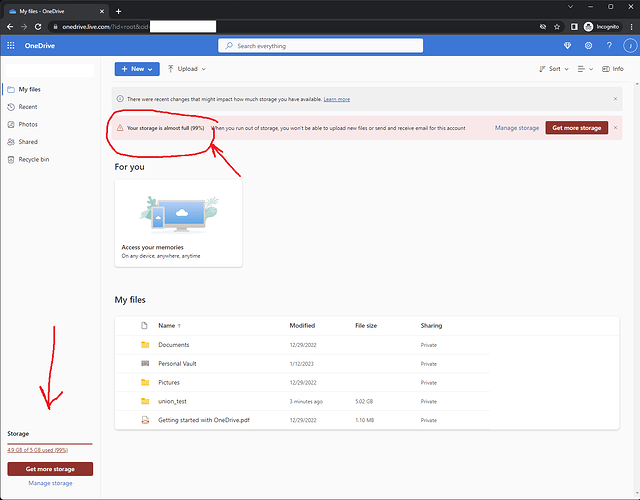

@ncw, this fixed all my problems!

It is amazing that you thought about this issue and left a flag/option to control it.

You can see that it now reaches 99% and then continues to the other remote.

For testing only, I am using --union-cache-time=0:

rclone sync /home/xxx/rclone_union/source/ hotmail_union:rclone_union --progress --log-file=/home/xxx/rclone_union/rclone_union_sync.log --delete-before --union-cache-time=0

For your information, I am uploading 50 MiB files over a 1 Gb/s connection, so the uploads can easily exceed the free space reported in the cache.
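A quick back-of-the-envelope check of that claim, assuming (optimistically) that uploads run at the full 1 Gb/s line rate for the whole default 120 s cache window: 1e9 / 8 × 120 = 15e9 bytes, roughly 13.97 GiB, far more than the 5 GiB of a free account. Real OneDrive throughput is likely lower, but even a fraction of that rate can fill an account before the cached figure is refreshed. The helper name below is hypothetical.

```go
package main

import "fmt"

// bytesWithinWindow returns how many bytes a link of bitsPerSecond could
// move during a window of seconds, assuming it runs at full line rate.
func bytesWithinWindow(bitsPerSecond, seconds float64) float64 {
	return bitsPerSecond / 8 * seconds
}

func main() {
	const gib = 1 << 30
	b := bytesWithinWindow(1e9, 120) // 1 Gb/s, default 120 s cache time
	fmt.Printf("%.0f bytes = %.2f GiB\n", b, b/gib)
	// 15000000000 bytes = 13.97 GiB
	fmt.Printf("%.0f files of 50 MiB\n", b/(50<<20))
}
```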

ncw

April 7, 2023, 10:26pm


Great, glad that fixed it. Rclone should probably make an effort to update the cached free space itself based on what has been uploaded; that would help a bit.
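One possible shape for that suggestion, sketched in Go as a hypothetical model (not a description of how rclone implements or plans to implement it): keep the last free-space value reported by the remote and subtract the bytes uploaded since then, so the estimate drops between refreshes instead of staying frozen for the whole cache time.

```go
package main

import "fmt"

// trackedFree is a hypothetical model of the suggestion: keep the last
// reported free space and subtract what has been uploaded since.
type trackedFree struct {
	reported int64 // free space from the last query of the remote
	uploaded int64 // bytes uploaded since that query
}

// Free estimates the remaining space, never going below zero.
func (t *trackedFree) Free() int64 {
	if f := t.reported - t.uploaded; f > 0 {
		return f
	}
	return 0
}

// Uploaded records a successful upload of n bytes.
func (t *trackedFree) Uploaded(n int64) { t.uploaded += n }

// Refresh replaces the estimate with a fresh value from the remote.
func (t *trackedFree) Refresh(free int64) { t.reported, t.uploaded = free, 0 }

func main() {
	const mib = int64(1) << 20
	t := &trackedFree{reported: 100 * mib}
	t.Uploaded(50 * mib)
	fmt.Println(t.Free() / mib) // 50
	t.Uploaded(50 * mib)
	fmt.Println(t.Free() / mib) // 0: a policy could now skip this upstream
}
```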


system

April 10, 2023, 10:26pm


This topic was automatically closed 3 days after the last reply. New replies are no longer allowed.