What is the problem you are having with rclone?

When rclone updates the modification time of an object in the destination (Google Cloud Storage), it appears to rewrite the object. This is unexpected because a metadata update should be enough. Moreover, rewriting causes some problems:

- It can incur early deletion and data retrieval charges for objects stored in certain storage classes.

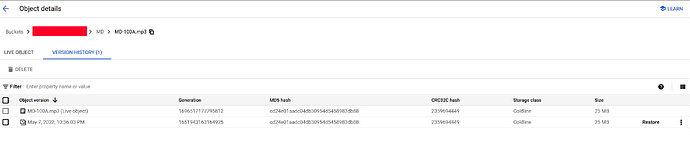

- It creates a noncurrent version of the object if versioning is enabled on the bucket.

Here we see that rclone created another version of the object with the same content (same hashes).
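To illustrate what I mean by "a metadata update", here is a rough sketch of the request I would expect instead of a rewrite: a `PATCH` against the GCS JSON API object resource that touches only the custom metadata. It only builds and prints the request, it does not send anything. The helper name `build_mtime_patch` is made up for this example, and the `mtime` metadata key in RFC 3339 format is my assumption about how rclone stores the modification time on GCS; I have not checked what rclone actually sends. As far as I understand, such a patch changes the object's metageneration rather than its generation.

```python
import json
from urllib.parse import quote


def build_mtime_patch(bucket, object_name, mtime_rfc3339):
    """Describe a metadata-only PATCH request (hypothetical helper, nothing is sent)."""
    # Bucket and object names are percent-encoded as single path segments,
    # so "/" inside the object name becomes %2F.
    url = "https://storage.googleapis.com/storage/v1/b/{}/o/{}".format(
        quote(bucket, safe=""), quote(object_name, safe="")
    )
    # Assumption: the modification time lives under the custom metadata key "mtime".
    body = json.dumps({"metadata": {"mtime": mtime_rfc3339}})
    return {"method": "PATCH", "url": url, "body": body}


# Values taken from the log in this report.
req = build_mtime_patch(
    "bucket1", "MD/MD-100A.mp3", "2022-05-07T22:13:37.5505124+05:30"
)
print(req["method"], req["url"])
print(req["body"])
```

Sending this would additionally need an OAuth bearer token and a `Content-Type: application/json` header, which I left out to keep the sketch short.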

Run the command 'rclone version' and share the full output of the command.

rclone v1.64.0

- os/version: Microsoft Windows 10 Home 21H2 (64 bit)

- os/kernel: 10.0.19044.3086 (x86_64)

- os/type: windows

- os/arch: amd64

- go/version: go1.21.1

- go/linking: static

- go/tags: cmount

Which cloud storage system are you using? (eg Google Drive)

Google Cloud Storage

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone copy . gcs:bucket1/MD

The rclone config contents with secrets removed.

[gcs]

type = google cloud storage

project_number = ***REDACTED***

service_account_file = ~/REDACTED.json

bucket_policy_only = true

location = us-central1

A log from the command with the -vv flag

2023/10/05 20:16:09 DEBUG : rclone: Version "v1.64.0" starting with parameters ["C:\\Users\\REDACTED\\scoop\\apps\\rclone\\current\\rclone.exe" "copy" "." "gcs:bucket1/MD" "-vv"]

2023/10/05 20:16:09 DEBUG : Creating backend with remote "."

2023/10/05 20:16:09 DEBUG : Using config file from "C:\\Users\\REDACTED\\scoop\\apps\\rclone\\current\\rclone.conf"

2023/10/05 20:16:09 DEBUG : fs cache: renaming cache item "." to be canonical "//?/Q:/temp"

2023/10/05 20:16:09 DEBUG : Creating backend with remote "gcs:bucket1/MD"

2023/10/05 20:16:15 DEBUG : GCS bucket bucket1 path MD: Waiting for checks to finish

2023/10/05 20:16:15 DEBUG : MD-100A.mp3: Modification times differ by -550.5124ms: 2022-05-07 22:13:37.5505124 +0530 IST, 2022-05-07 22:13:37 +0530 IST

2023/10/05 20:16:15 DEBUG : MD-100A.mp3: md5 = cd24e01aadc04db30954d5458983db88 OK

2023/10/05 20:16:17 INFO : MD-100A.mp3: Updated modification time in destination

2023/10/05 20:16:17 DEBUG : MD-100A.mp3: Unchanged skipping

2023/10/05 20:16:17 DEBUG : GCS bucket bucket1 path MD: Waiting for transfers to finish

2023/10/05 20:16:17 INFO : There was nothing to transfer

2023/10/05 20:16:17 INFO :

Transferred: 0 B / 0 B, -, 0 B/s, ETA -

Checks: 1 / 1, 100%

Elapsed time: 7.2s

2023/10/05 20:16:17 DEBUG : 4 go routines active