Hi all,

I'm quite new to the world of rclone and mergerfs and would really appreciate any thoughts/feedback on how best to go about my setup.

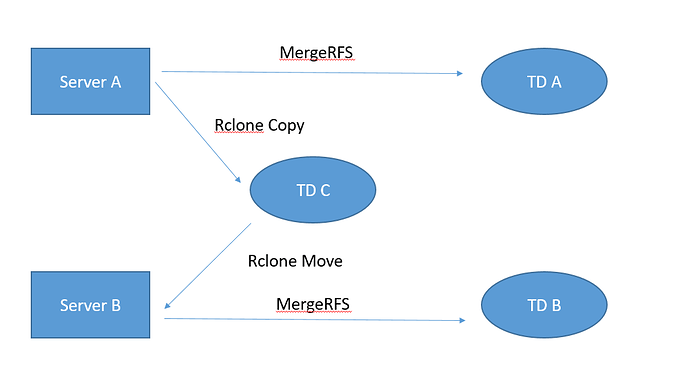

I have two servers. Server A will host the 'arrs, which grab things that will ultimately end up on the teamdrive.

Server B will purely run Plex.

I have multiple TDs (teamdrives) that I can use if required.

My thoughts:

I'd like to set up mergerfs on Server A, so the 'arrs will download and post-process into my /media folder (/media/tv, /media/movies, etc.).

/media will be the mergerfs mount which will point to /local and to TDa:

As I understand it (from having a look at @Animosity022's scripts), provided I have /local as the first mountpoint for mergerfs, it will write to that first instead of TDa:
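To make that concrete, here's a rough sketch of the mount I have in mind. It assumes TDa: is already mounted somewhere with rclone mount (the /mnt/TDa path is just a placeholder I made up), and that mergerfs's `category.create=ff` ("first found") policy is what sends new files to the first branch. I haven't tested this, and the option list is deliberately minimal.

```shell
# Sketch only: /mnt/TDa is a hypothetical rclone mount point for TDa:,
# and the option list is a bare minimum, not a tuned config.

# Build the mergerfs branch list with the local disk listed first.
merge_branches() {
    printf '%s:%s' "$1" "$2"
}

# Mount the pool at /media. category.create=ff ("first found") should make
# new files land on the first branch in the list, i.e. /local.
mount_media() {
    "${MERGERFS:-mergerfs}" -o rw,allow_other,category.create=ff \
        "$(merge_branches /local /mnt/TDa)" /media
}
```

Calling `mount_media` (as root, or with the right FUSE permissions) would then give me /media backed by /local first and TDa second.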

I can then use the scripts to "move" to TDa: after a day or two.

For Server B (Plex), I could just mount TDa: and have Plex read directly from that, but from looking around I understand that could cause issues? Also, when Plex is scanning the files, I'm assuming it's better if they are "local"?

What would your suggestions be for getting the newly downloaded items on Server A (/local) over to Server B before Server A does a move to TDa:?

I'm trying to do it in a way that avoids double counting files.

I was thinking of running a script every 24 hrs that does an rclone copy from /local to TDc (an intermediary TD) and then does an rclone move from /local to TDa:

This way it will still be in /media (just on TDa), and so the 'arrs database will still be intact.
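Roughly, the Server A script I'm picturing looks like the sketch below. TDa: and TDc: are my remote names; the function name is made up, and the `RCLONE` variable is only there so the command can be swapped out for a dry run. The one design choice is that the move only runs if the copy succeeded.

```shell
# Sketch of the daily Server A job; untested, names are placeholders.
RCLONE="${RCLONE:-rclone}"

server_a_daily() {
    # 1. Copy new items to the intermediary drive so Server B can fetch them.
    #    If the copy fails, stop here so nothing is moved away un-copied.
    "$RCLONE" copy "${1:-/local}" TDc: || return 1

    # 2. Move the same items to TDa:. They disappear from /local but stay
    #    visible under /media through the mergerfs pool.
    "$RCLONE" move "${1:-/local}" TDa:
}
```

This would be called once a day from cron as `server_a_daily /local`.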

Then on Server B:

Run a script every 24 hrs (but 12 hrs later than Server A) to rclone move from TDc to Server B's /local, so Plex can catalogue it etc.

Then 12 hrs later (around the same time that Server A is doing an rclone copy to TDc), I do an rclone move from Server B's /local to TDb, which is also set up with mergerfs under /media (which Plex would point to). So again Plex still sees the files under /media and will then stream from there.
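The two Server B jobs would be something like this sketch. Again the function names are made up, TDb: and TDc: are my remotes, and the 12-hour offsets would come from cron rather than from the script itself.

```shell
# Sketch of the two Server B jobs; untested, names are placeholders.
RCLONE="${RCLONE:-rclone}"

# Job 1 (12 hrs after Server A's copy): pull new items off the intermediary
# drive onto Server B's local disk so Plex can scan them locally.
server_b_pull() {
    "$RCLONE" move TDc: /local
}

# Job 2 (12 hrs after job 1): push the scanned items up to TDb:. Plex keeps
# seeing them under /media through the mergerfs pool.
server_b_push() {
    "$RCLONE" move /local TDb:
}
```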

My only concern is:

If more stuff gets downloaded whilst Server A is doing the copy to TDc, it would get missed by the copy but would still get moved to TDa. So whilst it would appear in the 'arrs database, it would never go to TDc and so would never end up on Plex.

My solution around that is doing a weekly sync from TDa to TDb. That way, if anything did get missed, it would be picked up by the weekly sync to TDb, and although Plex would have to "download" it to scan it, it would only be the odd one or two files.

I know I've completely over-engineered this.. but I'm trying to look at a solution that covers all angles and eventualities.

Is there an easier way that I'm missing?

Is it possible to maybe do an rclone copy from server A to TDc "if file is <2 days old" and then only do the rclone move from Server A to TDa if files >2 days or something like that?
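Something like the sketch below is what I mean. It assumes rclone's `--max-age` and `--min-age` filters (which, as far as I can tell, select on modification time) do what I expect; the function names and the `AGE` variable are just placeholders of mine.

```shell
# Sketch of the age-based split; untested, names are placeholders.
RCLONE="${RCLONE:-rclone}"
AGE="${AGE:-2d}"

# Copy only files younger than $AGE to the intermediary drive.
copy_recent_to_staging() {
    "$RCLONE" copy /local TDc: --max-age "$AGE"
}

# Move only files older than $AGE to TDa:, so anything that arrived
# recently stays on /local until a later copy has had a chance to see it.
move_old_to_archive() {
    "$RCLONE" move /local TDa: --min-age "$AGE"
}
```

One thing I haven't thought through: a file that is still under 2 days old on the next run might be copied to TDc a second time after Server B has already moved it out.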

All help/thoughts appreciated!