Hi guys,

Do you use the new parameter vfs-read-chunk-streams?

I use GDrive and have set vfs-read-chunk-streams=4 and vfs-read-chunk-size=32M.

I'd like to know what the best values are when using GDrive for Plex streaming.

Thank you.

It's a brand new feature, so you'd have to test some things out and see what works best for you and your setup.

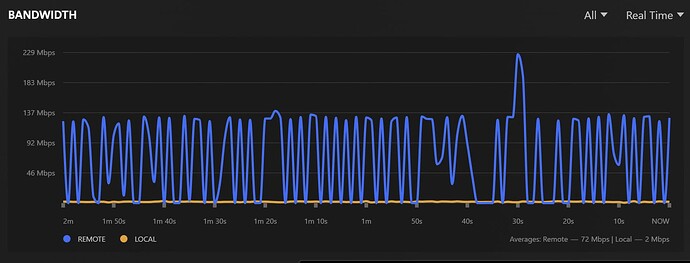

Traffic statistics of the VPS while watching a 4K REMUX from a remote VPS:

enp0s3 / traffic statistics

| | rx | tx |
|---|---|---|
| bytes | 6.42 GiB | 5.43 GiB |
| max | 540.69 Mbit/s | 416.01 Mbit/s |
| average | 88.95 Mbit/s | 75.26 Mbit/s |
| min | 4.58 kbit/s | 73.19 kbit/s |
| packets | 1248850 | 834290 |
| max | 16349 p/s | 6775 p/s |
| average | 2014 p/s | 1345 p/s |
| min | 7 p/s | 9 p/s |

time: 10.33 minutes

stats of MPV

So, it's a funny one. I never had problems with no cache. I never had problems with the cache. I can't imagine this causing problems either.

My only thought is that this fits the use case where you are streaming something large that has some high-bitrate parts and you are limited by network capacity or maybe by latency.

That's so specific to each setup that you probably won't find a perfect value for everyone.

You'd have to test some values, see what happens with 1 user, a few users, etc., and find your sweet spot.

Like, if you crank up the values and one user floods your pipe, that's probably bad, as reading ahead that much is not needed.

My advice is to always start with the defaults, don't change anything, and then make changes based on the data.

Thanks for your advice.

And, may I ask, where is your GitHub repo now?

https://github.com/animosity22/homescripts can no longer be opened.

Doesn't exist.

I use home storage and no cloud based providers.

I feel like each new addition like this only makes it harder to understand how everything works together.

Like right now I use this:

--vfs-read-chunk-size=0

--buffer-size=0

--vfs-read-ahead=256M

How would this work together with the new settings?

I feel your pain here!

The new --vfs-read-chunk-streams parameter enables parallel downloading of files.

The rationale is in issue #4760. There is some good discussion on that issue (e.g. this comment) about how to use the feature and tweak it.

It certainly helps in some scenarios but won't help everyone everywhere. If you have high latency to your remote, or your remote limits you on bandwidth on a per stream basis then it will help.
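To make the per-stream case concrete, here is a rough back-of-the-envelope sketch. It assumes an idealized model where each stream is capped at a fixed rate and the only other limit is the local link; the function name `ideal_throughput` and all the numbers are hypothetical, and real backends will not scale this cleanly.

```shell
#!/bin/sh
# Idealized upper bound: N streams at a fixed per-stream cap, limited by the link.
# Arguments (all in Mbit/s except streams): per-stream cap, stream count, link capacity.
ideal_throughput() {
  total=$(( $1 * $2 ))
  if [ "$total" -gt "$3" ]; then
    total=$3
  fi
  echo "$total"
}

# Hypothetical numbers: 50 Mbit/s per stream on a 300 Mbit/s link.
for streams in 1 4 16; do
  echo "streams=$streams -> up to $(ideal_throughput 50 "$streams" 300) Mbit/s"
done
```

Under these assumptions, adding streams stops helping once the link itself is the bottleneck, which is one reason the best value differs per setup.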

At some point I'd like to write some guidance on the best parameters to use but I need help from the community testing it out.

But when using vfs-read-ahead, are the downloaded chunks stored in the VFS cache?

I can test the settings for you. I have gb/s of write throughput, a 10 Gbps uplink and many backends. If you tell me what to do and what settings to use, I can try them and report the results.

If you are using the VFS cache, then yes, otherwise no.

You could try some experiments. What you should notice is that it is quicker to download whole files into the cache.

If you give me the mount commands and the benchmarks to use, I can do the experiments.

My use case is that the backend is just a backup, all data is basically read-only, and there is enough local disk space for everything. So when something is requested, we want to download it to disk as fast as possible so that all subsequent reads come only from disk.

You could try this for starters

--vfs-read-chunk-streams 16

--vfs-read-chunk-size 4M

That should work well for a high performance backend (like S3).
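For the "download to disk, then read from disk" use case, the two flags above could be combined with the VFS cache in a mount command along these lines. This is only a sketch: the remote name `remote:path` and the mount point are placeholders, and `--vfs-cache-mode full` is an assumption based on the use case described earlier, not something the suggestion above spelled out. The script only prints the command so it can be reviewed before running.

```shell
#!/bin/sh
# Placeholder remote and mount point; replace with your own.
remote="remote:path"
mountpoint="/mnt/yourmount"

# Starting values suggested above for a high performance backend.
streams=16
chunk_size=4M

# --vfs-cache-mode full is an assumption here: it keeps downloaded data
# in the on-disk VFS cache so later reads can be served locally.
mount_cmd="rclone mount $remote $mountpoint --vfs-cache-mode full --vfs-read-chunk-streams $streams --vfs-read-chunk-size $chunk_size"

# Print the command for review rather than running it directly.
echo "$mount_cmd"
```

Changing `streams` and `chunk_size` one at a time and remounting makes it easier to see which setting is responsible for any difference.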

What you should see is improved performance copying the file to disk.

An easy way to test the download speed is:

rclone -P cat /mnt/yourmount/file --discard
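If you want a single number to compare between runs, a small helper like the one below could be used alongside the command above. It is a sketch, not part of rclone: the function name `bench_read` is made up, it uses plain `cat` instead of `rclone cat`, and it assumes GNU `date` (for `%N`) and `awk` are available.

```shell
#!/bin/sh
# Read one file start to finish, discard the data, and print the average
# throughput in MiB/s. Assumes GNU date (for %N nanoseconds) and awk.
bench_read() {
  bench_file=$1
  bench_bytes=$(wc -c < "$bench_file")
  bench_start=$(date +%s%N)
  cat "$bench_file" > /dev/null
  bench_end=$(date +%s%N)
  bench_ns=$(( bench_end - bench_start ))
  # Guard against a zero interval on very small files.
  [ "$bench_ns" -gt 0 ] || bench_ns=1
  awk -v b="$bench_bytes" -v ns="$bench_ns" \
    'BEGIN { printf "%.1f MiB/s\n", (b / 1048576) / (ns / 1e9) }'
}

# Example (hypothetical path): bench_read /mnt/yourmount/file
```

Keep in mind that a second read of the same file may be served from the VFS cache or the OS page cache, so use a file that has not been read yet (or clear the caches) for each run.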
