What is the problem you are having with rclone?

Since the 1.59 betas I've been trying to sync web assets from a local folder to a remote Cloudflare R2 bucket, but I can't get rclone to transfer only the changed files on subsequent runs.

I already mentioned this in a GitHub thread, but I'm posting here to avoid spamming over there.

I've revisited it after the stable release and I'm getting the same result: running the same sync command transfers 100% of the files every time.

Previously I tried different combinations of --fast-list, --checksum and --s3-no-head without success. Now, with the stable release, --s3-no-head makes rclone panic:

2022/07/30 16:07:36 DEBUG : rclone: Version "v1.59.0" starting with parameters ["rclone" "sync" "-vv" "--s3-no-head" "_build/admin" "r2:admin"]

2022/07/30 16:07:36 DEBUG : Creating backend with remote "_build/admin"

2022/07/30 16:07:36 DEBUG : Using config file from "/home/sam/.config/rclone/rclone.conf"

2022/07/30 16:07:36 DEBUG : fs cache: renaming cache item "_build/admin" to be canonical "/home/sam/Code/masterworks/frontend/_build/admin"

2022/07/30 16:07:36 DEBUG : Creating backend with remote "r2:admin"

2022/07/30 16:07:36 DEBUG : r2: detected overridden config - adding "{NBTUO}" suffix to name

2022/07/30 16:07:36 DEBUG : fs cache: renaming cache item "r2:admin" to be canonical "r2{NBTUO}:admin"

2022/07/30 16:07:37 DEBUG : S3 bucket admin: Waiting for checks to finish

2022/07/30 16:07:37 DEBUG : S3 bucket admin: Waiting for transfers to finish

panic: reflect: Elem of invalid type s3.PutObjectInput

goroutine 56 [running]:

reflect.(*rtype).Elem(0x0?)

reflect/type.go:965 +0x134

github.com/rclone/rclone/lib/structs.SetFrom({0x1c7ab20?, 0xc00052dc20}, {0x1c9f500?, 0xc000a46000})

github.com/rclone/rclone/lib/structs/structs.go:22 +0xdf

github.com/rclone/rclone/backend/s3.(*Object).Update(0xc00037c090, {0x2142048, 0xc000634f00}, {0x2130ae0, 0xc000644000}, {0x7fde1a64ad38?, 0xc000300540?}, {0xc000c36060, 0x1, 0x1})

github.com/rclone/rclone/backend/s3/s3.go:4573 +0x1345

github.com/rclone/rclone/backend/s3.(*Fs).Put(0xc00090fd40, {0x2142048, 0xc000634f00}, {0x2130ae0, 0xc000644000}, {0x7fde1a64ad38?, 0xc000300540?}, {0xc000c36060, 0x1, 0x1})

github.com/rclone/rclone/backend/s3/s3.go:3085 +0x125

github.com/rclone/rclone/fs/operations.Copy({0x2142048, 0xc000634f00}, {0x2152930, 0xc00090fd40}, {0x0?, 0x0?}, {0xc00011a290, 0xa}, {0x2152000, 0xc000300540})

github.com/rclone/rclone/fs/operations/operations.go:501 +0x1b24

github.com/rclone/rclone/fs/sync.(*syncCopyMove).pairCopyOrMove(0xc000849d40, {0x2142048, 0xc000634f00}, 0x0?, {0x2152930, 0xc00090fd40}, 0x0?, 0x0?)

github.com/rclone/rclone/fs/sync/sync.go:416 +0x1bb

created by github.com/rclone/rclone/fs/sync.(*syncCopyMove).startTransfers

github.com/rclone/rclone/fs/sync/sync.go:443 +0x45

This panic didn't happen on the beta releases I tried, but I believe it is unrelated to the sync problem.

I've tried again with a brand-new R2 bucket, after deleting my existing rclone config (~/.config/rclone/rclone.conf) and recreating it with the interactive rclone config command (the resulting config file is shown later in this post). No luck: it still transfers 100% of the files every time.

I've checked the MD5 hashes in the -vv output and they are identical on every run.
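In case it helps anyone reproduce the hash check outside the sync log, here is a rough sketch of how the local and remote hashes could be compared. It assumes GNU find, md5sum and sort, and that the remote reports MD5 hashes. `local_manifest` is a helper name made up for this post, not part of rclone. The `rclone md5sum` line is left as a comment because it needs the configured remote.

```shell
#!/usr/bin/env bash
# Sketch only: local_manifest is a hypothetical helper, not part of rclone.
# Prints "<md5>  <relative path>" for every file under the directory $1,
# sorted by path, i.e. the same two-column layout md5sum uses.
local_manifest() {
  (
    cd "$1" || exit 1
    find . -type f -exec md5sum {} + | sed 's|  \./|  |' | sort -k2
  )
}

# Usage (not run here, needs the configured r2 remote):
#   diff <(local_manifest _build/admin) <(rclone md5sum r2:admin | sort -k2)
# An empty diff would mean the local and remote hashes agree.
```

If the diff comes back empty and rclone still re-uploads everything, the hashes themselves are probably not what triggers the transfers.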

I've tried with and without trailing slashes in every combination for SOURCE and DESTINATION, always with the same result.

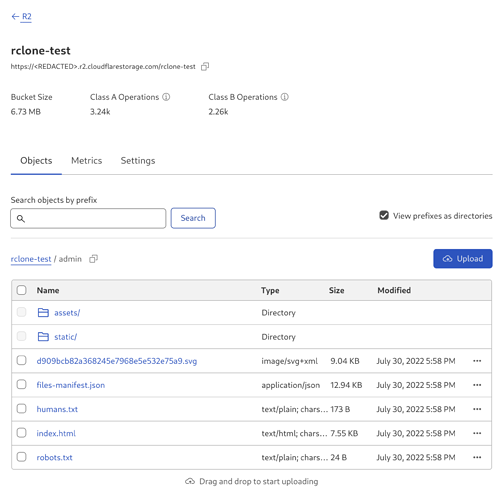

I've triple-checked that the files were actually uploaded to the R2 bucket, and they were.

Any ideas on what I am doing wrong?

Run the command 'rclone version' and share the full output of the command.

rclone v1.59.0

- os/version: fedora 36 (64 bit)

- os/kernel: 5.18.13-200.fc36.x86_64 (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.18.3

- go/linking: static

- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Cloudflare R2

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone sync -vv _build/admin r2:admin

The rclone config contents with secrets removed.

[r2]

type = s3

provider = Cloudflare

access_key_id = <REDACTED>

secret_access_key = <REDACTED>

region = auto

endpoint = https://<REDACTED>.r2.cloudflarestorage.com/rclone-test