What is the problem you are having with rclone?

Using sync command to sync big files from SMB to Backblaze makes rclone use insanely high amount of memory, leading to OS (Windows Server 2012) crash.

Run the command 'rclone version' and share the full output of the command.

rclone v1.62.2

- os/version: Microsoft Windows Server 2012 Standard (64 bit)

- os/kernel: 6.2.9200.24266 Build 9200.24266.24266 (x86_64)

- os/type: windows

- os/arch: amd64

- go/version: go1.20.2

- go/linking: static

- go/tags: cmount

Which cloud storage system are you using? (eg Google Drive)

- SMB hosted on a QNAP NAS (TS-431P2 running QTS 5.0.1)

- Backblaze B2

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone.exe sync --stats-log-level NOTICE --stats 1h --transfers 300 --exclude *.temp serveurbackup:bkp Backblaze:azimut-backups

The rclone config contents with secrets removed.

[Backblaze]

type = b2

account = REDACTED

key = REDACTED

hard_delete = true

endpoint = https://api.backblazeb2.com

[serveurbackup]

type = smb

host = REDACTED

user = REDACTED

pass = REDACTED

A log from the command with the -vv flag

I don't have logs with -vv flags because the process runs for days and I'm afraid of log size, am I wrong?

Log file

2023/05/23 02:24:09 ERROR : Veeam: Entry doesn't belong in directory "Veeam" (too short) - ignoring

2023/05/23 02:24:12 ERROR : Veeam/Archive: Entry doesn't belong in directory "Veeam/Archive" (too short) - ignoring

2023/05/23 02:24:12 ERROR : Veeam/Backup: Entry doesn't belong in directory "Veeam/Backup" (too short) - ignoring

2023/05/23 03:24:05 NOTICE:

Transferred: 121.765 GiB / 3.036 TiB, 4%, 29.462 MiB/s, ETA 1d4h50m

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 3% /3.010Ti, 29.179Mi/s, 29h7m11s

2023/05/23 04:24:05 NOTICE:

Transferred: 222.752 GiB / 3.036 TiB, 7%, 29.546 MiB/s, ETA 1d3h47m

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 2h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 6% /3.010Ti, 29.394Mi/s, 27h55m46s

2023/05/23 05:24:05 NOTICE:

Transferred: 323.653 GiB / 3.036 TiB, 10%, 30.542 MiB/s, ETA 1d1h56m

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 3h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 9% /3.010Ti, 30.112Mi/s, 26h18m38s

2023/05/23 06:24:05 NOTICE:

Transferred: 425.024 GiB / 3.036 TiB, 14%, 29.023 MiB/s, ETA 1d2h18m

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 4h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 12% /3.010Ti, 29.640Mi/s, 25h45m23s

2023/05/23 07:24:05 NOTICE:

Transferred: 525.970 GiB / 3.036 TiB, 17%, 32.166 MiB/s, ETA 22h50m28s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 5h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 16% /3.010Ti, 32.058Mi/s, 22h55m5s

2023/05/23 08:24:05 NOTICE:

Transferred: 626.446 GiB / 3.036 TiB, 20%, 32.396 MiB/s, ETA 21h47m47s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 6h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 19% /3.010Ti, 32.461Mi/s, 21h45m10s

2023/05/23 09:24:05 NOTICE:

Transferred: 724.036 GiB / 3.036 TiB, 23%, 29.262 MiB/s, ETA 23h10m56s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 7h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 22% /3.010Ti, 28.945Mi/s, 23h26m10s

2023/05/23 10:24:05 NOTICE:

Transferred: 820.766 GiB / 3.036 TiB, 26%, 28.520 MiB/s, ETA 22h49m16s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 8h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 25% /3.010Ti, 28.020Mi/s, 23h13m40s

2023/05/23 11:24:05 NOTICE:

Transferred: 919.348 GiB / 3.036 TiB, 30%, 28.986 MiB/s, ETA 21h29m12s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 9h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 28% /3.010Ti, 28.847Mi/s, 21h35m23s

2023/05/23 12:24:05 NOTICE:

Transferred: 1017.196 GiB / 3.036 TiB, 33%, 25.440 MiB/s, ETA 23h23m14s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 10h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 32% /3.010Ti, 25.025Mi/s, 23h46m33s

2023/05/23 13:24:05 NOTICE:

Transferred: 1.091 TiB / 3.036 TiB, 36%, 30.411 MiB/s, ETA 18h37m46s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 11h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 35% /3.010Ti, 30.654Mi/s, 18h28m54s

2023/05/23 14:24:05 NOTICE:

Transferred: 1.188 TiB / 3.036 TiB, 39%, 28.462 MiB/s, ETA 18h54m43s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 12h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 38% /3.010Ti, 28.336Mi/s, 18h59m46s

2023/05/23 15:24:05 NOTICE:

Transferred: 1.283 TiB / 3.036 TiB, 42%, 25.457 MiB/s, ETA 20h3m42s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 13h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 41% /3.010Ti, 25.866Mi/s, 19h44m40s

2023/05/23 16:24:05 NOTICE:

Transferred: 1.376 TiB / 3.036 TiB, 45%, 31.428 MiB/s, ETA 15h23m3s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 14h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 44% /3.010Ti, 31.539Mi/s, 15h19m49s

2023/05/23 17:24:05 NOTICE:

Transferred: 1.473 TiB / 3.036 TiB, 49%, 30.995 MiB/s, ETA 14h41m12s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 15h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 48% /3.010Ti, 31.159Mi/s, 14h36m33s

2023/05/23 18:24:05 NOTICE:

Transferred: 1.570 TiB / 3.036 TiB, 52%, 26.721 MiB/s, ETA 15h58m55s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 16h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 51% /3.010Ti, 26.948Mi/s, 15h50m51s

2023/05/23 19:24:05 NOTICE:

Transferred: 1.669 TiB / 3.036 TiB, 55%, 26.972 MiB/s, ETA 14h45m52s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 17h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 54% /3.010Ti, 26.929Mi/s, 14h47m17s

2023/05/23 20:24:05 NOTICE:

Transferred: 1.768 TiB / 3.036 TiB, 58%, 27.817 MiB/s, ETA 13h16m34s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 18h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 57% /3.010Ti, 28.391Mi/s, 13h0m28s

2023/05/23 21:24:05 NOTICE:

Transferred: 1.867 TiB / 3.036 TiB, 62%, 27.075 MiB/s, ETA 12h34m21s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 19h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 61% /3.010Ti, 26.520Mi/s, 12h50m8s

2023/05/23 22:24:05 NOTICE:

Transferred: 1.966 TiB / 3.036 TiB, 65%, 31.176 MiB/s, ETA 9h59m38s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 20h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 64% /3.010Ti, 31.081Mi/s, 10h1m28s

2023/05/23 23:24:05 NOTICE:

Transferred: 2.067 TiB / 3.036 TiB, 68%, 29.222 MiB/s, ETA 9h39m38s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 21h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 67% /3.010Ti, 29.053Mi/s, 9h43m1s

2023/05/24 00:24:05 NOTICE:

Transferred: 2.190 TiB / 3.036 TiB, 72%, 26.846 MiB/s, ETA 9h10m32s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 22h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 71% /3.010Ti, 27.054Mi/s, 9h6m17s

2023/05/24 01:24:05 NOTICE:

Transferred: 2.302 TiB / 3.036 TiB, 76%, 31.209 MiB/s, ETA 6h51m2s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 23h0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 75% /3.010Ti, 31.145Mi/s, 6h51m53s

2023/05/24 02:24:05 NOTICE:

Transferred: 2.420 TiB / 3.036 TiB, 80%, 61.547 MiB/s, ETA 2h54m55s

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d0.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 79% /3.010Ti, 61.607Mi/s, 2h54m45s

2023/05/24 03:24:45 NOTICE:

Transferred: 2.485 TiB / 3.036 TiB, 82%, 0 B/s, ETA -

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d1h11.9s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 81% /3.010Ti, 0/s, 2562047h47m16s

2023/05/24 04:24:55 NOTICE:

Transferred: 2.485 TiB / 3.036 TiB, 82%, 0 B/s, ETA -

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d2h30.3s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 81% /3.010Ti, 0/s, 2562047h47m16s

2023/05/24 05:24:22 NOTICE:

Transferred: 2.485 TiB / 3.036 TiB, 82%, 0 B/s, ETA -

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d3h9.4s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 81% /3.010Ti, 0/s, 2562047h47m16s

2023/05/24 06:24:19 NOTICE:

Transferred: 2.485 TiB / 3.036 TiB, 82%, 0 B/s, ETA -

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d4h8.2s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 81% /3.010Ti, 0/s, 2562047h47m16s

2023/05/24 07:24:25 NOTICE:

Transferred: 2.485 TiB / 3.036 TiB, 82%, 0 B/s, ETA -

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d5h9.0s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 81% /3.010Ti, 0/s, 2562047h47m16s

2023/05/24 08:24:32 NOTICE:

Transferred: 2.485 TiB / 3.036 TiB, 82%, 0 B/s, ETA -

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d6h15.8s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 81% /3.010Ti, 0/s, 2562047h47m16s

2023/05/24 09:24:28 NOTICE:

Transferred: 2.485 TiB / 3.036 TiB, 82%, 0 B/s, ETA -

Checks: 28371 / 28371, 100%

Transferred: 58 / 59, 98%

Elapsed time: 1d7h12.2s

Transferring:

* Veeam/VM-WS2016B/VM-WS…-05-09T020730_67F4.vbk: 81% /3.010Ti, 0/s, 2562047h47m16s

More details

I'm running rclone on a Windows Server 2012 host to sync our backups from their storage (via SMB) to Backblaze B2.

One of the backup file is 3+TB of size, so uploading it takes some time. The host machine has 64GB of mostly unused memory (hence the --transfers 300 switch, which works fine).

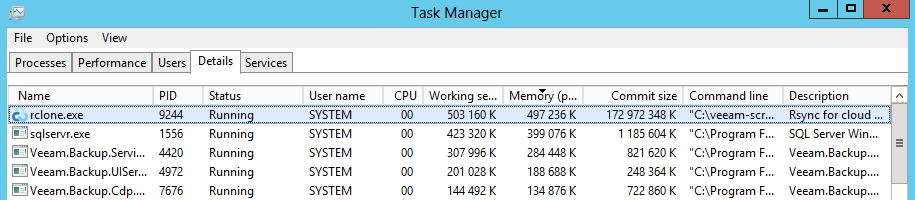

However, from time to time, rclone will start to lose its mind and eat memory as if it was unlimited. From what I see in the logs, something goes wrong with the transfer, the ETA grows to 300 years, then memory goes up, CPU stays stable and network activity stops.

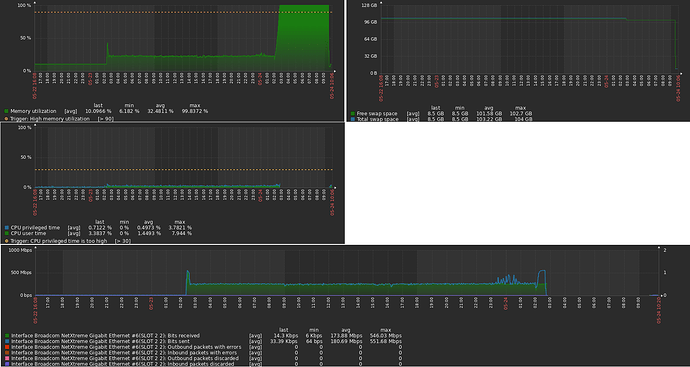

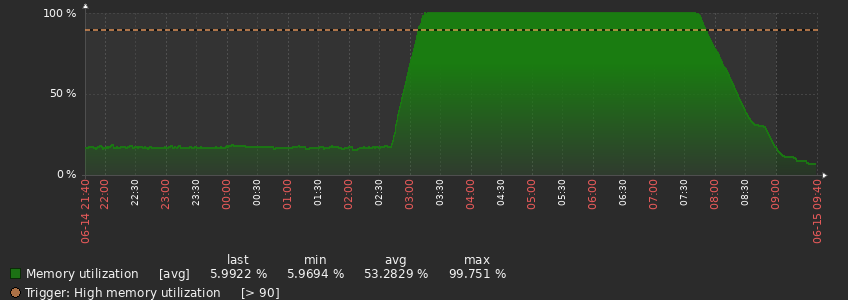

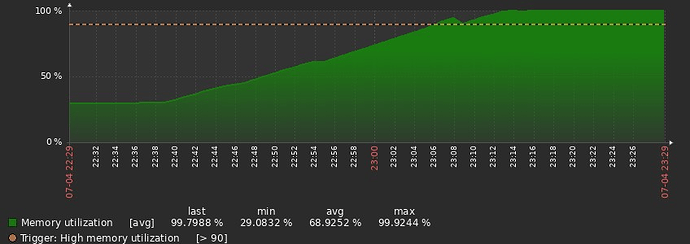

Memory usage: rclone starts at about 2am on 05/23, uses a few GB of RAM, then starts eating memory the day after, at about 2am on 05/24. Server rebooted on 05/24 at 9:30am.

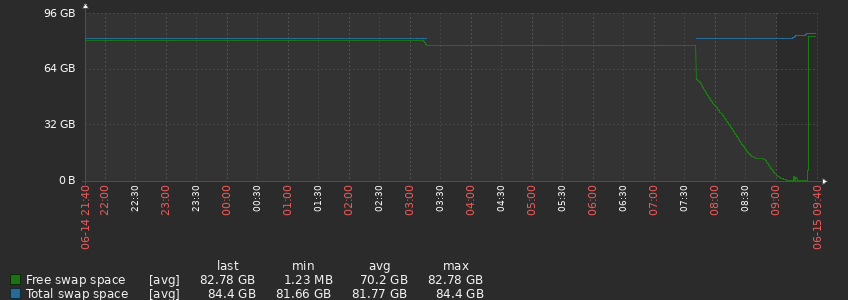

SWAP usage: not certain why it is so high all the time (from previous runs of rclone?)

CPU usage: rather stable all the time

Network usage: Goes up when rclone starts, stable most of the time, does something weird at 2am, goes down at 3am

(One big image because new users can only upload one image...)

When this happens, the system goes unresponsive and I have to kill rclone.exe (when I'm lucky enough that RDP still works), or cold reboot the server.

It doesn't look like that the communication with the NAS gets interrupted at any time, the NAS' uptime right now is 23 days.

I would at least expect rclone to detect when the transfers start to fail and either retry or stop.