What is the problem you are having with rclone?

I have a tiny directory, 110 MB in total, 17k files. I run this command every 5 minutes.

rclone copy --transfers=8 --fast-list /src remote:dest

My conf is the following:

[remote]
type = s3
provider = Cloudflare
access_key_id = x
secret_access_key = x
endpoint = https://x.r2.cloudflarestorage.com
no_check_bucket = true

This gave me a relatively big surprise bill, about $40, showing that I am currently making about 150 million Class B requests per month. The number adds up: one request per file for 17k files, every 5 minutes, is roughly 150 million requests per month.
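To double-check the figure, here is a minimal sketch of the arithmetic. It assumes exactly one Class B request per file per run and a 30-day month; both are my assumptions, not something I have confirmed from rclone's behaviour.

```python
# Back-of-the-envelope check of the monthly request volume.
# Assumptions: one Class B request per file per run, 30-day month.
FILES = 17_000
RUN_INTERVAL_MINUTES = 5
DAYS_PER_MONTH = 30  # assumption

# 12 runs per hour * 24 hours * 30 days
runs_per_month = (60 // RUN_INTERVAL_MINUTES) * 24 * DAYS_PER_MONTH
requests_per_month = FILES * runs_per_month

print(f"runs per month:     {runs_per_month:,}")
print(f"requests per month: {requests_per_month:,}")
```

That comes to about 147 million requests, which is close enough to the 150 million on the bill.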

But I'm wondering, why does rclone need to make a list request for every single file?

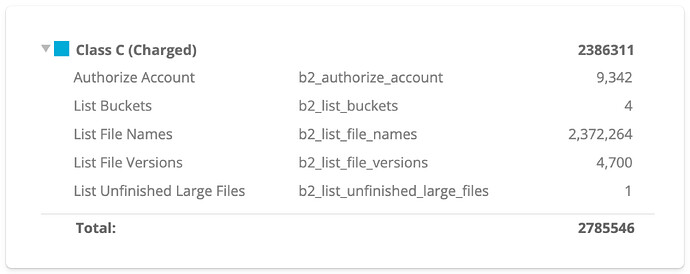

For comparison, I have a much bigger backup going to Backblaze B2: 7 million (!) files, 500 GB, running once per hour. It has been running for years, yet I average only 3.8 million requests per month, which is less than what a single run would cause if this backup were on Cloudflare R2 and needed one request per file!
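To put the two setups side by side, here is a rough calculation of how many files each request covers on average. It assumes a 30-day month, that all 3.8 million B2 requests come from this one hourly job, and that the R2 job makes one request per file per run; these are assumptions on my part.

```python
# Rough comparison of files covered per request on B2 vs. R2.
# Assumptions: 30-day month, all B2 requests come from the hourly job,
# and the R2 job makes one request per file per run.
B2_FILES = 7_000_000
B2_REQUESTS_PER_MONTH = 3_800_000
B2_RUNS_PER_MONTH = 24 * 30  # hourly runs

R2_FILES = 17_000
R2_REQUESTS_PER_RUN = 17_000  # one request per file (assumption)

b2_requests_per_run = B2_REQUESTS_PER_MONTH / B2_RUNS_PER_MONTH
b2_files_per_request = B2_FILES / b2_requests_per_run
r2_files_per_request = R2_FILES / R2_REQUESTS_PER_RUN

print(f"B2 requests per run:  {b2_requests_per_run:,.0f}")
print(f"B2 files per request: {b2_files_per_request:,.0f}")
print(f"R2 files per request: {r2_files_per_request:,.0f}")
```

So on B2 a single request accounts for more than a thousand files on average, while on R2 it looks like one request per file.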

This is the Backblaze B2 command:

rclone sync \
--config=/dev/null \
--b2-account x \
--b2-key x \
--crypt-remote :b2:x \
--crypt-filename-encryption=off \
--crypt-directory-name-encryption=false \
--crypt-password x \
--transfers=8 \
--multi-thread-streams=8 \
--fast-list \
--links \
--local-no-check-updated \
/src \
:crypt:

Can you shed some light on what is happening here? Is this normal with the Cloudflare integration? Does it basically mean that Cloudflare R2 cannot be used for a regular sync cron job?

Run the command 'rclone version' and share the full output of the command.

rclone v1.65.2
- os/version: ubuntu 22.04 (64 bit)
- os/kernel: 5.15.0-133-generic (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.21.6
- go/linking: static
- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Cloudflare R2 (the remote with the problem); Backblaze B2 for comparison

A log from the command that you were trying to run with the -vv flag

I'm happy to provide a log if you tell me exactly what parameters might be interesting here.