This is a question for Google. I have no clue, and I am not a gdrive user. From what I hear, it is not very well documented, which is not a big surprise: it is a cheap consumer-level service without any strong SLAs in place. They have a huge number of customers doing random things, so they almost certainly have a mechanism in place to protect service availability rather than relying on hard-set limits. If it is too busy, it slows down whatever it can.

Google's API limits are shown in the quota section of your Google Cloud Console. You aren't really hitting any known/documented API limits there, as you generally have to hit 1 billion API calls per day.

As I understand it, when I hit those limits I get a 423, not a 429, am I right? So unless there's a 423, the API limit is not the problem.

This seems IP/IP Range related.

Unfortunately, Google reuses 429s, so they can mean 4-5 different things.

Your particular 429 seems to be Google geo-IP blocking you or something similar, as I have never experienced it in my own Google Drive use.

Are you running from some dedicated server/IP range? I only ever ran from my house.

Yes, I am running from a VPS with a static IP. But it seems to have a good reputation and was never a problem until now.

The infinite loop it got itself into seems to have degraded that reputation.

Is there no way to pause an upload on a 429 instead of abandoning it altogether? Retrying the upload after just a minute still works fine. Is there no adaptive backoff strategy for being rate limited?
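As a rough illustration of what such an adaptive backoff could look like, here is a minimal, generic Python sketch that pauses on a 429 and retries only the failing chunk instead of restarting the whole upload. `upload_chunk` and `RateLimited` are hypothetical placeholders, not rclone or Google API names, and this is not how rclone itself is implemented. The delay cap doubles with each consecutive 429, and the actual wait is randomized ("full jitter").

```python
import random
import time
from typing import Callable


class RateLimited(Exception):
    """Hypothetical error an upload step raises when the server answers HTTP 429."""


def upload_with_backoff(
    upload_chunk: Callable[[int], None],
    num_chunks: int,
    base_delay: float = 1.0,
    max_delay: float = 300.0,
    max_attempts_per_chunk: int = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Upload chunks in order; on a 429, pause and retry only the failing chunk.

    Returns how many 429s were absorbed along the way.
    """
    absorbed = 0
    for index in range(num_chunks):
        failures = 0
        while True:
            try:
                upload_chunk(index)
                break  # chunk accepted; earlier chunks are never re-sent
            except RateLimited:
                failures += 1
                absorbed += 1
                if failures >= max_attempts_per_chunk:
                    raise  # give up on this chunk after too many 429s
                # Exponential backoff with "full jitter": the cap doubles per failure.
                cap = min(max_delay, base_delay * 2 ** (failures - 1))
                sleep(random.uniform(0, cap))
    return absorbed
```

Passing `sleep` in as a parameter makes the behaviour easy to test without actually waiting.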

Often, that can be any number of things.

A bit off-topic, but all of a sudden I am getting 429s when trying to log in to the rclone forum.

It has been working for 4+ years without issue, but now, only with the rclone forum, I get a 429.

https://forum.rclone.org/t/cannot-connect-to-forum-over-mullvad-proxy-server/44796

I think the only thing they have in common is the 429; they just happen to be the same error.

Yeah, you are right, but I was just pointing out how a 429 can suddenly happen and then just go away.

FWIW, the OP is also having the other 429 issue, the same as me.

https://forum.rclone.org/t/cannot-connect-to-forum-over-mullvad-proxy-server/44796/2

Who is the provider?

Are you doing anything else with that VPS, other than uploading to gdrive?

I would change the static IP address of the VPS.

That might resolve the problem.

@ncw Do you have any suggestions?

Is there any way to just pause the transfer on a 429 and resume after a cooldown, instead of restarting the upload?

You could tail the rclone debug log.

When you get a 429, set --bwlimit to a very low value, wait for a while, then raise the limit.
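A minimal Python sketch of that manual workaround, assuming the running rclone was started with `--rc` so that `rclone rc core/bwlimit` can change its bandwidth limit on the fly. The 429 detection string (taken from the log in this thread), the `1M` "very low" rate, the 600-second cooldown and the log path are assumptions to tune, and whether throttling bandwidth actually breaks the 429 loop is untested.

```python
import subprocess
import sys
import time
from typing import Callable, List

LOW_RATE = "1M"          # assumed "very low" limit; tune as needed
COOLDOWN_SECONDS = 600   # assumed cooldown before the limit is lifted


def bwlimit_command(rate: str) -> List[str]:
    """rc call that changes the bandwidth limit of a running rclone ("off" removes it)."""
    return ["rclone", "rc", "core/bwlimit", f"rate={rate}"]


def handle_line(
    line: str,
    run: Callable[[List[str]], object],
    sleep: Callable[[float], None],
) -> bool:
    """If the log line reports a 429, throttle, wait out the cooldown, then unthrottle."""
    if "HTTP response code 429" not in line:
        return False
    run(bwlimit_command(LOW_RATE))
    sleep(COOLDOWN_SECONDS)
    run(bwlimit_command("off"))
    return True


def main() -> None:
    """Feed it the live log, e.g. tail -F /path/to/rclone.log piped into this script."""
    for log_line in sys.stdin:
        handle_line(log_line, lambda cmd: subprocess.run(cmd, check=True), time.sleep)
```

Lines that arrive during the cooldown are processed afterwards, so 429s logged before the throttle took effect may trigger extra cooldown cycles.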

Still getting this error.

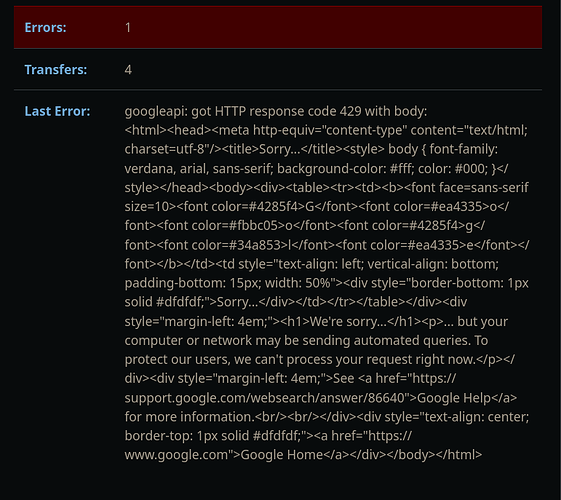

vfs cache: failed to upload try #1, will retry in 10s: vfs cache: failed to transfer file from cache to remote: googleapi: got HTTP response code 429 with
body: <html><head><meta http-equiv="content-type" content="text/html; charset=utf-8"/><title>Sorry...</title><style> body { font-family: verdana, arial, sans-serif; background-color: #fff; color: #000; }</style></head><body><div><table><tr><td><b><font face=sans-serif size=10><font color=#4285f4>G</font><font color=#ea4335>o</font><font color=#fbbc05>o</font><font color=#4285f4>g</font><font color=#34a853>l</font><font color=#ea4335>e</font></font></b></td><td style="text-align: left; vertical-align: bottom; padding-bottom: 15px; width: 50%"><div style="border-bottom: 1px solid #dfdfdf;">Sorry...</div></td></tr></table></div><div style="margin-left: 4em;"><h1>We're sorry...</h1><p>... but your computer or network may be sending automated queries. To protect our users, we can't process your request right now.</p></div><div style="margin-left: 4em;">See <a href="https://support.google.com/websearch/answer/86640">Google Help</a> for more information.<br/><br/></div><div style="text-align: center; border-top: 1px solid #dfdfdf;"><a href="https://www.google.com">Google Home</a></div></body></html>

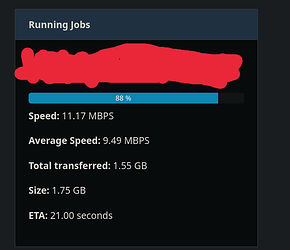

2024/02/27 23:39:56 INFO : vfs cache: cleaned: objects 71 (was 71) in use 1, to upload 0, uploading 1, total size 1.774Gi (was 1.774Gi)

As you can see, files of 1.7 GiB are now failing.

I have now tried --low-level-retries=200, but this still doesn't solve the problem.

My hypothesis is that the low-level retries are being exhausted very quickly. Is there any way to pace low-level retries, maybe with a cooldown period like a retry cooldown?
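One way to picture the pacing being asked for is a handful of quick retries followed by a long cooldown, repeated a limited number of times. This is a generic Python sketch with hypothetical names (`retry_with_cooldown`, `RateLimited`); it is not an existing rclone option, and the default delays are assumptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error raised by an operation that was answered with HTTP 429."""


def retry_with_cooldown(
    op: Callable[[], T],
    quick_retries: int = 3,
    quick_delay: float = 1.0,
    cooldown: float = 300.0,
    max_cooldowns: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try op() a few times in quick succession; if it is still rate limited,
    rest for `cooldown` seconds and start a fresh round of quick retries."""
    for round_no in range(max_cooldowns + 1):
        for attempt in range(quick_retries):
            try:
                return op()
            except RateLimited:
                if attempt < quick_retries - 1:
                    sleep(quick_delay)  # short pause between quick retries
        if round_no < max_cooldowns:
            sleep(cooldown)  # long pause to let the rate limiting cool down
    raise RateLimited("still rate limited after every cooldown")
```

With the defaults, that is at most 18 attempts (3 per round, 6 rounds) spread over roughly 25 minutes of cooldown, so a retry budget is spent over time rather than in a few seconds.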

It always fails at the last minute.

Wherever your machine is, it seems you are being limited by Google. If you have support, you can ask them.

There isn't a flag or anything else that will get you around this.

The problem is, downloads work fine.

And upload retries work fine after a cooldown period. So if there were a way to temporarily hold back retries and let the rate limiting cool down, the loop could be broken.

You could crank something up to crazy values, I guess:

--retries int Retry operations this many times if they fail (default 3)

--retries-sleep Duration Interval between retrying operations if they fail, e.g. 500ms, 60s, 5m (0 to disable) (default 0s)
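To put numbers on "crazy values": if every attempt fails, the idle time is the number of gaps between attempts times `--retries-sleep`. The sketch below assumes `--retries` counts total attempts (rclone's docs say `--retries 1` disables retries) and only understands a small subset of rclone's duration syntax. It is also worth checking whether these flags apply at all to the VFS cache upload retries in the log above, since they retry whole operations such as `rclone copy` or `rclone sync`.

```python
def parse_duration(text: str) -> float:
    """Parse durations like '500ms', '60s', '5m', '1h' into seconds.

    Only a small subset of rclone's duration syntax is handled here.
    """
    scale = {"ms": 0.001, "s": 1.0, "m": 60.0, "h": 3600.0}
    # Check "ms" before "s", since "500ms" also ends with "s".
    for suffix in ("ms", "s", "m", "h"):
        if text.endswith(suffix):
            return float(text[: -len(suffix)]) * scale[suffix]
    raise ValueError(f"unsupported duration: {text!r}")


def worst_case_retry_sleep(retries: int, retries_sleep: str) -> float:
    """Seconds spent sleeping if all `retries` attempts fail (sleeps fall between attempts)."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    return (retries - 1) * parse_duration(retries_sleep)
```

For example, `--retries 10 --retries-sleep 5m` would spend up to 45 minutes waiting between attempts.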

I'd want to figure out why, though, which is why I imagine you'd want to check with your provider and/or Google Support.

Hey! I discovered something.

I had removed the chunk size setting (--drive-chunk-size), and that is why smaller files were being rate limited.

I increased it from the default 8M to 64M; the speed immediately increased a lot, and the 1.7 GB file uploaded successfully.

I think the key is a larger chunk size, which reduces the number of chunks (and therefore upload requests), unless this has some unintended effect.
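The chunk-size effect is easy to put numbers on. Assuming each chunk of a resumable upload is sent as its own request, the request count is the file size divided by the chunk size, rounded up. The rclone docs also note that each chunk is buffered in memory, one per transfer, so memory use grows with the chunk size; `--transfers` defaults to 4. A small Python sketch:

```python
MiB = 1024 * 1024
GiB = 1024 * MiB


def chunk_requests(file_size: int, chunk_size: int) -> int:
    """Chunks (and so, assumed, upload requests) needed for one file: ceil(size / chunk)."""
    return -(-file_size // chunk_size)  # integer ceiling division


def chunk_buffer_memory(chunk_size: int, transfers: int = 4) -> int:
    """Upper bound on chunk buffers held in memory: one chunk per active transfer."""
    return chunk_size * transfers


size = int(1.7 * GiB)
for chunk_mib in (8, 64, 256, 512):
    print(
        f"--drive-chunk-size={chunk_mib}M: "
        f"{chunk_requests(size, chunk_mib * MiB)} chunks for a 1.7 GiB file, "
        f"up to {chunk_buffer_memory(chunk_mib * MiB) // MiB} MiB buffered with 4 transfers"
    )
```

For a 1.7 GiB file this gives 218 chunks at the default 8M, 28 at 64M, 7 at 256M and 4 at 512M; at 512M, four transfers could hold about 2 GiB of chunk buffers.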

I am planning to set this to 256M.

The drive chunk size only applies to uploading, and I never had any issues making it larger.

I used 128M for my settings.

https://rclone.org/drive/#drive-chunk-size

I am having problem uploading. Not downloading.

(I haven't tested with downloading)

Update: with the chunk size set to 256M, I could upload a 2.7 GB file.

So far this has been working.

Edit: this still kept failing for the original 3.19 GB file.

I was able to solve that by using:

--drive-chunk-size=512M \
--drive-pacer-burst=195 \
--drive-pacer-min-sleep=20000ms \

This was after --drive-chunk-size=512M on its own did not work.
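To get a feel for what these pacer values mean, here is a simplified token-bucket model based only on the flags' help text ("Minimum time to sleep between API calls" and "Number of API calls to allow without sleeping"; the defaults are 100ms and 100). It assumes the burst allowance refills at one call per min-sleep interval; rclone's real pacer also applies exponential backoff after errors, so treat this as a rough mental model, not its implementation.

```python
from typing import List


def call_start_times(n_calls: int, min_sleep: float, burst: int) -> List[float]:
    """Earliest start time (seconds) of each of n_calls requested all at once at t=0.

    Model: a bucket starts with `burst` tokens, each call spends one, and one
    token comes back every `min_sleep` seconds.
    """
    times = []
    for i in range(n_calls):
        if i < burst:
            times.append(0.0)  # covered by the initial burst allowance
        else:
            times.append((i - burst + 1) * min_sleep)  # one call per refill
    return times


def steady_calls_per_minute(min_sleep: float) -> float:
    """Sustained call rate once the burst allowance is used up."""
    return 60.0 / min_sleep
```

Under this model, `--drive-pacer-min-sleep=20000ms` allows only about three API calls per minute once the 195-call burst is spent. That would be very slow for many small files, but may matter little when a file is sent as a handful of 512M chunks.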

My plan is to see if it causes any problems for download speeds. If not, I will just keep it as it is.

Otherwise, I will wait a week with this config to let the Google Drive rate limiting calm down.