OK, so here we go: I updated my .bat file, and here is its current content:

@echo off

title Rclone Music Mount READ/WRITE

rclone mount --log-file="C:\logs\rclone.log" -v --attr-timeout 5000h --dir-cache-time 5000h --drive-pacer-burst 200 --drive-pacer-min-sleep 10ms --no-checksum --poll-interval 0 --rc --vfs-cache-mode full --vfs-read-chunk-size 1M --vfs-cache-poll-interval 1h --vfs-read-chunk-size-limit off --vfs-cache-max-size 500G --vfs-cache-max-age 5000h --cache-dir F:/cache/ gcrypted:music_library/ X:

timeout /t 60 /nobreak

rclone rc vfs/refresh recursive=true --drive-pacer-burst 200 --drive-pacer-min-sleep 10ms --timeout 30m --user-agent

pause
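One thing worth checking with a script like this: `rclone mount` stays in the foreground, so in a plain .bat file the `timeout` and `rclone rc vfs/refresh` lines may only run once the mount has exited. Below is a minimal Python sketch of the same sequence with the mount started in the background. The function names `refresh_command` and `mount_then_refresh` are hypothetical and only for illustration; the rclone arguments are the ones already used above.

```python
import subprocess
import time


def refresh_command(timeout="30m"):
    """Build the `rclone rc vfs/refresh` call used in the .bat file."""
    return ["rclone", "rc", "vfs/refresh", "recursive=true", "--timeout", timeout]


def mount_then_refresh(mount_command, wait_seconds=60):
    """Start the mount without blocking, wait, then trigger the refresh.

    `mount_command` is the full `rclone mount ...` argument list
    (hypothetical parameter; pass the flags from the .bat file).
    """
    # Popen returns immediately, unlike a plain command line in a .bat file.
    mount = subprocess.Popen(mount_command)
    # Give the mount and its rc server time to come up.
    time.sleep(wait_seconds)
    # The refresh talks to the rc server that --rc started with the mount.
    subprocess.run(refresh_command(), check=False)
    # Keep the script alive for as long as the mount is running.
    return mount.wait()
```

Calling `mount_then_refresh([...])` with the mount arguments from the .bat file would reproduce the intended order: mount, wait 60 seconds, refresh.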

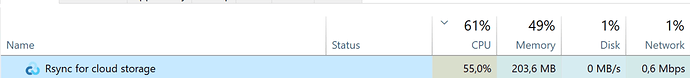

As soon as I start it, I see it using a lot of CPU, which seems to be a good thing:

I can see in the logs that the rc service was started:

2021/11/23 08:38:57 NOTICE: Serving remote control on http://localhost:5572/

Here is what this URL reports, though:

And now a short extract of my logs (I only post extracts because it deletes 50k folders in the first few seconds, which makes the log grow considerably):

2021/11/23 08:46:37 DEBUG : rclone: Version "v1.57.0" starting with parameters ["rclone" "mount" "--log-file=C:\logs\rclone.log" "-vv" "--attr-timeout" "5000h" "--dir-cache-time" "5000h" "--drive-pacer-burst" "200" "--drive-pacer-min-sleep" "10ms" "--no-checksum" "--poll-interval" "0" "--rc" "--vfs-cache-mode" "full" "--vfs-read-chunk-size" "1M" "--vfs-cache-poll-interval" "1h" "--vfs-read-chunk-size-limit" "off" "--vfs-cache-max-size" "500G" "--vfs-cache-max-age" "5000h" "--cache-dir" "F:/cache/" "gcrypted:music_library/" "X:"]

2021/11/23 08:46:37 NOTICE: Serving remote control on http://localhost:5572/

2021/11/23 08:46:37 DEBUG : Creating backend with remote "gcrypted:music_library/"

2021/11/23 08:46:37 DEBUG : Using config file from "C:\Users\meaning\.config\rclone\rclone.conf"

2021/11/23 08:46:37 DEBUG : Creating backend with remote "gdrive:gdrive-crypted/tdruus33sc6ecfmdm5mle2hq90"

2021/11/23 08:46:37 DEBUG : gdrive: detected overridden config - adding "{cHldw}" suffix to name

2021/11/23 08:46:38 DEBUG : fs cache: renaming cache item "gdrive:gdrive-crypted/tdruus33sc6ecfmdm5mle2hq90" to be canonical "gdrive{cHldw}:gdrive-crypted/tdruus33sc6ecfmdm5mle2hq90"

2021/11/23 08:46:38 DEBUG : fs cache: switching user supplied name "gdrive:gdrive-crypted/tdruus33sc6ecfmdm5mle2hq90" for canonical name "gdrive{cHldw}:gdrive-crypted/tdruus33sc6ecfmdm5mle2hq90"

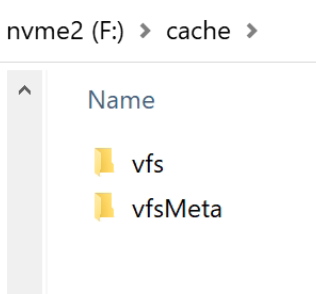

2021/11/23 08:46:38 DEBUG : vfs cache: root is "F:\cache"

2021/11/23 08:46:38 DEBUG : vfs cache: data root is "\\?\F:\cache\vfs\gcrypted\music_library"

2021/11/23 08:46:38 DEBUG : vfs cache: metadata root is "\\?\F:\cache\vfsMeta\gcrypted\music_library"

2021/11/23 08:46:38 DEBUG : Creating backend with remote "F:/cache/vfs/gcrypted/music_library/"

2021/11/23 08:46:38 DEBUG : fs cache: renaming cache item "F:/cache/vfs/gcrypted/music_library/" to be canonical "//?/F:/cache/vfs/gcrypted/music_library/"

2021/11/23 08:46:38 DEBUG : Creating backend with remote "F:/cache/vfsMeta/gcrypted/music_library/"

2021/11/23 08:46:38 DEBUG : fs cache: renaming cache item "F:/cache/vfsMeta/gcrypted/music_library/" to be canonical "//?/F:/cache/vfsMeta/gcrypted/music_library/"

2021/11/23 08:47:00 ERROR : Local file system at //?/F:/cache/vfs/gcrypted/music_library/: Failed to list "10-2019/Alex Falk - OOF (WEB FLAC 24) ": directory not found

2021/11/23 08:47:01 ERROR : Local file system at //?/F:/cache/vfs/gcrypted/music_library/: Failed to list "10-2019/Rembert De Smet & Ferre Baelen - Le Mystérieux EP [FLAC] ": directory not found

2021/11/23 08:47:01 ERROR : Local file system at //?/F:/cache/vfs/gcrypted/music_library/: Failed to list "10-2019/Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager ": directory not found

2021/11/23 08:47:01 INFO : 10-2019/Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager : Removing directory

2021/11/23 08:47:01 ERROR : 10-2019/Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager : Failed to rmdir: remove \?\F:\cache\vfs\gcrypted\music_library\10-2019\Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager␠: The system cannot find the file specified.

2021/11/23 08:47:01 ERROR : Local file system at //?/F:/cache/vfs/gcrypted/music_library/: vfs cache: failed to remove empty directories from cache path "": remove \?\F:\cache\vfs\gcrypted\music_library\10-2019\Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager␠: The system cannot find the file specified.

2021/11/23 08:47:01 ERROR : Local file system at //?/F:/cache/vfsMeta/gcrypted/music_library/: Failed to list "10-2019/Alex Falk - OOF (WEB FLAC 24) ": directory not found

2021/11/23 08:47:03 ERROR : Local file system at //?/F:/cache/vfsMeta/gcrypted/music_library/: Failed to list "10-2019/Rembert De Smet & Ferre Baelen - Le Mystérieux EP [FLAC] ": directory not found

2021/11/23 08:47:03 ERROR : Local file system at //?/F:/cache/vfsMeta/gcrypted/music_library/: Failed to list "10-2019/Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager ": directory not found

2021/11/23 08:47:03 INFO : 10-2019/Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager : Removing directory

2021/11/23 08:47:03 ERROR : 10-2019/Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager : Failed to rmdir: remove \?\F:\cache\vfsMeta\gcrypted\music_library\10-2019\Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager␠: The system cannot find the file specified.

2021/11/23 08:47:03 ERROR : Local file system at //?/F:/cache/vfs/gcrypted/music_library/: vfs cache: failed to remove empty directories from metadata cache path "": remove \?\F:\cache\vfsMeta\gcrypted\music_library\10-2019\Various Artists - Best of Klassik 2018 - Die grosse Gala der OPUS KLASSIK-Preistrager␠: The system cannot find the file specified.

2021/11/23 08:47:03 DEBUG : Network mode mounting is disabled

2021/11/23 08:47:03 DEBUG : Mounting on "X:" ("gcrypted music_library")

2021/11/23 08:47:03 DEBUG : Encrypted drive 'gcrypted:music_library/': Mounting with options: ["-o" "attr_timeout=1.8e+07" "-o" "uid=-1" "-o" "gid=-1" "--FileSystemName=rclone" "-o" "volname=gcrypted music_library"]

2021/11/23 08:47:03 DEBUG : Encrypted drive 'gcrypted:music_library/': Init:

2021/11/23 08:47:03 DEBUG : Encrypted drive 'gcrypted:music_library/': >Init:

2021/11/23 08:47:03 DEBUG : /: Statfs:

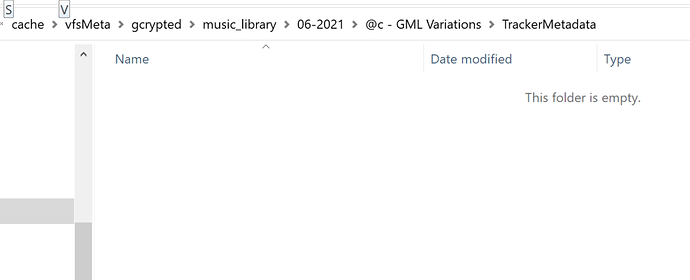

After the initial start, it then begins to "list" all my files and folders, I guess, which looks like this:

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: Releasedir: fh=0xF

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: >Releasedir: errc=0

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: Getattr: fh=0xFFFFFFFFFFFFFFFF

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: >Getattr: errc=0

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: Getattr: fh=0xFFFFFFFFFFFFFFFF

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: >Getattr: errc=0

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: Opendir:

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: OpenFile: flags=O_RDONLY, perm=-rwxrwxrwx

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: >OpenFile: fd=07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata/ (r), err=

2021/11/23 08:50:08 DEBUG : /07-2021/(APD 43) dISHARMONY - dark-Live-fest (2021) WEB/TrackerMetadata: >Opendir: errc=0, fh=0xF

2021/11/23 08:50:08 DEBUG : /09-2019/(2019 - WEB - 24bitFLAC) DJ Ladybarn - Phonk In Tha Garage/TrackerMetadata: Readdir: ofst=0, fh=0x1E

2021/11/23 08:50:08 DEBUG : pacer: low level retry 1/10 (error googleapi: Error 403: User Rate Limit Exceeded. Rate of requests for user exceed configured project quota. You may consider re-evaluating expected per-user traffic to the API and adjust project quota limits accordingly. You may monitor aggregate quota usage and adjust limits in the API Console

2021/11/23 08:50:08 DEBUG : pacer: Rate limited, increasing sleep to 1.053817085s

Here we can see that the API limit was reached shortly after I started rclone.
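To see how often this happens over a whole run rather than in one extract, the log can be scanned for the pacer lines. This is a minimal sketch that assumes the log keeps the exact "Rate limited, increasing sleep to ..." wording shown above; the name `rate_limit_events` is hypothetical.

```python
import re

# Matches lines such as:
# 2021/11/23 08:50:08 DEBUG : pacer: Rate limited, increasing sleep to 1.053817085s
RATE_LIMITED = re.compile(
    r"^(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) DEBUG : pacer: Rate limited, "
    r"increasing sleep to (\S+)$"
)


def rate_limit_events(lines):
    """Return (timestamp, new_sleep) pairs for every rate-limit line."""
    events = []
    for line in lines:
        match = RATE_LIMITED.match(line.strip())
        if match:
            events.append((match.group(1), match.group(2)))
    return events
```

Passing the lines of `C:\logs\rclone.log` to `rate_limit_events` gives one entry per back-off, which makes it easier to tell whether the rate limiting stops once the listing is finished.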

So, will the refresh now "cache" my files and keep me from hitting the API limits once everything is in the cache (for real)? And should I be worried about the "not found" message I get when connecting to 127.0.0.1?

Also, if I understand this correctly, the initial scan of all the files and folders will now happen, reaching the API limit, but the folder and file structure (metadata) should then be stored somewhere, right? Sorry, I am still a little confused about how this actually works.
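On the "not found" response: as far as I understand the rc documentation, the rc server expects POST requests on command paths such as `rc/noop`, so opening the root URL in a browser can return "not found" even when the service is running. A minimal sketch for checking it from Python follows; it assumes the default address from the log and no rc authentication, and the helper names `rc_request` and `rc_call` are hypothetical.

```python
import json
import urllib.request


def rc_request(command, params=None, base_url="http://localhost:5572"):
    """Build a POST request for one rc command, e.g. "rc/noop"."""
    body = json.dumps(params or {}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/{command}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def rc_call(command, params=None, base_url="http://localhost:5572"):
    """Send the request and decode the JSON reply (needs a running mount)."""
    with urllib.request.urlopen(rc_request(command, params, base_url)) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the mount running, `rc_call("rc/noop", {"ping": 1})` should get a JSON reply back if the rc service is reachable; a connection error would point to the service itself rather than to the browser view.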
