What is the problem you are having with rclone?

Previously, we had issues with our pods running out of memory (see this post). Since we switched to the recommended beta, that issue is solved. However, since running the beta that writes listings to disk (instead of keeping them in memory), our egress has dropped significantly. Before, it was consistently around 1 GiB/s; now we are lucky to reach 25 MiB/s. See the graphs below:

When we were still on rclone v1.69.2-beta.8581.84f11ae44.v1.69-stable:

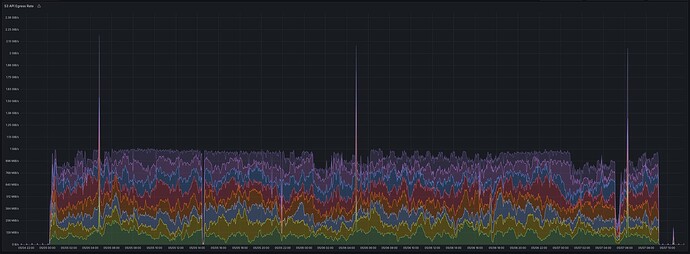

Now, on rclone v1.70.0-beta.8730.9d55b2411:

Is this expected? I am aware this is a beta, but since the memory fix is not yet in a stable release, I have to run this version.
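For context on what "writes listing to disk" means in general: once a directory listing grows past a cutoff (here `--list-cutoff=1000000`), entries can be sorted in memory-sized chunks, spilled to temporary files, and then merged. The sketch below is a generic external merge sort, not rclone's actual code; the function names (`sorted_listing`, `_spill_sorted_chunk`, `_read_chunk`) are hypothetical, and it assumes entry names contain no newline characters.

```python
import heapq
import os
import tempfile
from typing import Iterable, Iterator, List


def _spill_sorted_chunk(chunk: List[str], tmpdir: str) -> str:
    """Sort one in-memory chunk and write it to a temp file, one entry per line."""
    chunk.sort()
    fd, path = tempfile.mkstemp(dir=tmpdir, suffix=".chunk")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        for name in chunk:
            f.write(name + "\n")
    return path


def _read_chunk(path: str) -> Iterator[str]:
    """Stream a spilled chunk back one entry at a time."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            yield line.rstrip("\n")


def sorted_listing(entries: Iterable[str], cutoff: int) -> Iterator[str]:
    """Yield entries in sorted order, keeping at most `cutoff` of them in memory.

    Simplification (assumption): entry names contain no newline characters.
    """
    with tempfile.TemporaryDirectory() as tmpdir:
        spilled: List[str] = []
        chunk: List[str] = []
        for name in entries:
            chunk.append(name)
            if len(chunk) >= cutoff:
                spilled.append(_spill_sorted_chunk(chunk, tmpdir))
                chunk = []
        if not spilled:
            # Under the cutoff: sort purely in memory, nothing touches disk.
            yield from sorted(chunk)
            return
        if chunk:
            spilled.append(_spill_sorted_chunk(chunk, tmpdir))
        # k-way merge: only one pending entry per spill file is held in memory.
        yield from heapq.merge(*(_read_chunk(p) for p in spilled))
```

The trade-off this illustrates is memory for extra disk reads and writes on the machine doing the listing; whether that is what slows this particular sync is not something the sketch can show.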

Run the command 'rclone version' and share the full output of the command.

rclone v1.70.0-beta.8730.9d55b2411

- os/version: alpine 3.21.3 (64 bit)
- os/kernel: 6.8.0-60-generic (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.24.3
- go/linking: static
- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

MinIO to Ceph RGW

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone sync source:"prod-bucket"/ target:"prod-bucket"/ --retries=3 --low-level-retries 10 --log-level=INFO --use-mmap --list-cutoff=1000000 --metadata --transfers=50 --checkers=8 --checksum --s3-use-multipart-etag=true --multi-thread-cutoff=256Mi --s3-chunk-size=5Mi

The rclone config contents with secrets removed.

2025/05/07 07:08:18 NOTICE: Config file "/.rclone.conf" not found - using defaults

We set the config through environment variables, but it is equivalent to:

[minio]
type = s3
provider = minio
access_key_id = xxx
secret_access_key = xxx
endpoint = xxx
region = ""

[ceph]
type = s3
provider = Ceph
access_key_id = xxx
secret_access_key = xxx
endpoint = xxx
sse_customer_algorithm = xxx
sse_customer_key_base64 = xxx
sse_customer_key_md5 = xxx
region = ""
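Since no config file is used (hence the `Config file "/.rclone.conf" not found` notice), the remotes come from environment variables, which rclone reads in the form `RCLONE_CONFIG_<REMOTE>_<OPTION>` with the remote and option names upper-cased. The sketch below uses a hypothetical helper, `remote_to_env`, to show how the `[minio]` section maps onto such variables, using the redacted placeholder values from the post. Because the sync command refers to `source:` and `target:`, the variables actually in use are presumably `RCLONE_CONFIG_SOURCE_*` and `RCLONE_CONFIG_TARGET_*`; that is an inference, not something the post states.

```python
from typing import Dict


def remote_to_env(remote: str, options: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical helper: map one config section to RCLONE_CONFIG_<REMOTE>_<OPTION> names."""
    prefix = f"RCLONE_CONFIG_{remote.upper()}_"
    return {prefix + key.upper(): value for key, value in options.items()}


# Placeholder values copied from the redacted [minio] section in the post.
minio = {
    "type": "s3",
    "provider": "minio",
    "access_key_id": "xxx",
    "secret_access_key": "xxx",
    "endpoint": "xxx",
}

env = remote_to_env("minio", minio)
for name, value in sorted(env.items()):
    print(f"{name}={value}")
```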

A log from the command with the -vv flag

rclone now reports 531.352 MiB/s. However, I do not see anything close to that number in our dashboards.

[2025-05-21 12:17:33 UTC] INFO: START rclone sync from https://s3.xxx.xxx.net/prod-bucket to https://objectstore.xxx.xxx/prod-bucket
[2025-05-21 12:17:33 UTC] INFO: Executing command: rclone sync source:"prod-bucket"/ target:"prod-bucket"/ --retries=3 --low-level-retries 10 --log-level=INFO --use-mmap --list-cutoff=1000000 --metadata --transfers=50 --checkers=8 --checksum --s3-use-multipart-etag=true --multi-thread-cutoff=256Mi --s3-chunk-size=5Mi
2025/05/21 12:17:33 NOTICE: Config file "/.rclone.conf" not found - using defaults
...
[setting defaults with env]
...

2025/05/21 12:18:33 INFO :
Transferred: 0 B / 0 B, -, 0 B/s, ETA -
Checks: 0 / 0, -, Listed 938700
Elapsed time: 1m0.0s

2025/05/21 12:18:37 NOTICE: S3 bucket prod-bucket: Switching to on disk sorting as more than 1000000 entries in one directory detected

2025/05/21 12:19:33 INFO :
Transferred: 0 B / 0 B, -, 0 B/s, ETA -
Checks: 0 / 0, -, Listed 1829200
Elapsed time: 2m0.0s

...
[lots of listing and, ultimately, transferring]
...

2025/05/28 12:12:33 INFO :
Transferred: 1.815 TiB / 1.815 TiB, 100%, 531.352 MiB/s, ETA 0s
Checks: 22758806 / 22758806, 100%, Listed 111755913
Transferred: 645494 / 645494, 100%
Elapsed time: 6d23h55m0.0s
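The final stats line reports 531.352 MiB/s, yet the run took almost seven days for 1.815 TiB. Dividing the logged total by the logged elapsed time gives the wall-clock average; dividing it by the reported speed gives how long the copy would take at that speed. This is plain arithmetic on the numbers in the log. The reading that rclone's speed figure is averaged over the time transfers were actually in flight, rather than over the whole run including listing and checking, is an assumption that would explain the gap.

```python
TIB = 1024 ** 4
MIB = 1024 ** 2

transferred_bytes = 1.815 * TIB                # "Transferred: 1.815 TiB" (rounded by rclone)
elapsed_s = 6 * 86400 + 23 * 3600 + 55 * 60    # "Elapsed time: 6d23h55m0.0s"
reported_mib_s = 531.352                       # speed shown in the final stats line

# Average over the whole run, listing and checking included.
wall_clock_mib_s = transferred_bytes / MIB / elapsed_s
# Time the same volume would need at the reported speed.
implied_active_s = transferred_bytes / MIB / reported_mib_s

print(f"wall-clock average: {wall_clock_mib_s:.2f} MiB/s")
print(f"time at reported rate: {implied_active_s / 3600:.2f} h")
```

With these numbers the wall-clock average is roughly 3.15 MiB/s, and the reported speed corresponds to only about one hour of transfer out of almost 168 hours elapsed.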