What is the problem you are having with rclone?

Certain files won't upload with the S3 backend (MinIO) wrapped with rclone crypt.

Run the command 'rclone version' and share the full output of the command.

rclone v1.69.0-beta.8363.4db09331c

- os/version: Microsoft Windows 11 Pro 23H2 (64 bit)

- os/kernel: 10.0.22631.4169 (x86_64)

- os/type: windows

- os/arch: amd64

- go/version: go1.23.2

- go/linking: static

- go/tags: cmount

Which cloud storage system are you using? (eg Google Drive)

S3 (MinIO)

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone --config rclone-hot-storage.conf copy "c:\Users\Araki\Desktop\temp\Backups\PCs\Laptop Lenovo C340-11\bionic-20211121-2249.tar.gz" "s3_crypt:Backups\PCs\Laptop Lenovo C340-11\bionic-20211121-2249.tar.gz" -P -vvv

Please run 'rclone config redacted' and share the full output. If you get command not found, please make sure to update rclone.

[s3]

type = s3

provider = Minio

access_key_id = XXX

secret_access_key = XXX

endpoint = XXX

location_constraint = XXX

acl = private

region = XXX

[s3_crypt]

type = crypt

remote = s3:rclone-data/encrypted

password = XXX

password2 = XXX

filename_encoding = base32768

Despite the forum's warning, I'm not posting the endpoint publicly in plain text.

A log from the command that you were trying to run with the -vv flag

2024/10/04 19:48:50 DEBUG : rclone: Version "v1.69.0-beta.8363.4db09331c" starting with parameters ["rclone" "--config" "rclone-hot-storage.conf" "copy" "c:\\Users\\Araki\\Desktop\\temp\\Backups\\PCs\\Laptop Lenovo C340-11\\bionic-20211121-2249.tar.gz" "s3_crypt:Backups\\PCs\\Laptop Lenovo C340-11\\bionic-20211121-2249.tar.gz" "-P" "-vvv"]

2024/10/04 19:48:50 DEBUG : Creating backend with remote "c:\\Users\\Araki\\Desktop\\temp\\Backups\\PCs\\Laptop Lenovo C340-11\\bionic-20211121-2249.tar.gz"

2024/10/04 19:48:50 DEBUG : Using config file from "V:\\rclone\\rclone-hot-storage.conf"

2024/10/04 19:48:50 DEBUG : fs cache: renaming child cache item "c:\\Users\\Araki\\Desktop\\temp\\Backups\\PCs\\Laptop Lenovo C340-11\\bionic-20211121-2249.tar.gz" to be canonical for parent "//?/c:/Users/Araki/Desktop/temp/Backups/PCs/Laptop Lenovo C340-11"

2024/10/04 19:48:50 DEBUG : Creating backend with remote "s3_crypt:Backups\\PCs\\Laptop Lenovo C340-11\\bionic-20211121-2249.tar.gz"

2024/10/04 19:48:50 DEBUG : Creating backend with remote "s3:rclone-data/encrypted/痠毕獾潓驡胁貥篜俟/䝩辦ဌ稨㢫缽㜘唍駟/ဖꆎꑬ嫐ꙓތꏪ材纎㲁鮼為靾柑㮂铊砄ɟ/䠼睇㭚嗜龛㣴ꍄ鉏␚桎䜙覸爯╜橩劓憻ɟ"

2024/10/04 19:48:50 DEBUG : fs cache: renaming cache item "s3_crypt:Backups\\PCs\\Laptop Lenovo C340-11\\bionic-20211121-2249.tar.gz" to be canonical "s3_crypt:Backups/PCs/Laptop Lenovo C340-11/bionic-20211121-2249.tar.gz"

2024/10/04 19:48:50 DEBUG : bionic-20211121-2249.tar.gz: Need to transfer - File not found at Destination

2024/10/04 19:48:50 DEBUG : bionic-20211121-2249.tar.gz: Computing md5 hash of encrypted source

2024/10/04 19:48:57 DEBUG : 䠼睇㭚嗜龛㣴ꍄ鉏␚桎䜙覸爯╜橩劓憻ɟ: open chunk writer: started multipart upload: NGI1YzhjNGUtZDdhYS00OTBiLTliNDEtZWNlYTYwYTg5NjA1LjAzMGQwODFmLWVmZjgtNDU5ZC1iN2UzLThhNDA1ODNmOGE5M3gxNzI4MDYwNTM2NDM5OTI5Njc0

2024/10/04 19:48:57 DEBUG : bionic-20211121-2249.tar.gz: multipart upload: starting chunk 0 size 5Mi offset 0/2.875Gi

2024/10/04 19:48:57 DEBUG : bionic-20211121-2249.tar.gz: multipart upload: starting chunk 1 size 5Mi offset 5Mi/2.875Gi

2024/10/04 19:48:57 DEBUG : bionic-20211121-2249.tar.gz: multipart upload: starting chunk 2 size 5Mi offset 10Mi/2.875Gi

2024/10/04 19:48:57 DEBUG : bionic-20211121-2249.tar.gz: multipart upload: starting chunk 3 size 5Mi offset 15Mi/2.875Gi

2024/10/04 19:48:57 DEBUG : bionic-20211121-2249.tar.gz: Cancelling multipart upload

2024/10/04 19:48:57 DEBUG : 䠼睇㭚嗜龛㣴ꍄ鉏␚桎䜙覸爯╜橩劓憻ɟ: multipart upload "NGI1YzhjNGUtZDdhYS00OTBiLTliNDEtZWNlYTYwYTg5NjA1LjAzMGQwODFmLWVmZjgtNDU5ZC1iN2UzLThhNDA1ODNmOGE5M3gxNzI4MDYwNTM2NDM5OTI5Njc0" aborted

2024/10/04 19:48:57 ERROR : bionic-20211121-2249.tar.gz: Failed to copy: failed to upload chunk 1 with 5242880 bytes: operation error S3: UploadPart, https response error StatusCode: 404, RequestID: 17FB4DE6FF8D2EEC, HostID: e8a951b3eb570bf9fcd6fabcdc8fdf3f745c8f289f3c8a42e09005c3cfde6602, api error NoSuchUpload: The specified multipart upload does not exist. The upload ID may be invalid, or the upload may have been aborted or completed.

2024/10/04 19:48:57 ERROR : Attempt 1/3 failed with 1 errors and: failed to upload chunk 1 with 5242880 bytes: operation error S3: UploadPart, https response error StatusCode: 404, RequestID: 17FB4DE6FF8D2EEC, HostID: e8a951b3eb570bf9fcd6fabcdc8fdf3f745c8f289f3c8a42e09005c3cfde6602, api error NoSuchUpload: The specified multipart upload does not exist. The upload ID may be invalid, or the upload may have been aborted or completed.

2024/10/04 19:48:57 DEBUG : bionic-20211121-2249.tar.gz: Need to transfer - File not found at Destination

2024/10/04 19:48:57 DEBUG : bionic-20211121-2249.tar.gz: Computing md5 hash of encrypted source

2024/10/04 19:48:58 INFO : Signal received: interrupt

2024/10/04 19:48:58 INFO : Exiting...

Info

Seemingly, the relevant part is here:

2024/10/04 19:48:57 ERROR : bionic-20211121-2249.tar.gz: Failed to copy: failed to upload chunk 1 with 5242880 bytes: operation error S3: UploadPart, https response error StatusCode: 404, RequestID: 17FB4DE6FF8D2EEC, HostID: e8a951b3eb570bf9fcd6fabcdc8fdf3f745c8f289f3c8a42e09005c3cfde6602, api error NoSuchUpload: The specified multipart upload does not exist. The upload ID may be invalid, or the upload may have been aborted or completed.

2024/10/04 19:48:57 ERROR : Attempt 1/3 failed with 1 errors and: failed to upload chunk 1 with 5242880 bytes: operation error S3: UploadPart, https response error StatusCode: 404, RequestID: 17FB4DE6FF8D2EEC, HostID: e8a951b3eb570bf9fcd6fabcdc8fdf3f745c8f289f3c8a42e09005c3cfde6602, api error NoSuchUpload: The specified multipart upload does not exist. The upload ID may be invalid, or the upload may have been aborted or completed.
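One thing that can be checked from the log itself is whether the upload ID rclone started is the same one it later aborted. Below is a minimal sketch that pulls both IDs out of the two relevant DEBUG lines (copied verbatim from the log above). The regular expressions and the `upload_ids` helper are my own, written for this log format only; they are not part of rclone.

```python
import re

# Two DEBUG lines copied verbatim from the log above.
LOG_LINES = [
    '2024/10/04 19:48:57 DEBUG : 䠼睇㭚嗜龛㣴ꍄ鉏␚桎䜙覸爯╜橩劓憻ɟ: open chunk writer: started multipart upload: NGI1YzhjNGUtZDdhYS00OTBiLTliNDEtZWNlYTYwYTg5NjA1LjAzMGQwODFmLWVmZjgtNDU5ZC1iN2UzLThhNDA1ODNmOGE5M3gxNzI4MDYwNTM2NDM5OTI5Njc0',
    '2024/10/04 19:48:57 DEBUG : 䠼睇㭚嗜龛㣴ꍄ鉏␚桎䜙覸爯╜橩劓憻ɟ: multipart upload "NGI1YzhjNGUtZDdhYS00OTBiLTliNDEtZWNlYTYwYTg5NjA1LjAzMGQwODFmLWVmZjgtNDU5ZC1iN2UzLThhNDA1ODNmOGE5M3gxNzI4MDYwNTM2NDM5OTI5Njc0" aborted',
]

# Patterns written for this log format only (an assumption, not an rclone API).
STARTED = re.compile(r"started multipart upload: (\S+)")
ABORTED = re.compile(r'multipart upload "([^"]+)" aborted')


def upload_ids(lines):
    """Return (started_ids, aborted_ids) found in the given log lines."""
    started, aborted = [], []
    for line in lines:
        match = STARTED.search(line)
        if match:
            started.append(match.group(1))
        match = ABORTED.search(line)
        if match:
            aborted.append(match.group(1))
    return started, aborted


started, aborted = upload_ids(LOG_LINES)
print(started == aborted)  # True: the aborted upload is the one that was just started
```

So the ID that the server rejects with NoSuchUpload appears to be the same one it handed out within the same second, not a stale ID left over from an earlier attempt.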

When running rclone move, about 50 files in total report the same issue, all relatively large (the smallest is, I believe, about 300 MB). Initially, I thought the files had somehow become corrupted when I was transferring them using the sftp backend with an outdated version of rclone, but that turned out not to be the case once I narrowed the problem down (though it still might have broken something in the bucket?).
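A possible reason only the large files are affected: as far as I understand, rclone's S3 backend switches to multipart upload for files above `--s3-upload-cutoff` and splits them into parts of `--s3-chunk-size`. My config sets neither, so the sketch below assumes the defaults (200Mi cutoff, 5Mi chunks; the 5Mi value matches the log). The function names are made up for illustration.

```python
import math

MIB = 1024 * 1024

# Assumed rclone S3 defaults, since my config does not override them:
# --s3-upload-cutoff 200Mi and --s3-chunk-size 5Mi.
UPLOAD_CUTOFF = 200 * MIB
CHUNK_SIZE = 5 * MIB


def uses_multipart(size_bytes, cutoff=UPLOAD_CUTOFF):
    """True if a file of this size would be sent as a multipart upload."""
    return size_bytes > cutoff


def part_count(size_bytes, chunk_size=CHUNK_SIZE):
    """Number of parts needed to upload size_bytes in chunk_size pieces."""
    return math.ceil(size_bytes / chunk_size)


smallest_failing = 300 * 1000 * 1000  # roughly 300 MB, the smallest failing file
tarball = int(2.875 * 1024 * MIB)     # 2.875Gi, the rounded size printed in the log

print(uses_multipart(smallest_failing))  # True
print(part_count(tarball))               # 589
```

If those defaults apply, every failing file is above the cutoff, so they all go through the multipart code path (CreateMultipartUpload followed by UploadPart), which is where the NoSuchUpload error appears.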

Running rclone backend --config rclone-hot-storage.conf list-multipart-uploads s3: returns this:

{

"rclone-data": []

}

The AWS CLI returns the same result.

On the backend side, MinIO also can't find anything related to aborted or incomplete uploads with ./mc ls --recursive --incomplete /home/minio/minio/rclone-data/ (no output).

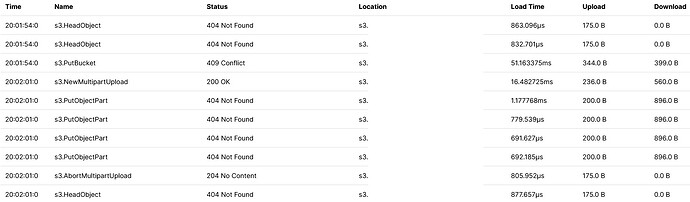

And tracing while uploading returns this:

Weirdly, just changing the filename fixes the issue, but I'd like to fix it without renaming the files or creating a new bucket if that's possible. Is there anything I can do about this?

Thanks.