I found the issue you hit with the master branch. I'll fix it, but yeah... don't use unreleased versions unless I suggest otherwise.

Ugly one? So which branch do you advise using with git clone?


The latest release/tag. Releases are listed on the releases page; use that.

So I am now using excludes with downloads/** and that data is no longer uploaded, as intended!
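To see why an exclude like `downloads/**` keeps that whole tree out of the upload, here is a minimal Python sketch of how rclone-style filter globs match paths. It is a simplified model written for illustration (the function names are hypothetical, and rclone's real matcher also handles character classes, braces and escapes), based on the documented rules that `*` does not cross `/` while `**` does, and that a pattern not starting with `/` must match complete path elements at the end of the path.

```python
import re


def rclone_glob_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a simplified rclone-style filter glob into a compiled regex.

    Simplified model (an assumption, not rclone's actual implementation):
    - '**' matches any characters, including '/'
    - '*'  matches any characters except '/'
    - '?'  matches one character except '/'
    - a leading '/' anchors the pattern at the root of the transfer;
      otherwise it must match whole path elements at the end of the path.
    """
    anchored = pattern.startswith("/")
    body = pattern.lstrip("/")
    out = []
    i = 0
    while i < len(body):
        if body.startswith("**", i):
            out.append(".*")
            i += 2
        elif body[i] == "*":
            out.append("[^/]*")
            i += 1
        elif body[i] == "?":
            out.append("[^/]")
            i += 1
        else:
            out.append(re.escape(body[i]))
            i += 1
    # Unanchored patterns may start at the root or right after any '/'.
    prefix = "^" if anchored else "(?:^|.*/)"
    return re.compile(prefix + "".join(out) + "$")


def is_excluded(path: str, pattern: str) -> bool:
    """True if a path relative to the transfer root matches the exclude pattern."""
    return rclone_glob_to_regex(pattern).match(path) is not None
```

With this model, `downloads/completed/tv/...` is excluded while `tv/Supernatural/...` is not, and `mydownloads/...` is also not excluded because the pattern has to match a whole path element.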

But now I have one question, or maybe I have been misunderstanding everything since the beginning.

Let's take my last download as an example:

I downloaded Supernatural.S12.1080p.AMZN.WEB-DL.DDP5.1.H.264-NTb, and when Transmission finished it, I got:

Size:

72.4 GB (4,320 pieces @ 16.0 MiB)

Location:

/gmedia/downloads/completed/tv

After a while, Sonarr picks it up and creates a new pointer to it in the correct place inside /gmedia/tv/Supernatural.

Some time after that, my cron job runs and uploads the data to Google Drive; however, my HDD still has space occupied by the files of the show that Transmission downloaded.

Isn't the data inside /gmedia/downloads/completed/ supposed to be just pointers? Or is this the correct behaviour, and I need to keep space for it if I want to seed permanently?

Thanks

That's right, as mergerfs isn't magically making space for you.

It avoids keeping a second copy by using a hard link.

So if you have /gmedia/downloads/file.mkv and it gets "copied" to /gmedia/tv/file.mkv, that is done with a hard link, which is a second pointer to the same data. If the file was 1GB, it still uses just 1GB on the local disk, not 2GB.

Once /gmedia/tv/file.mkv is uploaded and removed locally, the link count goes down to 1, and you still have that one local copy for seeding until you remove it. I do not seed from my Google Drive, as imo serving torrent traffic from it is just a bad idea, so I don't do it.
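The link-count behaviour described above can be reproduced with a small Python sketch, assuming a Unix-like local filesystem. The `downloads`/`tv` directory names mirror the example but live in a temporary directory, and the `os.remove` call is only a stand-in for whatever removes the local tv copy after the upload; none of this is taken from the actual setup.

```python
import os
import tempfile
from typing import List, Tuple


def demo_hard_link(size: int = 1024 * 1024) -> List[Tuple[str, int]]:
    """Walk through download -> hard link -> remove the tv copy,
    recording the link count (st_nlink) of the downloaded file at each step."""
    steps = []
    with tempfile.TemporaryDirectory() as root:
        downloads = os.path.join(root, "downloads")
        tv = os.path.join(root, "tv")
        os.makedirs(downloads)
        os.makedirs(tv)

        src = os.path.join(downloads, "file.mkv")
        with open(src, "wb") as f:
            f.write(os.urandom(size))  # stand-in for the downloaded episode
        steps.append(("downloaded", os.stat(src).st_nlink))

        dst = os.path.join(tv, "file.mkv")
        os.link(src, dst)  # a hard link instead of a real copy
        # Both names point at the same inode, so the data is stored only once.
        assert os.stat(src).st_ino == os.stat(dst).st_ino
        steps.append(("hard linked", os.stat(src).st_nlink))

        os.remove(dst)  # stand-in for the upload job removing the local tv copy
        steps.append(("tv copy removed", os.stat(src).st_nlink))
        # The torrent copy still holds all the data, so no space is freed.
        assert os.path.getsize(src) == size
    return steps


print(demo_hard_link())
```

The count goes 1, 2, 1: the space only comes back once the last name pointing at the data (here the torrent copy) is deleted.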

I always thought that after /gmedia/tv/file.mkv was uploaded to GD, the /gmedia/downloads/file.mkv pointer would start pointing to the cloud copy instead of the data blocks on the local disk, freeing up local space.

Unfortunately, it does not work like that. A hard link is just another pointer to the same data on the same device, so you cannot hard link across separate devices, and the copy on Google Drive is on a separate device.
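The same-device rule can be checked from Python: `os.stat` reports a device ID (`st_dev`), and `os.link` fails with `EXDEV` ("Invalid cross-device link") when source and destination are on different filesystems. A minimal sketch with hypothetical helper names; it shows basic POSIX behaviour only, and union or FUSE filesystems such as mergerfs apply their own rules on top of it.

```python
import errno
import os
import tempfile


def same_filesystem(path_a: str, path_b: str) -> bool:
    """Hard links are only possible when both paths share a device ID."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


def try_hard_link(src: str, dst: str) -> bool:
    """Attempt a hard link; return False instead of raising on a cross-device link."""
    try:
        os.link(src, dst)
        return True
    except OSError as exc:
        if exc.errno == errno.EXDEV:  # source and destination are on different devices
            return False
        raise
```

Within a single temporary directory both checks succeed; pointing `dst` at a different disk or mount would make `try_hard_link` return False.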

Hi @Animosity022, when using rclone mount on Google Drive, which is faster for reading and scanning files in Plex: a root folder with all movies in it, or a root folder with separate folders inside for movies that have sequels or are part of a collection, e.g. the MCU? Does the Google Drive API work faster when everything is in folders, or does it not matter? I'm asking because I recently moved everything out of its folders into one root folder, didn't really notice any difference, and now want to put titles with sequels back in their own folders.

A few other questions:

- Is it normal for the initial scan to take a whole day with 2,500 titles?

- Is there an equivalent of the plex_autoscan script for Windows 10?

- Do the Plex library options "Scan my Library automatically" and "Run a partial scan when changes are detected" work with rclone mount and WinFSP?

Info:

Rclone 1.51.0

Operating System: Windows 10 64-bit

Rclone mount: Google Drive (unencrypted) Read-only

Mount command: mount GDrive: P: --config "C:\Users\<user>\.config\rclone\rclone.conf" --dir-cache-time 1000h

API: My Own

It really depends. Having more folders tends to help, as they are fetched with more (smaller) API calls, which go a bit faster in the beginning. If the folders aren't changing, a big folder would take a little longer initially, but it stays cached and needs fewer calls. I just follow the standard Sonarr/Radarr/Plex naming and have a folder for each movie.
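The one-folder-per-movie layout follows Plex's movie naming convention, `Movie Name (Year)/Movie Name (Year).ext`. A minimal Python sketch of building such a path; the helper and its stripping of characters Windows does not allow in file names are assumptions for illustration, not what Sonarr or Radarr do internally.

```python
import re


def movie_path(title: str, year: int, ext: str) -> str:
    """Build 'Title (Year)/Title (Year).ext' so each movie gets its own folder."""
    # Remove characters that are not allowed in Windows file names (an assumption).
    safe = re.sub(r'[<>:"/\\|?*]', "", title).strip()
    name = f"{safe} ({year})"
    return f"{name}/{name}.{ext.lstrip('.')}"
```

For example, `movie_path("Iron Man", 2008, "mkv")` gives `Iron Man (2008)/Iron Man (2008).mkv`.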

It depends on many things, like your connection, computer, etc. If things are moving and progressing, that doesn't seem too bad at all!

No idea as I don't use Windows.

No idea as I don't use Windows.

If you have questions about my settings, please ask away here; for anything not related to my settings, just open a new thread and someone (or I) can help out.

Thanks!

You definitely want each movie in its own folder, regardless of the OS. On Windows in particular, especially with more than a few thousand folders, you should create separate folders for each letter. If you don't, Explorer will take quite a while to list all the individual folders, and a Plex scan will take a very long time, even with a cached/primed directory.
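A per-letter layout like the one suggested here could look like `Movies/I/Iron Man (2008)/...`. A minimal Python sketch of picking the letter folder; the helper names and the choice to put names starting with a digit or symbol into a `#` folder are hypothetical, and leading articles such as "The" are not treated specially.

```python
def letter_bucket(folder: str) -> str:
    """Return the top-level bucket for a movie folder: its first letter, or '#'."""
    first = folder.lstrip()[:1].upper()
    return first if first.isascii() and first.isalpha() else "#"


def bucketed_path(folder: str) -> str:
    """Place a movie folder under its letter bucket, e.g. 'I/Iron Man (2008)'."""
    return f"{letter_bucket(folder)}/{folder}"
```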

There is no plex_autoscan script for Windows that I'm aware of. "Scan my Library automatically" should work, but partial scan does not. I would generally recommend running a manual scan just once a day.

My current mount, thanks to @Animosity022:

rclone mount --buffer-size 256M --dir-cache-time 1000h --poll-interval 15s --rc --read-only --timeout 1h -v

After I upload and before I run a Plex scan, I prime the mount with the following:

rclone rc vfs/refresh recursive=true --fast-list -v

This makes the scan faster by caching the listings of all files and folders first. The best thing is that it only takes a few minutes.
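The upload, refresh, scan ordering described here could be scripted roughly as follows. This is a Python sketch: `upload` and `scan` are hypothetical placeholders for whatever upload job and Plex scan trigger you use, the refresh command simply mirrors the one in the post, and it only works against a mount started with `--rc`. The `run` parameter is injectable so the sequence can be exercised without rclone installed.

```python
import subprocess
from typing import Callable, List

# Mirrors the priming command from the post.
REFRESH_CMD: List[str] = ["rclone", "rc", "vfs/refresh", "recursive=true", "--fast-list", "-v"]


def prime_mount(run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> int:
    """Ask a mount started with --rc to re-read its directory tree; return the exit code."""
    result = run(list(REFRESH_CMD), check=False)
    return result.returncode


def upload_prime_scan(upload: Callable[[], None],
                      scan: Callable[[], None],
                      run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> None:
    """Hypothetical post-upload hook: upload, refresh the VFS cache, then scan."""
    upload()
    code = prime_mount(run)
    if code != 0:
        # Don't start a slow, uncached scan if priming failed.
        raise RuntimeError(f"vfs/refresh failed with exit code {code}")
    scan()
```

Stopping before the scan when the refresh fails is a design choice of this sketch: a scan against an unprimed mount still works, just more slowly.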

@pamoman - Hey there! Rather than using this post to troubleshoot something completely different from what I am using, it's always best to make a new post, and if you have any specific questions on my settings, I'm happy to answer them here.

Hi @Animosity022, I want to use your config from https://github.com/animosity22/homescripts/blob/master/systemd/rclone.service but I am a bit confused about where to start. I got everything set up in rclone but hit the Google Drive rate limit, so I'm trying to fix it by adopting your config. Hope you can help, thanks.

I'd probably start a new thread and use the question template. The service file is used for starting the service, so I'm not sure what your question is.

Actually, how do I use the service file? I'm completely new to rclone. I read through your repo readme as well, but wasn't able to find it.

Please just start a new post instead, as your question isn't related to any of my settings.

Here is some systemd information:

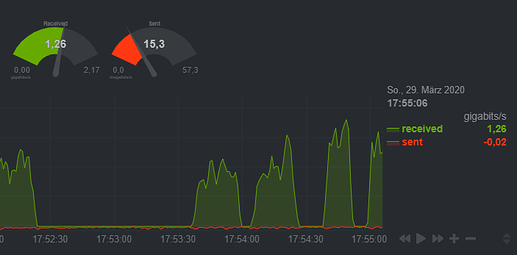

Today I noticed that my VPS has huge incoming traffic with my setup (rclone + Emby): 2.5 TB outgoing and 23 TB incoming.

First step: I checked the traffic meter in Netdata while streaming:

As you can see, the incoming traffic is much higher than the outgoing...

I guess rclone reads files multiple times with the VFS mount while streaming. Does anybody have a similar setup and could check their traffic history?

My mount is:

--allow-other \
--umask 0007 \
--uid 120 \
--gid 120 \
--async-read=true \
--vfs-read-wait 60ms \
--buffer-size 2G \
--fast-list \
--timeout 30m \
--dir-cache-time 96h \
--drive-chunk-size 128M \
--vfs-cache-max-age 72h \
--vfs-cache-mode writes \
--vfs-cache-max-size 100G \
--vfs-read-chunk-size 128M \
--vfs-read-chunk-size-limit 2G \
--log-file=/var/log/rclone/mount1.log \
--log-level=INFO \
--user-agent "GoogleDriveFS/36.0.18.0 (Windows;OSVer=10.0.19041;)"

If you could, please make a new post. If you have a question regarding my settings, you can use this post.

In general, rclone only downloads what you ask it to, so you'd want to check your setup.

Any ideas for speeding up mediainfo loading in Emby? It takes a pretty long time to load posters in the library. Like your latest config, I don't use the VFS options, as I can't figure out whether they are good or bad for me. I use my Google Drive only for streaming with Emby.

Here is my config:

rclone mount Gsuite000: /home/gdrive \
--stats 5s \
--timeout 10s \
--buffer-size 256M \
--contimeout 10s \
--dir-cache-time 2h \
--no-modtime \
--poll-interval 30m \
-v

I am using a proxy for rclone. The upload bandwidth of the proxy is capped at 30Mbps, and I have more than 4,000 movies in the library, so I am not sure if I am asking for too much.