Interesting. I'm using the same setup (mergerfs, writing locally first) and had the Plex connection set up in Sonarr/Radarr to notify on download/upgrade/rename, but Plex would never scan and add the media. The connection from Sonarr/Radarr was working before I moved to a MergerFS/Rclone setup, though. I may have to disable Plex Autoscan and try the Sonarr/Radarr connection again.

Feel free to make a new post and if you share details, I'm happy to help look through as it should work without an issue.

My problem is that when I direct stream 4K content, playback keeps hanging for short periods.

But when I transcode it, the buffer gets bigger (according to Tautulli) and it runs smoothly.

What is your experience with buffer sizes, and which size do you use?

My actual mount:

--cache-db-purge --stats 1s --buffer-size 1G --timeout 5s --contimeout 5s gdrive:/ /mnt/

I'd recommend making a new post and using the question tag so you can fill in all the details. You don't seem to be using my settings, and this post is for questions about my setup that I can answer.

Thanks

I see from your guide that you turn off Deep Analysis in Plex. But this feature is very useful for avoiding the dreaded "you don't have enough bandwidth to play this media" error. This error usually comes about because Deep Analysis has not occurred on the media you are watching, and therefore the Streaming Brain doubles the required bandwidth as a precaution. For high-bitrate files on low-bandwidth connections this can result in the above error, even if the client could actually stream the file at its original bitrate.

I, as well as some people I share my server with, have limited download speeds, ranging from 20 Mbps to 80 Mbps. Most are on the lower side of this range, so running deep analysis will keep my Plex server from A: generating this error message for the end user and B: transcoding more than necessary for client devices on a low-bandwidth connection. My aim is to avoid transcodes altogether, as I am on a shared server.

I am not currently using mergerfs, but am thinking of doing so, mainly because I want to have deep analysis performed on the files I download before they end up on gdrive.

Currently I do this by downloading to folder a and keeping the files there for 5 days. I perform deep analysis (manually at the moment, but I am working on a script) on these files whilst they are in folder a. Then after 5 days a scheduled rclone move puts the files in gdrive, which is folder b. Both folder a and folder b are configured in my Plex libraries.

But the problem I have currently is that once the rclone move happens, Plex wipes out the deep analysis of the file because the file is now in a different location (folder b instead of folder a, where the analysis occurred). Even though it's the same file, because the file path has changed Plex treats it as a new media item and gives it a new ID. (Annoying.)

So I was thinking that mergerfs would avoid this issue. But I am struggling to figure out how I could programmatically determine which files are local and perform deep analysis on those files. In your experience, would mergerfs provide a means to identify which files are local?

Yes, I'm very familiar with the setting and I don't use it on my server. Running deep analysis on a file is a ton of overhead for every item and since I don't use it, I turn it off.

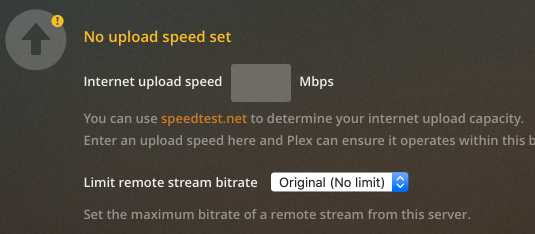

If you limit the bitrate with the setting above and still get the not-enough-bandwidth error, it really has to do with your upload, as it's already limiting the bandwidth to the number you have there. You could also be hitting transcoding issues, with the server unable to keep up. A lot of factors go into that message, and the client plays a large role too.

You'd be better off just having your friends limit the bitrate on their devices as that's a much easier setting.

You can't figure out what's local or not, as that's the beauty of a merged file system. You'd want to do deep analysis before you upload it. That could probably be done via a script or something, but that's outside of my settings as I don't use it. You can probably post to the Plex subreddit, as they are helpful with things like that as well.

In my setup the path names never change for anything, which is why I use mergerfs, so I don't see those issues.
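
For anyone who wants to script the "analyze before upload" idea: the merged view hides where a file lives, but the local branch directory can still be listed directly, and anything found there has not been uploaded yet. A minimal sketch, assuming the local branch is /local and the merged mount is /gmedia (both taken from the examples in this thread); the function name `local_files` is hypothetical.

```python
import os


def local_files(local_branch, merged_mount):
    """Yield (local_path, merged_path) for every file still on the local branch.

    merged_path is the path Plex would see through the mergerfs mount.
    """
    for dirpath, _dirnames, filenames in os.walk(local_branch):
        for name in sorted(filenames):
            local_path = os.path.join(dirpath, name)
            # Path relative to the branch root is the same inside the merged mount.
            rel = os.path.relpath(local_path, local_branch)
            yield local_path, os.path.join(merged_mount, rel)


if __name__ == "__main__":
    # Assumed paths; adjust to your own branch and mount point.
    for _local_path, merged_path in local_files("/local", "/gmedia"):
        print(merged_path)
```

Because the merged path does not change when the file is later moved to the remote, any analysis keyed on that path should survive the upload.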

Thanks for your response.

My upload bandwidth is not the issue for most clients, as I have a hosted server that has a minimum of 400GB upload and max 1Gbps. The issue they get is that if I do not set a per-stream bandwidth limit, their client devices do not always report their bandwidth correctly, and the error message appears. If I put a per-stream limit of 20 Mbps or under, depending on the client device's available bandwidth, it usually resolves the issue. Typically 12 Mbps is the sweet spot for my end users. But this increases the transcode requirement, due to the double bandwidth requirement that applies when deep analysis has not been performed.

Yes, I could get them to set the bandwidth limit on their end for each client device, but this will only make Plex transcode more often than it should. Typically the default quality for remote streams on each client device is 2 Mbps for mobile devices and maybe 4 Mbps for streaming devices like ATV or Fire TV. Very annoying when trying to reduce transcodes and increase direct plays or direct streams.

I go to great efforts to download high-bitrate media and strip it down to its bare bones by reducing the bitrate, and therefore the bandwidth requirement, as much as possible (without sacrificing perceived viewing quality).

I am thinking that I might be able to do some kind of sqlite lookup for each local file to obtain the media ID number from the Plex database, and then pass this on to a Plex Media Scanner --analyze-deeply command.
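
A rough sketch of that lookup, not a tested recipe. The table and column names (`media_parts.file`, `media_parts.media_item_id`, `media_items.metadata_item_id`) and the scanner's `--item` flag are assumptions about how Plex lays things out, so check them against your own database and the scanner's `--help` output first. The function names are hypothetical.

```python
import sqlite3

# Assumed schema: media_parts holds the file path, media_items links a part
# to the metadata item whose ID the scanner operates on.
QUERY = """
SELECT mi.metadata_item_id
FROM media_parts AS mp
JOIN media_items AS mi ON mi.id = mp.media_item_id
WHERE mp.file = ?
"""


def metadata_ids_for_file(conn, file_path):
    """Return the metadata item IDs Plex has recorded for file_path."""
    rows = conn.execute(QUERY, (file_path,)).fetchall()
    return [row[0] for row in rows]


def analyze_command(metadata_id, scanner="Plex Media Scanner"):
    """Build the argv for a deep analysis of one item (--item is an assumption)."""
    return [scanner, "--analyze-deeply", "--item", str(metadata_id)]
```

It is safer to run the query against a copy of the database, or to open it read-only, than to query the live file while Plex is writing to it. The path passed in has to be the one Plex knows, which under mergerfs would be the merged path rather than the local branch path.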

Annoyingly, it looks like my shared hosted server does not have mergerfs, though it does have unionfs. I'll have a play with that and see what I can get working. If I get it working I'll post back here in case anyone is interested.

It increases if you use the limit bandwidth option as you need deep analysis for that.

If you set quality on the client, it uses that and transcodes to that if needed.

You can always start a new topic to get some feedback from some other folks too as I don't use it and it would not be related to my settings as I don't recommend setting it.

Hello. I need some help.

I'm trying to understand your solution and I'm having trouble with some points.

I have 2 folders

/remote

/local

So the idea is to create with mergerfs a single one

/joined

So you point Sonarr and Radarr there and you don't have problems with empty folders and wrong paths.

The thing that I don't understand is:

I download to a temp folder (/home/me/downloads), and when done, Sonarr will move the file to /joined/TV. When it does that, will mergerfs put the file in the /local folder? I understand that you then run a cron job to move the files from local to the cloud, but I don't understand how moving files to /joined ensures that they go to local. Does it just work?

Thanks for the guide!

In my case, I call my mergerfs folder /gmedia. I point Sonarr and Radarr to it (/joined in your case), and I basically exclude my Sonarr/Radarr working folders from the upload and only move the final-destination content that Plex uses.

So

root@gemini:/gmedia# ls -al

total 21

drwxrwxr-x 5 felix felix 4096 Oct 2 06:11 .

drwxr-xr-x 29 root root 4096 Oct 2 06:25 ..

-rw-rw-r-- 1 felix felix 243 May 19 17:33 mounted

drwxrwxr-x 1 felix felix 0 Jun 17 2018 Movies

drwxrwxr-x. 7 felix felix 4096 Sep 30 13:43 NZB

drwxrwsr-x. 5 felix felix 4096 Sep 29 07:09 torrents

drwxrwxr-x 7 felix felix 4096 Oct 2 10:02 TV

NZB and torrents are excluded from my overnight moves and remain local.

Movies and TV are uploaded each night which empties out my local storage.

Does that help?

Ya! Thanks!

Also, I tested mergerfs behavior with 2 random folders before applying it to my shares. With your flags it always copies new files to the first folder listed in the mount, and if file names conflict, it always shows the file from the first folder. That's pretty nice and very easy to work with!

Thank you for your time and help!
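
The behavior observed in that test can be modeled in a few lines. This is only a toy illustration of "first listed branch wins", not how mergerfs is implemented; real mergerfs also considers things like read-only branches and free space, which are ignored here. Both function names are hypothetical.

```python
import os


def create_branch(branches):
    """New files land on the first branch listed (simplified first-found create)."""
    return branches[0]


def resolve(branches, relpath):
    """Return the real path shown for relpath: the first branch that has it, else None."""
    for branch in branches:
        candidate = os.path.join(branch, relpath)
        if os.path.exists(candidate):
            return candidate
    return None


if __name__ == "__main__":
    # Assumed branch order: local disk first, rclone mount second.
    branches = ["/local", "/GD"]
    print("new files go to:", create_branch(branches))
    print("hosts resolves to:", resolve(branches, "hosts"))
```

With the local disk listed first, a file that exists in both places resolves to the local copy, and a file that exists only on the remote is still visible at the same merged path.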

@Animosity022 Thanks a lot for your GitHub contribution. The readme says you are using Ubuntu 18.04, but I noticed some commits about Arch Linux. I would like to rebuild (part of) your setup, but I am not sure whether I should use Ubuntu or Arch Linux. What is your recommendation? (I only have basic Linux skills, but they should be enough.)

It really does not matter too much. I've used Fedora/Arch/Ubuntu mainly.

Arch really isn't a starter Linux imo, as it requires a bit more to get installed and working. Ubuntu is probably the best starter and it tends to just work. Fedora is a bit more bleeding edge but also a good choice.

I'm not understanding. With this setup (mergerfs) you have Sonarr hardlinking directly into something like /mnt/combined/media, which would create partial~ files until it 'uploads' fully, which means.

so you have

/home/local/files

/mnt/notlocal/drive

which mergerfs is

/mnt/combined/media

if you have radarr hardlink directly into /mnt/combined/media you will get partial~ files.

you say this but why would you need to upload with a cron when you have them going directly into mergerfs?

If they go in mergerfs, they WILL upload to google drive automatically

You only get partial files if you are copying and not hardlinking. If you see partial files, you have an issue somewhere in your setup.

The way my setup works is I use a local disk and a rclone mount and combine them with mergerfs. I use a mergerfs policy to always write to the first disk that is listed, which is my local disk.

So the flow looks like:

[felix@gemini gmedia]$ cp /etc/hosts .

[felix@gemini gmedia]$ ls -al hosts

-rw-r--r-- 1 felix felix 221 Oct 9 14:38 hosts

[felix@gemini gmedia]$ ls -al /local/hosts

-rw-r--r-- 1 felix felix 221 Oct 9 14:38 /local/hosts

[felix@gemini gmedia]$ ls -al /GD/hosts

ls: cannot access '/GD/hosts': No such file or directory

[felix@gemini gmedia]$ rclone move /local/hosts gcrypt:

[felix@gemini gmedia]$ ls -al /local/hosts

ls: cannot access '/local/hosts': No such file or directory

[felix@gemini gmedia]$ ls -al /GD/hosts

-rw-rw-r-- 1 felix felix 221 Oct 9 14:38 /GD/hosts

[felix@gemini gmedia]$ ls -al hosts

-rw-rw-r-- 1 felix felix 221 Oct 9 14:38 hosts

If you write locally, nothing moves the file to your Google Drive on its own. You have to move it with an rclone copy or move command. I do that overnight.
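
As an illustration of what such an overnight job could look like, here is a small sketch that builds an rclone move command which skips the download folders. It is not my actual upload script; the paths (/local, gcrypt:) and folder names (NZB, torrents) come from the examples in this thread, and `build_move_command` is a hypothetical helper. `--dry-run` is left on by default so nothing is moved until you remove it.

```python
import shlex


def build_move_command(local_branch, remote, excluded_dirs, dry_run=True):
    """Build an rclone move argv that leaves the excluded top-level folders local."""
    cmd = ["rclone", "move", local_branch, remote]
    for name in excluded_dirs:
        # A leading "/" anchors the rclone filter pattern at the root of the source.
        cmd += ["--exclude", f"/{name}/**"]
    if dry_run:
        cmd.append("--dry-run")
    return cmd


if __name__ == "__main__":
    # Print the command so it can be reviewed or pasted into a cron job.
    print(shlex.join(build_move_command("/local", "gcrypt:", ["NZB", "torrents"])))
```

The command moves from the local branch, not from the merged mount, so files already on the remote are never touched.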

ok i figured it out. everything in /local must be within fuse

sorry for the inconvenience

thank you.

If you don't watch folders for automatic changes in Plex, and you don't use plex_autoscan, do you scan manually?

I don't use plex autoscan.

Plex is connected to Sonarr and Radarr and they notify plex when changes happen.

Hi All,

Thanks for the info on GitHub @Animosity022, very helpful.

I'm trying to do something similar to you; however, I have two separate servers. I have a Plex server with a Google Drive mounted just like your /gmedia, and my local drive is mounted at /media/local/.

The thing is, my second server (a seedbox) is running Deluge and supports rclone but cannot mount any remote folders; that's just a limitation of the server. It can, of course, upload files to the drive.

Now the seedbox is able to run Sonarr; however, I'm not able to mount the gdrive there, so I thought I'd just sync the files over to the first server and use remote mapping in Sonarr. Yes, this will work, but it bothers me: the seedbox is fast at uploading to Google, so I'd rather not send the files back to server 1 before sending them to my drive, as the seedbox can do it.

So I would need the seedbox to do the filename handling, like renaming, and then tell Sonarr to import the movie without doing anything, just like bulk import does.

Another advantage of the seedbox is that it would be able to sync 24/7, within the daily limit of course.

I just cannot figure out how to tell Sonarr to do all of this across two servers without being able to mount my gdrive on the second server.

Any ideas, perhaps?

Best Regards,

Toetje

I'd suggest making a new post, as I try to keep this thread for questions about my particular settings. I can pick it up on that post and try to help out.