What is the problem you are having with rclone?

I'm using rclone to connect to a WebDAV server. One directory lists properly; the other does not.

Both directories load properly in a web browser.

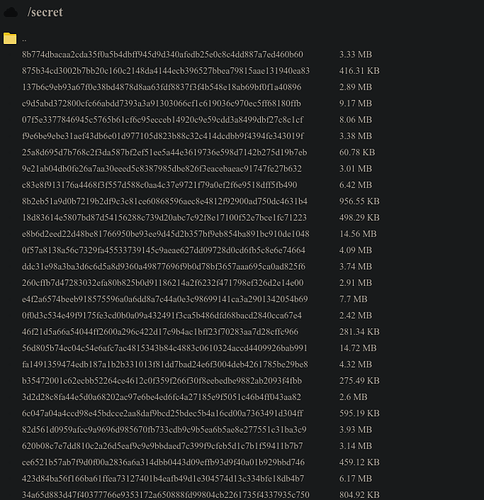

The directory that rclone loads properly contains ordinary JPG pictures. The directory "secret", which rclone fails to load, contains files named after SHA-256 hashes. See the attached picture.

Run the command 'rclone version' and share the full output of the command.

rclone v1.61.1

- os/version: arch (64 bit)

- os/kernel: 6.1.2-arch1-1 (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.19.4

- go/linking: dynamic

Which cloud storage system are you using? (eg Google Drive)

webdav

The command you were trying to run (eg rclone copy /tmp remote:tmp)

$ rclone -vv lsf test:secret --dump bodies --retries 1 --low-level-retries 1

The rclone config contents with secrets removed.

[test]

type = webdav

url = http://127.0.0.1:4443/8tERmJ7R/Cloud%20Drive

A log from the command with the -vv flag

<7>DEBUG : rclone: Version "v1.61.1" starting with parameters ["rclone" "-vv" "lsf" "test:secret" "--dump" "bodies" "--retries" "1" "--low-level-retries" "1"]

<7>DEBUG : rclone: systemd logging support activated

<7>DEBUG : Creating backend with remote "test:secret"

<7>DEBUG : Using config file from "/home/ksj/.config/rclone/rclone.conf"

<7>DEBUG : found headers:

<7>DEBUG : You have specified to dump information. Please be noted that the Accept-Encoding as shown may not be correct in the request and the response may not show Content-Encoding if the go standard libraries auto gzip encoding was in effect. In this case the body of the request will be gunzipped before showing it.

<7>DEBUG : >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

<7>DEBUG : HTTP REQUEST (req 0xc00089ba00)

<7>DEBUG : PROPFIND /8tERmJ7R/Cloud%20Drive/secret HTTP/1.1

Host: 127.0.0.1:4443

User-Agent: rclone/v1.61.1

Depth: 1

Referer: http://127.0.0.1:4443/8tERmJ7R/Cloud%20Drive/

Accept-Encoding: gzip

<7>DEBUG : >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

<7>DEBUG : <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

<7>DEBUG : HTTP RESPONSE (req 0xc00089ba00)

<7>DEBUG :

<7>DEBUG : <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

<7>DEBUG : pacer: low level retry 1/1 (error read tcp 127.0.0.1:60146->127.0.0.1:4443: i/o timeout)

<7>DEBUG : pacer: Rate limited, increasing sleep to 20ms

Failed to create file system for "test:secret": read metadata failed: read tcp 127.0.0.1:60146->127.0.0.1:4443: i/o timeout
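
To narrow down whether the timeout comes from rclone or from the server, the PROPFIND request shown in the log could be sent by hand. The following is only a minimal sketch using the Python standard library: the URL, path, and `Depth: 1` header are copied from the log above, while the function names, the `propfind-check` user agent, and the 30-second timeout are my own placeholders and not anything rclone uses.

```python
import urllib.request

# Base URL copied from the rclone config above; adjust for your own server.
BASE_URL = "http://127.0.0.1:4443/8tERmJ7R/Cloud%20Drive"


def build_propfind(base_url, directory, depth="1"):
    """Build a PROPFIND request for one directory, mirroring the request in the log."""
    url = base_url.rstrip("/") + "/" + directory.strip("/")
    return urllib.request.Request(
        url,
        method="PROPFIND",
        # "propfind-check" is a made-up user agent, used only for this test.
        headers={"Depth": depth, "User-Agent": "propfind-check"},
    )


def propfind(base_url, directory, timeout=30.0):
    """Send the request and return (HTTP status, response body as text).

    Raises OSError (including URLError and timeouts) if the server
    does not answer within `timeout` seconds or the connection fails.
    """
    req = build_propfind(base_url, directory)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = resp.read().decode("utf-8", errors="replace")
        return resp.status, body
```

Calling `propfind(BASE_URL, "secret")` and then the same function on the directory that works should show whether the server itself stalls on the "secret" listing. A WebDAV server normally answers PROPFIND with status 207 and an XML body; a timeout here as well would suggest the problem is on the server side rather than in rclone.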