I'm running rclone v1.53.1 with VFS cache mode full, mounted read-only, with the following settings:

ExecStart=/usr/bin/rclone mount

--config=/home/xtreamcodes/.config/rclone/rclone.conf

--allow-other

--vfs-read-chunk-size=3M

--vfs-read-chunk-size-limit=0

--vfs-read-ahead=3M

--buffer-size=0

--vfs-cache-max-age=168h

--vfs-cache-max-size=1T

--vfs-cache-mode=full

--cache-dir=/mnt/cache

--no-modtime

--no-checksum

--umask=002

--log-level=DEBUG

--log-file=/opt/rclone.log

--async-read=false

--rc

--rc-addr=localhost:5572

--bwlimit-file=1M

--read-only

My reasoning behind the flags:

- --buffer-size=0, so that every read is buffered to disk and then served to the user from disk, without wasting bandwidth filling an in-memory buffer
- --vfs-read-chunk-size=3M, for a faster file open (3M should be enough for a 7-second buffer from a single request)
- --vfs-read-ahead=3M, so there is always a 7-second buffer on disk
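To make the "3M is about 7 seconds" assumption concrete, here is a rough sketch of the average stream bitrate it implies. The function name is made up for illustration, and it assumes rclone's `M` suffix means MiB (binary units) and that the stream has a roughly constant bitrate.

```python
MIB = 1024 * 1024  # assumption: rclone size suffixes such as "3M" are binary (MiB)


def implied_bitrate_mbit(chunk_mib: float, seconds: float) -> float:
    """Average bitrate in Mbit/s at which `chunk_mib` MiB lasts `seconds` seconds."""
    return chunk_mib * MIB * 8 / seconds / 1_000_000


# 3 MiB covering 7 seconds of playback
print(f"{implied_bitrate_mbit(3, 7):.2f} Mbit/s")  # prints "3.60 Mbit/s"
```

If the real streams run at a noticeably higher bitrate, 3M would cover less than 7 seconds and the reader would come back for more data sooner than I planned for.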

The way I understand rclone's workflow when a file is requested is this:

File is requested > a 3M chunk is downloaded and served to the user immediately > a second 3M chunk is downloaded and buffered to disk, so the next read comes from disk

So for each open file there will be 2 requests for 3M chunks at once.
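As a sanity check on that mental model, this is how much data I would expect to be requested right after opening a number of files. It only models my own understanding described above (one chunk served immediately plus one chunk of read-ahead per file), not what rclone actually does internally; the function name is hypothetical.

```python
def mib_requested_on_open(open_files: int,
                          chunk_mib: float = 3,
                          read_ahead_mib: float = 3) -> float:
    """MiB requested right after opening `open_files` files, under my mental model:
    one chunk served immediately plus one read-ahead chunk per file."""
    per_file = chunk_mib + read_ahead_mib
    return open_files * per_file


print(mib_requested_on_open(1))  # 6 MiB for a single file
print(mib_requested_on_open(6))  # 36 MiB for six files
```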

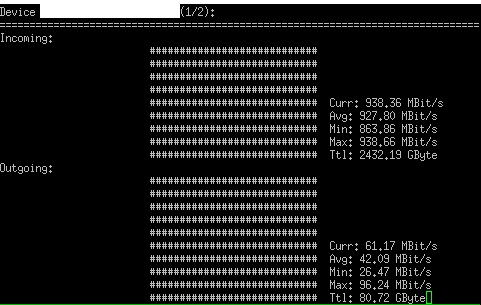

However with just 6 files open it's using 100% of my download bandwidth:

even with --bwlimit-file of 1M how's that possible?
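For reference, this is the ceiling I expected from that flag. It is a rough sketch that assumes the limit is applied once per open file and that `1M` means 1 MiB/s; whether rclone applies it per file, per download stream, or differently in cache mode full is exactly what I'm unsure about. The function name is made up for illustration.

```python
MIB = 1024 * 1024  # assumption: "1M" in --bwlimit-file means 1 MiB/s


def expected_ceiling(open_files: int, per_file_limit_mib_s: float = 1.0):
    """Return (MiB/s, Mbit/s) if each open file were capped independently."""
    mib_s = open_files * per_file_limit_mib_s
    mbit_s = mib_s * MIB * 8 / 1_000_000
    return mib_s, mbit_s


mib_s, mbit_s = expected_ceiling(6)
print(f"{mib_s:.1f} MiB/s = {mbit_s:.2f} Mbit/s")  # prints "6.0 MiB/s = 50.33 Mbit/s"
```

Sustained usage well above that figure with 6 files open would suggest the limit is not being applied the way I assumed.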

I need help finding the optimal settings for the new cache mode: ones that keep read-ahead (bandwidth spent downloading data in advance) to the minimum strictly required for playback to work.

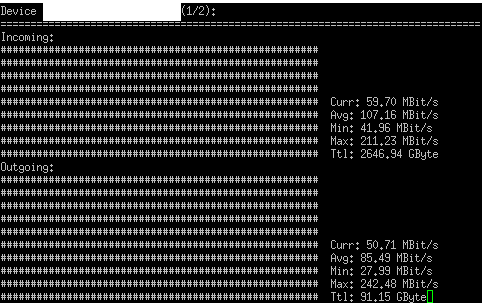

With the default settings it does behave way better:

^ this with the defaults for the flags --buffer-size, --vfs-read-ahead, vfs-read-chunk-size-limit, vfs-read-chunk-size

What is the explanation behind this?