What is the problem you are having with rclone?

I'm worried about the CPU consumption on the destination host.

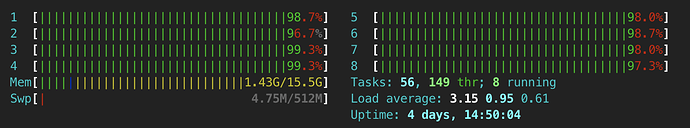

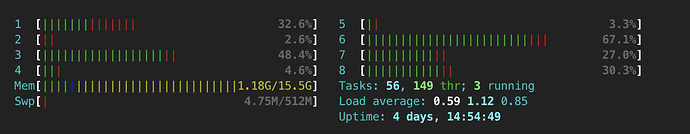

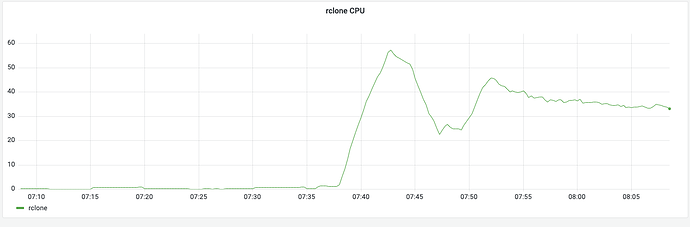

While monitoring both instances during an rclone sync to evaluate timings and performance, I noticed that CPU usage is completely maxed out during the file-check phase of the sync, and this can last several minutes. During the file-transfer phase, by contrast, CPU usage is much healthier.

I'm concerned about putting this sync in a cron job.

Any thoughts?

Should I worry?

Can file checks be throttled in any way?

Thanks for your admirable work on this now-legendary tool.

Kind regards.

What is your rclone version (output from rclone version)

Which cloud storage system are you using? (eg Google Drive)

Local Linux ext4

Remote Nextcloud WebDAV

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone sync {local} {remote} -P -v

8 TB of content spread across roughly 700'000 files.

The rclone config contents with secrets removed.

irrelevant

A log from the command with the -vv flag

Paste log here
