What is the problem you are having with rclone?

rclone sync from local to Google Drive hangs at 100%. Everything runs perfectly at the beginning of the sync, but sometimes, when it reaches 100%, it just hangs there for hours and hours. The only way out is to kill the process and run it again with exactly the same command; so far the second run has always finished OK (the error is very intermittent).

What is your rclone version (output from rclone version)

rclone v1.50.2

- os/arch: darwin/amd64

- go version: go1.13.4

Which OS you are using and how many bits (eg Windows 7, 64 bit)

macOS High Sierra, 64-bit

Which cloud storage system are you using? (eg Google Drive)

Google Drive, with a legit (i.e., more than 5 users) G Suite Unlimited account

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone -v --checkers=12 --transfers=8 --multi-thread-cutoff=1k \
--multi-thread-streams=8 --low-level-retries=1000 --user-agent REDACTED \
--rc --bwlimit "09:00,4m 21:00,off sat-09:01,off sun-09:01,off" \
--fast-list sync /REDACTED/REDACTED/REDACTED GDRIVE_REMOTE:REMOTEDIR

The rclone config contents with secrets removed.

[GDRIVE_REMOTE]

type = drive

client_id = REDACTED.apps.googleusercontent.com

client_secret = REDACTED

service_account_file =

token = {"access_token":"REDACTED","token_type":"Bearer","refresh_token":"1/REDACTED","expiry":"2020-08-20T11:00:27.698728-03:00"}

root_folder_id = REDACTED

A log from the command with the -vv flag

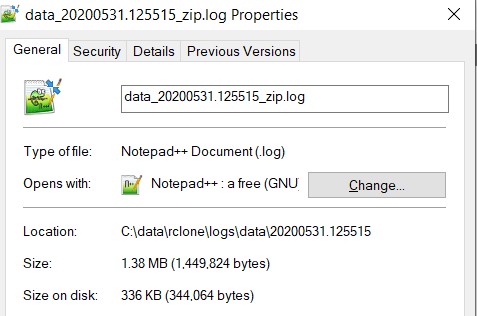

Unfortunately, I don't have a -vv log: the problem is intermittent and not easily reproducible, and I don't have the free disk space to run with -vv all the time. I have it running with -v; here's the current log:

2020/08/20 03:21:37 NOTICE: Serving remote control on http://127.0.0.1:5572/

2020/08/20 03:22:37 NOTICE: Scheduled bandwidth change. Bandwidth limits disabled

2020/08/20 03:22:38 INFO :

Transferred: 0 / 0 Bytes, -, 0 Bytes/s, ETA -

Errors: 0

Checks: 0 / 0, -

Transferred: 0 / 0, -

Elapsed time: 0s

2020/08/20 03:23:07 INFO : hints.34766: Copied (new)

2020/08/20 03:23:18 INFO : Google drive root 'REDACTED': Waiting for checks to finish

2020/08/20 03:23:32 INFO : integrity.34766: Copied (new)

2020/08/20 03:23:32 INFO : Google drive root 'REDACTED': Waiting for transfers to finish

2020/08/20 03:23:38 INFO :

Transferred: 163.411k / 991.613 MBytes, 0%, 4.315 kBytes/s, ETA 2d17h20m17s

Errors: 0

Checks: 30814 / 30814, 100%

Transferred: 2 / 6, 33%

Elapsed time: 37.8s

Transferring:

* index.34766: 0% /628.873M, 0/s, -

* data/34/34764: 0% /362.580M, 0/s, -

* data/34/34765:100% /17, 0/s, 0s

* data/34/34766:100% /17, 0/s, 0s

2020/08/20 03:24:37 INFO : data/34/34766: Copied (new)

2020/08/20 03:24:38 INFO :

Transferred: 269.371M / 991.613 MBytes, 27%, 2.753 MBytes/s, ETA 4m22s

Errors: 0

Checks: 30814 / 30814, 100%

Transferred: 3 / 6, 50%

Elapsed time: 1m37.8s

Transferring:

* index.34766: 13% /628.873M, 2.430M/s, 3m42s

* data/34/34764: 50% /362.580M, 3.018M/s, 59s

* data/34/34765:200% /17, 0/s, -

2020/08/20 03:25:21 INFO : data/34/34765: Copied (new)

2020/08/20 03:25:38 INFO :

Transferred: 608.027M / 991.613 MBytes, 61%, 3.852 MBytes/s, ETA 1m39s

Errors: 0

Checks: 30814 / 30814, 100%

Transferred: 4 / 6, 67%

Elapsed time: 2m37.8s

Transferring:

* index.34766: 41% /628.873M, 2.894M/s, 2m7s

* data/34/34764: 96% /362.580M, 2.817M/s, 4s

2020/08/20 03:26:38 INFO :

Transferred: 925.017M / 991.613 MBytes, 93%, 4.246 MBytes/s, ETA 15s

Errors: 0

Checks: 30814 / 30814, 100%

Transferred: 4 / 6, 67%

Elapsed time: 3m37.8s

Transferring:

* index.34766: 89% /628.873M, 5.222M/s, 12s

* data/34/34764:100% /362.580M, 86.782k/s, 0s

2020/08/20 03:27:38 INFO :

Transferred: 991.613M / 991.613 MBytes, 100%, 3.569 MBytes/s, ETA 0s

Errors: 0

Checks: 30814 / 30814, 100%

Transferred: 4 / 6, 67%

Elapsed time: 4m37.8s

Transferring:

* index.34766:100% /628.873M, 264.989k/s, 0s

* data/34/34764:100% /362.580M, 1.806k/s, 0s

2020/08/20 03:28:38 INFO :

Transferred: 991.613M / 991.613 MBytes, 100%, 2.935 MBytes/s, ETA 0s

Errors: 0

Checks: 30814 / 30814, 100%

Transferred: 4 / 6, 67%

Elapsed time: 5m37.8s

Transferring:

* index.34766:100% /628.873M, 5.514k/s, 0s

* data/34/34764:100% /362.580M, 38/s, 0s

2020/08/20 03:29:38 INFO :

Transferred: 991.613M / 991.613 MBytes, 100%, 2.492 MBytes/s, ETA 0s

Errors: 0

Checks: 30814 / 30814, 100%

Transferred: 4 / 6, 67%

Elapsed time: 6m37.8s

Transferring:

* index.34766:100% /628.873M, 117/s, 0s

* data/34/34764:100% /362.580M, 0/s, 0s

[This same block then repeats ad nauseam, with no intervening errors, warnings, or other messages, until the process is killed]

If it helps, the data being synced is a borgbackup repo tree of ~15 TB in total (only 1 GB or so of it is currently modified each day).

One of those processes is hung right now; if there's anything I can try to get out of it (e.g., a SIGQUIT core file, dtruss output, or whatever), please let me know. I can wait a few more hours before I'm forced to kill and restart it. Unfortunately, my use case does not allow me to run with -vv all the time, as it would generate a ton of output and I don't have the disk space to store it all (free space is really tight on this machine).
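On the SIGQUIT option: rclone is a Go program, and by default the Go runtime responds to SIGQUIT by printing the stack trace of every goroutine to stderr and then exiting, which might show where the two stuck transfers are blocked. A minimal sketch follows, assuming rclone does not install its own SIGQUIT handler and that the process's stderr is still being captured somewhere. `dump_goroutines` is a hypothetical helper name, not an rclone feature.

```shell
# Hypothetical helper: ask a Go process to dump all of its goroutine stacks.
# The dump is written to the target's stderr and the process exits afterwards,
# so this only makes sense when the process is about to be killed anyway.
dump_goroutines() {
    pid="$1"
    # Refuse to signal a PID that does not exist (or that we cannot signal).
    kill -0 "$pid" 2>/dev/null || { echo "no such process: $pid" >&2; return 1; }
    kill -QUIT "$pid"
}

# Example (not run here; assumes exactly one rclone process is running):
#   dump_goroutines "$(pgrep -x rclone)"
```

Since the dump replaces a clean shutdown, it would be the last step before restarting the sync rather than something to run periodically.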

Another idea would be to implement (if it isn't implemented already) some way to turn on -vv in an already-running rclone process: say, by sending SIGUSR1 or by creating a file somewhere.
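Because the command above is already started with --rc, the remote-control API may be one way to do this without a signal. The sketch below assumes that the `options/set` rc call is available in the running version and that a `LogLevel` of 8 selects DEBUG (-vv) output, which is the example value I recall from the rclone rc documentation; both should be checked against the docs for the installed version. `loglevel_payload` and `set_loglevel` are hypothetical helper names.

```shell
# Build the JSON body for the options/set rc call.
# The numeric level is passed through unchanged; 8 is ASSUMED to mean DEBUG.
loglevel_payload() {
    printf '{"main": {"LogLevel": %d}}' "$1"
}

# Send the payload to the rclone instance listening on the default rc
# address (http://127.0.0.1:5572/, as reported in the log above).
set_loglevel() {
    rclone rc options/set --json "$(loglevel_payload "$1")"
}

# Example (not run here):
#   set_loglevel 8   # raise the hung process to debug logging
```

If this works, debug output would only need to be enabled once a hang is observed, which avoids storing -vv logs for the healthy part of the run.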