Previously I was running rclone inside a `screen` or `byobu` session on my Linux server. This worked very well: I could SSH in and out without any issues and always read the logs in the console when necessary (using the `-v` flag).

The major downside was that rclone would not start automatically after a reboot.

So I decided to run rclone as a service under `supervisor` to make it more robust.

While automating start and stop, I noticed a couple of problems that are not actually related to supervisor but rather to how I use rclone.

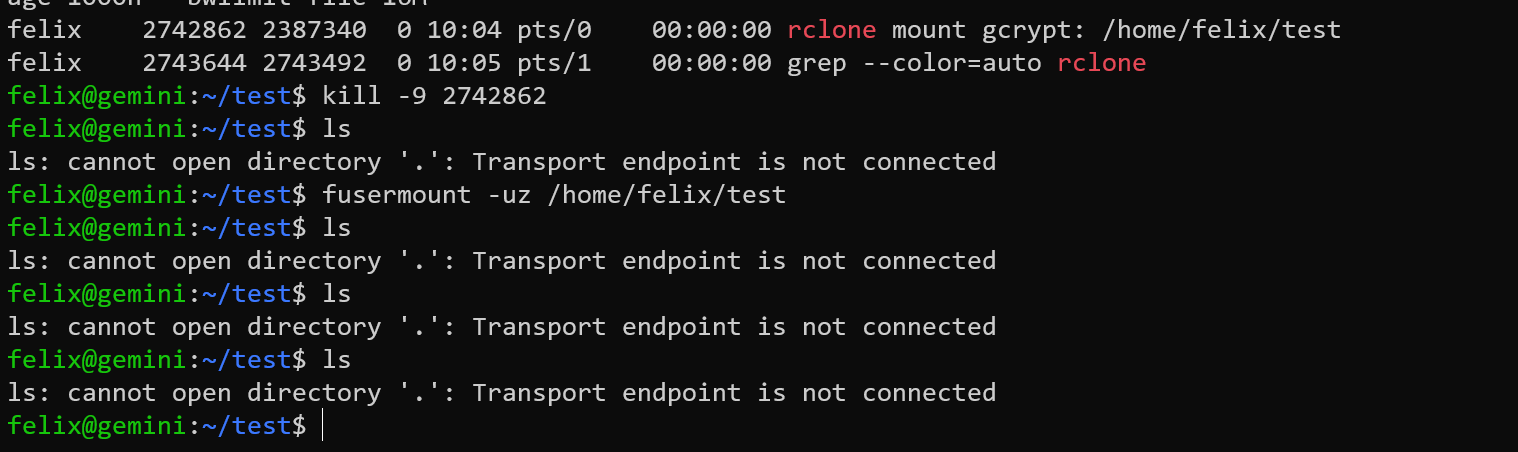

The main problem is that rclone usually doesn't exit cleanly for me. Suppose I run `rclone mount` manually in my shell, quit it with Ctrl+C, and then try to start it again: it refuses, because the unmount didn't complete. At that point the folder is no longer usable, but it still blocks remounting.

```
whiteloader@whiteloader:~$ rclone mount crypt2: /mnt/shared/fusemounts/SB/ --allow-other -v --fast-list --buffer-size=16M --vfs-cache-mode full --dir-cache-time 172h --vfs-read-chunk-size 16M --vfs-read-chunk-size-limit 512M --vfs-cache-max-age 5000h --vfs-cache-poll-interval 10m --umask 0 --vfs-write-back 5m --cache-dir /mnt/shared/media/cache/ --vfs-cache-max-size 1T
2021/01/24 21:53:30 Fatal error: Can not open: /mnt/shared/fusemounts/SB/: open /mnt/shared/fusemounts/SB/: transport endpoint is not connected
```

When I do this manually it's not a problem: I simply run `fusermount -uz /mnt/shared/fusemounts/SB` and then I can mount again. Supervisor, however, can't do that, so it gives up after three attempts to start rclone.


So my question is:

Is there a command-line option for rclone that forces the remount (basically running `fusermount -uz` automatically if mounting fails)? If not, could I work around this by cleverly using supervisor or a script?

Alternatively, is it possible to tell rclone to force-unmount the mountpoint when it quits?
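For the scripting route, a wrapper along these lines might work. This is a minimal, untested sketch assuming bash on Linux, `fusermount` from FUSE being on the PATH, and a mountpoint path without spaces. The function names `is_mounted`, `cleanup_stale_mount` and `run_with_unmount` are hypothetical helpers, not part of rclone or supervisor. The idea is to do the manual `fusermount -uz` step automatically both before starting rclone and after it exits.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of rclone or supervisor) that automate the
# manual `fusermount -uz` step around an `rclone mount` process.
# Assumes Linux, bash, and a mountpoint path without spaces.

# Succeed if MOUNTPOINT is listed in /proc/mounts, even when the mount is stale
# ("transport endpoint is not connected").
is_mounted() {
  local mp="${1%/}"          # /proc/mounts lists paths without a trailing slash
  mp="${mp:-/}"              # ...except for the root filesystem itself
  [ -r /proc/mounts ] || return 1
  awk -v mp="$mp" '$2 == mp { found = 1 } END { exit !found }' /proc/mounts
}

# Lazily unmount MOUNTPOINT if anything is still mounted there.
cleanup_stale_mount() {
  if is_mounted "$1"; then
    echo "lazily unmounting leftover mount at $1" >&2
    fusermount -uz "$1"
  fi
}

# Usage: run_with_unmount MOUNTPOINT COMMAND [ARGS...]
# Clears any stale mount, runs COMMAND in the background, forwards SIGINT and
# SIGTERM to it as SIGTERM, and clears the mountpoint again after COMMAND exits.
# Returns COMMAND's exit status.
run_with_unmount() {
  local mp="$1"; shift
  cleanup_stale_mount "$mp" || return 1
  "$@" &
  local pid=$! status
  # Background jobs in a non-interactive shell ignore SIGINT, so send SIGTERM.
  trap "kill -TERM $pid 2>/dev/null" INT TERM
  while :; do
    wait "$pid"; status=$?
    # wait returns early when a trapped signal arrives; keep waiting while
    # the child is still shutting down.
    kill -0 "$pid" 2>/dev/null || break
  done
  trap - INT TERM
  cleanup_stale_mount "$mp"
  return "$status"
}
```

Supervisor's `command=` could then point to a small script (for example a hypothetical `/home/whiteloader/bin/rclone-sb.sh`) that sources these functions and calls `run_with_unmount /mnt/shared/fusemounts/suptest rclone mount crypt2: /mnt/shared/fusemounts/suptest/ ...` with the same flags as in the supervisor config. On the supervisor side it may also be worth checking the stop behaviour: by default it sends SIGTERM and falls back to SIGKILL after `stopwaitsecs` (10 seconds), and a process killed with SIGKILL gets no chance to unmount.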


Below is a copy of my supervisor config for your reference:

```ini
[program:rcloneSB]
command=rclone mount crypt2: /mnt/shared/fusemounts/suptest/ --allow-other -v --fast-list --buffer-size=16M --vfs-cache-mode full --dir-cache-time 172h --vfs-read-chunk-size 16M --vfs-read-chunk-size-limit 512M --vfs-cache-max-age 5000h --vfs-cache-poll-interval 10m --umask 0 --vfs-write-back 5m --cache-dir /mnt/shared/media/cache/ --vfs-cache-max-size 1T
autostart=true
user=whiteloader
stdout_logfile=/home/whiteloader/rclonelogs/sb.log
stdout_logfile_maxbytes=100MB
stdout_logfile_backups=20
stderr_logfile=/home/whiteloader/rclonelogs/sb_err.loc
stderr_logfile_maxbytes=100MB
stderr_logfile_backups=10
environment=HOME="/home/whiteloader",USER="whiteloader"
```

Another odd thing is that, under supervisor, all of rclone's output goes to stderr instead of stdout. I'm not sure whether this is an rclone or a supervisor issue.

Thank you very much in advance!

What is the problem you are having with rclone?

rclone does not exit cleanly and then cannot restart automatically.

What is your rclone version (output from rclone version)

```
rclone v1.53.4
- os/arch: linux/amd64
- go version: go1.15.6
```

Which OS you are using and how many bits (eg Windows 7, 64 bit)

Linux, Ubuntu 18.04 LTS (64-bit)

Which cloud storage system are you using? (eg Google Drive)

Google Drive

The rclone config contents with secrets removed.

```ini
[gdrive]
type = drive
client_id = 1234.apps.googleusercontent.com
client_secret = 1234
scope = drive
root_folder_id =
service_account_file =
token = {"access_token":KF$}
team_drive = 0AKUk9PVA
upload_cutoff = 64M
chunk_size = 64M

[crypt2]
type = crypt
remote = gdrive:crypt
filename_encryption = standard
directory_name_encryption = true
```