#### What is the problem you are having with rclone?

I'm getting insanely high egress when using rclone mount with S3-compatible storage as the backend for a media streaming application.

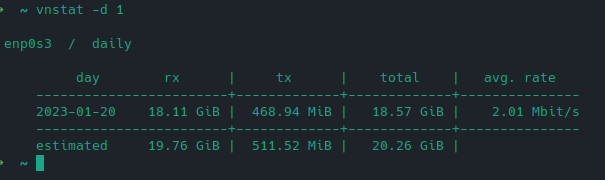

I run a media streaming application (Ampache) for personal use. I store my media files (about 200 GB) on IDrive e2 (S3-compatible) and mount the buckets with rclone mount. Ampache runs a daily catalog update job over this rclone-mounted media repository, and I got a pretty high bill after only a few days of use. I'm trying to understand what is causing the high egress, because the catalog update job should only look for modified or added files in order to update the catalog. I suspect the job causes the entire library to be downloaded (egressed) to the server running Ampache on every run. Is that supposed to happen? Am I using the wrong parameters for my application? Are there rclone mount parameters that change or limit this behavior?
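To put a number on that suspicion, here is a rough back-of-the-envelope estimate. It assumes the worst case, where every catalog run reads each file in full, and uses my approximate library size of 200 GB with one run per day. The 30-day period and the variable names are only illustrative, not something taken from my bill.

```shell
#!/bin/sh
# Worst-case egress estimate, assuming each catalog run downloads the whole library.
library_gb=200      # approximate library size (from my setup)
runs_per_day=1      # the catalog update job runs once a day
days=30             # assumed billing period, for illustration only

daily_gb=$((library_gb * runs_per_day))
monthly_gb=$((daily_gb * days))

echo "worst-case egress per day:   ${daily_gb} GB"
echo "worst-case egress per month: ${monthly_gb} GB"
```

If the egress graph sits close to the daily figure, that would support the full-download theory; a much lower number would suggest something else is going on.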

Thanks in advance.

#### Run the command 'rclone version' and share the full output of the command.

```
rclone v1.61.0
- os/version: arch 22.0.0 (64 bit)
- os/kernel: 5.15.85-1-MANJARO (x86_64)
- os/type: linux
- os/arch: amd64
- go/version: go1.19.4
- go/linking: dynamic
- go/tags: none
```

#### Which cloud storage system are you using? (eg Google Drive)

IDrive e2 (S3-compatible)

#### The command you were trying to run (eg `rclone copy /tmp remote:tmp`)

```
rclone mount --verbose idrive:bucket /mnt/idrive/bucket --ignore-checksum --allow-other --allow-non-empty --drive-pacer-min-sleep 10ms --drive-pacer-burst 200 --vfs-cache-mode writes --bwlimit-file 32M
```

#### The rclone config contents with secrets removed.

Paste config here

#### A log from the command with the `-vv` flag

Paste log here