rclone sync is using all my memory with the iDrive+crypt backend

$ rclone version

rclone v1.62.2

- os/version: ubuntu 18.04 (64 bit)

- os/kernel: 4.15.0-200-generic (x86_64)

- os/type: linux

- os/arch: amd64

- go/version: go1.20.2

- go/linking: static

- go/tags: none

Cloud storage system: IDrive e2

The command I was running:

sudo rclone --log-file=/tmp/rclone.log --config /root/.config/rclone/rclone.conf --retries 1 --ignore-errors sync --exclude-if-present .rclone-ignore --links -v -v --memprofile=/tmp/rclone-profile3 /home idrive-e2-encrypted:backup-marelle-home

I stopped the command once it started using 10% of my memory. In earlier runs, memory kept growing without limit until rclone stalled, spending all its time swapping.

[idrive-e2]

type = s3

provider = IDrive

access_key_id = ***

secret_access_key = ***

endpoint = k5a8.par.idrivee2-45.com

acl = private

memory_pool_use_mmap = true

[idrive-e2-encrypted]

type = crypt

remote = idrive-e2:

filename_encryption = standard

password = ***

password2 = ***

directory_name_encryption = false

The full -vv log is too big to post, as it contains one entry per file. After hiding the file names and removing duplicate lines, I get:

2023/03/16 14:10:21 DEBUG : rclone: Version "v1.62.2" starting with parameters ["rclone" "--log-file=/tmp/rclone.log" "--config" "/root/.config/rclone/rclone.conf" "--retries" "1" "--ignore-errors" "sync" "--exclude-if-present" ".rclone-ignore" "--links" "-v" "-v" "--memprofile=/tmp/rclone-profile3" "/home" "idrive-e2-encrypted:backup-marelle-home"]

2023/03/16 14:10:21 DEBUG : Creating backend with remote "/home"

2023/03/16 14:10:25 DEBUG : Using config file from "/root/.config/rclone/rclone.conf"

2023/03/16 14:10:25 DEBUG : local: detected overridden config - adding "{b6816}" suffix to name

2023/03/16 14:10:25 DEBUG : fs cache: renaming cache item "/home" to be canonical "local{b6816}:/home"

2023/03/16 14:10:25 DEBUG : Creating backend with remote "idrive-e2-encrypted:backup-marelle-home"

2023/03/16 14:10:25 DEBUG : Creating backend with remote "idrive-e2:mr9p09bhqntfjn7dran3jjtnlbt8gdaonq3s6q6b7chbco2lmfi0"

2023/03/16 14:10:25 DEBUG : Resolving service "s3" region "us-east-1"

2023/03/16 14:10:25 DEBUG : Creating backend with remote "idrive-e2:backup-marelle-home"

2023/03/16 14:10:25 DEBUG : Resolving service "s3" region "us-east-1"

2023/03/16 14:10:25 DEBUG : ***: Excluded

2023/03/16 14:10:25 DEBUG : ***: Size and modification time the same (differ by 0s, within tolerance 1ns)

2023/03/16 14:10:52 NOTICE: ***: Duplicate directory found in destination - ignoring

2023/03/16 14:10:52 NOTICE: ***: Duplicate directory found in destination - ignoring

2023/03/16 14:10:52 NOTICE: ***: Duplicate directory found in destination - ignoring

2023/03/16 14:10:52 NOTICE: ***: Duplicate directory found in destination - ignoring

2023/03/16 14:11:25 INFO :

Transferred: 0 B / 0 B, -, 0 B/s, ETA -

Checks: 38803 / 48813, 79%

Elapsed time: 1m3.9s

Checking:

* ***: checking

* ***: checking

* ***: checking

* ***: checking

* ***: checking

* ***: checking

* ***: checking

* ***: checking

2023/03/16 14:11:56 INFO : Signal received: interrupt

2023/03/16 14:11:56 INFO : Saving Memory profile "/tmp/rclone-profile3"

2023/03/16 14:11:56 INFO : Exiting...

I also attach the text pprof profile:

File: rclone

Type: inuse_space

Time: Mar 16, 2023 at 2:11pm (CET)

Showing nodes accounting for 778.54MB, 96.26% of 808.76MB total

Dropped 138 nodes (cum <= 4.04MB)

flat flat% sum% cum cum%

382.03MB 47.24% 47.24% 382.03MB 47.24% github.com/rclone/rclone/fs.NewDir (inline)

281.02MB 34.75% 81.99% 297.54MB 36.79% github.com/aws/aws-sdk-go/private/protocol/xml/xmlutil.XMLToStruct

74.47MB 9.21% 91.19% 457.50MB 56.57% github.com/rclone/rclone/backend/s3.(*Fs).listDir.func1

12.01MB 1.48% 92.68% 12.01MB 1.48% strings.(*Builder).grow (inline)

7MB 0.87% 93.54% 7MB 0.87% strings.genSplit

7MB 0.87% 94.41% 7.52MB 0.93% encoding/xml.(*Decoder).rawToken

4.50MB 0.56% 94.97% 4.50MB 0.56% github.com/aws/aws-sdk-go/private/protocol/xml/xmlutil.(*XMLNode).findNamespaces (inline)

2.50MB 0.31% 95.27% 4.50MB 0.56% github.com/rclone/rclone/lib/encoder.ToStandardPath

2.50MB 0.31% 95.58% 8MB 0.99% github.com/rclone/rclone/backend/crypt.caseInsensitiveBase32Encoding.DecodeString

2.50MB 0.31% 95.89% 10.02MB 1.24% encoding/xml.(*Decoder).Token

1MB 0.12% 96.02% 767.55MB 94.90% github.com/rclone/rclone/backend/s3.(*Fs).list

1MB 0.12% 96.14% 14MB 1.73% github.com/rclone/rclone/backend/crypt.(*Cipher).decryptSegment

1MB 0.12% 96.26% 5MB 0.62% github.com/rfjakob/eme.Transform

0 0% 96.26% 303.05MB 37.47% github.com/aws/aws-sdk-go/aws/request.(*HandlerList).Run

0 0% 96.26% 303.05MB 37.47% github.com/aws/aws-sdk-go/aws/request.(*Request).Send

0 0% 96.26% 302.05MB 37.35% github.com/aws/aws-sdk-go/aws/request.(*Request).sendRequest

0 0% 96.26% 301.55MB 37.29% github.com/aws/aws-sdk-go/private/protocol/restxml.Unmarshal

0 0% 96.26% 300.54MB 37.16% github.com/aws/aws-sdk-go/private/protocol/xml/xmlutil.UnmarshalXML

0 0% 96.26% 301.04MB 37.22% github.com/aws/aws-sdk-go/service/s3.(*S3).ListObjectsV2WithContext

0 0% 96.26% 21MB 2.60% github.com/rclone/rclone/backend/crypt.(*Cipher).DecryptFileName

0 0% 96.26% 21MB 2.60% github.com/rclone/rclone/backend/crypt.(*Cipher).decryptFileName

0 0% 96.26% 769.05MB 95.09% github.com/rclone/rclone/backend/crypt.(*Fs).List

0 0% 96.26% 20.50MB 2.53% github.com/rclone/rclone/backend/crypt.(*Object).Remote

0 0% 96.26% 4.50MB 0.56% github.com/rclone/rclone/backend/local.(*Fs).List

0 0% 96.26% 767.55MB 94.90% github.com/rclone/rclone/backend/s3.(*Fs).List

0 0% 96.26% 383.04MB 47.36% github.com/rclone/rclone/backend/s3.(*Fs).itemToDirEntry

0 0% 96.26% 301.04MB 37.22% github.com/rclone/rclone/backend/s3.(*Fs).list.func1

0 0% 96.26% 767.55MB 94.90% github.com/rclone/rclone/backend/s3.(*Fs).listDir

0 0% 96.26% 301.04MB 37.22% github.com/rclone/rclone/backend/s3.(*v2List).List

0 0% 96.26% 15.50MB 1.92% github.com/rclone/rclone/fs.CompareDirEntries

0 0% 96.26% 15.50MB 1.92% github.com/rclone/rclone/fs.DirEntries.Less

0 0% 96.26% 303.05MB 37.47% github.com/rclone/rclone/fs.pacerInvoker

0 0% 96.26% 794.06MB 98.18% github.com/rclone/rclone/fs/list.DirSorted

0 0% 96.26% 19MB 2.35% github.com/rclone/rclone/fs/list.filterAndSortDir

0 0% 96.26% 794.06MB 98.18% github.com/rclone/rclone/fs/march.(*March).makeListDir.func1

0 0% 96.26% 4.50MB 0.56% github.com/rclone/rclone/fs/march.(*March).processJob.func1

0 0% 96.26% 789.55MB 97.63% github.com/rclone/rclone/fs/march.(*March).processJob.func2

0 0% 96.26% 4.50MB 0.56% github.com/rclone/rclone/lib/encoder.MultiEncoder.ToStandardPath (inline)

0 0% 96.26% 303.05MB 37.47% github.com/rclone/rclone/lib/pacer.(*Pacer).Call

0 0% 96.26% 303.05MB 37.47% github.com/rclone/rclone/lib/pacer.(*Pacer).call

0 0% 96.26% 5.35MB 0.66% runtime.doInit

0 0% 96.26% 5.85MB 0.72% runtime.main

0 0% 96.26% 15.50MB 1.92% sort.Stable

0 0% 96.26% 8MB 0.99% sort.insertionSort

0 0% 96.26% 15.50MB 1.92% sort.stable

0 0% 96.26% 7.50MB 0.93% sort.symMerge

0 0% 96.26% 12.01MB 1.48% strings.(*Builder).Grow (inline)

0 0% 96.26% 7MB 0.87% strings.Split (inline)

That doesn't seem like a lot at all.

How many objects are you dealing with?

This was a run I stopped early to get a profile quickly, following the instructions.

A previous run (also stopped before it finished) showed:

File: rclone

Type: inuse_space

Time: Mar 16, 2023 at 2:03pm (CET)

Showing nodes accounting for 5081.31MB, 96.71% of 5254.11MB total

Dropped 291 nodes (cum <= 26.27MB)

flat flat% sum% cum cum%

2608.74MB 49.65% 49.65% 2608.74MB 49.65% github.com/rclone/rclone/fs.NewDir (inline)

1864.68MB 35.49% 85.14% 1970.88MB 37.51% github.com/aws/aws-sdk-go/private/protocol/xml/xmlutil.XMLToStruct

495.88MB 9.44% 94.58% 3109.12MB 59.18% github.com/rclone/rclone/backend/s3.(*Fs).listDir.func1

33MB 0.63% 95.21% 33MB 0.63% github.com/aws/aws-sdk-go/private/protocol/xml/xmlutil.(*XMLNode).findNamespaces (inline)

25MB 0.48% 95.68% 66.20MB 1.26% encoding/xml.(*Decoder).Token

24MB 0.46% 96.14% 41.20MB 0.78% encoding/xml.(*Decoder).rawToken

17.50MB 0.33% 96.47% 40.51MB 0.77% github.com/rclone/rclone/lib/encoder.ToStandardPath

11MB 0.21% 96.68% 5176.05MB 98.51% github.com/rclone/rclone/backend/s3.(*Fs).list

1.50MB 0.029% 96.71% 42.01MB 0.8% github.com/rclone/rclone/lib/encoder.MultiEncoder.ToStandardPath (inline)

0 0% 96.71% 36.03MB 0.69% crypto/tls.(*Conn).HandshakeContext (inline)

0 0% 96.71% 36.03MB 0.69% crypto/tls.(*Conn).clientHandshake

0 0% 96.71% 36.03MB 0.69% crypto/tls.(*Conn).handshakeContext

0 0% 96.71% 35.03MB 0.67% crypto/tls.(*clientHandshakeStateTLS13).handshake

0 0% 96.71% 29.53MB 0.56% crypto/tls.(*clientHandshakeStateTLS13).readServerCertificate

0 0% 96.71% 2013.45MB 38.32% github.com/aws/aws-sdk-go/aws/request.(*HandlerList).Run

0 0% 96.71% 2013.45MB 38.32% github.com/aws/aws-sdk-go/aws/request.(*Request).Send

0 0% 96.71% 1999.93MB 38.06% github.com/aws/aws-sdk-go/aws/request.(*Request).sendRequest

0 0% 96.71% 1996.43MB 38.00% github.com/aws/aws-sdk-go/private/protocol/restxml.Unmarshal

0 0% 96.71% 1988.40MB 37.84% github.com/aws/aws-sdk-go/private/protocol/xml/xmlutil.UnmarshalXML

0 0% 96.71% 1995.42MB 37.98% github.com/aws/aws-sdk-go/service/s3.(*S3).ListObjectsV2WithContext

0 0% 96.71% 5176.05MB 98.51% github.com/rclone/rclone/backend/crypt.(*Fs).List

0 0% 96.71% 5176.05MB 98.51% github.com/rclone/rclone/backend/s3.(*Fs).List

0 0% 96.71% 2613.24MB 49.74% github.com/rclone/rclone/backend/s3.(*Fs).itemToDirEntry

0 0% 96.71% 1995.42MB 37.98% github.com/rclone/rclone/backend/s3.(*Fs).list.func1

0 0% 96.71% 5176.05MB 98.51% github.com/rclone/rclone/backend/s3.(*Fs).listDir

0 0% 96.71% 1995.42MB 37.98% github.com/rclone/rclone/backend/s3.(*v2List).List

0 0% 96.71% 2016.45MB 38.38% github.com/rclone/rclone/fs.pacerInvoker

0 0% 96.71% 5183.55MB 98.66% github.com/rclone/rclone/fs/list.DirSorted

0 0% 96.71% 5183.55MB 98.66% github.com/rclone/rclone/fs/march.(*March).makeListDir.func1

0 0% 96.71% 5179.05MB 98.57% github.com/rclone/rclone/fs/march.(*March).processJob.func2

0 0% 96.71% 2016.45MB 38.38% github.com/rclone/rclone/lib/pacer.(*Pacer).Call

0 0% 96.71% 2016.45MB 38.38% github.com/rclone/rclone/lib/pacer.(*Pacer).call

0 0% 96.71% 36.03MB 0.69% net/http.(*persistConn).addTLS.func2

There are millions of files to back up, but the number of files in each directory is quite reasonable. Is rclone's memory use expected to scale with the total number of files?

Also, I was able to upload all these files with rclone. It is only when I run a sync with almost no changes that it uses so much memory.

There are tons of posts about large numbers of objects and memory use:

Hi everyone,

I’ve been trying to sync a bucket from S3 to Wasabi with no success. There are some folders with a few hundred files (images, mostly) which do get synced successfully, but one folder in particular with about 6 millions images does not sync a single file even after hours running.

I feel I must be doing something wrong or not using all the recommended parameters for that kind of situation. Can anyone please give me a hint or point me to the right direction?

I have tried a lot of co…

What is the problem you are having with rclone?

I need to move 2.3 Millions of files from Oracle Cloud Gen1 (Swift) to Oracle Cloud Bucket Gen2 (S3 Compatible).

The commands Sync, Move and Copy don't start the transfer, how can I do that?

What is your rclone version (output from rclone version)

rclone v1.51.0

Which OS you are using and how many bits (eg Windows 7, 64 bit)

Oracle Linux 7.7 64bit

Which cloud storage system are you using? (eg Google Drive)

Oracle Cloud Gen 1 (Swift) to…

Hi all,

I am seeing a long idle time between submitting the copy command and start of the actual transfer. Source and Target are two different S3-compatible Cloud Object Storages. There are millions of tiny (few KB) keys with the specified source prefix. The destination bucket also contains millions of objects, but none share the prefix that I am trying to copy.

Delay before rclone uploads files, a newbie question looks similar, but my objects are tiny and calculation for the downloaded keys s…

Sorry, I am missing something here. In my case, no directory contains a large number of files.

ncw

March 16, 2023, 1:58pm


It should scale with the largest number of files in a single directory, at a rate of approximately 1 GB per 1,000,000 files.

So if you have 100,000,000 files but the largest number of files in a directory is 1,000,000, then rclone should use on the order of 1 GB of memory.
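A quick way to apply this rule of thumb: turn "approx 1 GB per 1,000,000 files" into roughly 1,000 bytes per directory entry and multiply by the size of the largest directory only. The constant is just that ratio, not a measured per-object cost, and `estimateBytes` is a hypothetical helper for illustration, not part of rclone.

```go
package main

import "fmt"

// bytesPerEntry is an assumption derived from the rule of thumb
// "approx 1 GB per 1,000,000 files", i.e. about 1,000 bytes per entry.
const bytesPerEntry = 1_000_000_000 / 1_000_000

// estimateBytes is a hypothetical helper: given the number of entries in
// each directory, it estimates memory from the largest directory alone,
// because only one directory's listing needs to be held at a time.
func estimateBytes(dirSizes []int64) int64 {
	var largest int64
	for _, n := range dirSizes {
		if n > largest {
			largest = n
		}
	}
	return largest * bytesPerEntry
}

func main() {
	// 100 directories of 1,000,000 files each: 100,000,000 files in total,
	// but no single directory holds more than 1,000,000 entries.
	dirs := make([]int64, 100)
	var total int64
	for i := range dirs {
		dirs[i] = 1_000_000
		total += dirs[i]
	}
	fmt.Printf("total files: %d, estimated memory: ~%.1f GB\n",
		total, float64(estimateBytes(dirs))/1e9)
}
```

The total of 100,000,000 files never enters the estimate, which is the point of the rule.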

The only really unusual flag you have there is --exclude-if-present .rclone-ignore - I wonder if that is causing the problem somehow.

Can you try a --dry-run sync without that flag and see if it uses a similar amount of memory?

So I ran it with --dry-run and without the exclude flag:

sudo rclone --config /root/.config/rclone/rclone.conf --retries 1 --ignore-errors sync --dry-run --links -P --memprofile=/tmp/rclone-profile5 /home idrive-e2-encrypted:backup-marelle-home

After around 30 minutes, rclone had checked 364143 files, and my memory use was again through the roof, blocking progress.

ncw

March 16, 2023, 9:13pm


Can you share your profile file with me? You could email it to nick@craig-wood.com or share it with that address - thanks.

So, it seems I might have solved my problem by using --fast-list. When I do, memory grows to ~2 GB but then stays constant, which is what I expected from my understanding of this option.

It seems that the default directory-by-directory listing (without --fast-list) has an issue with my config.

ncw

March 17, 2023, 7:52am


--fast-list should represent the worst case memory usage.

So it definitely sounds like a problem.

It would be nice to get to the bottom of it.

The --exclude-if-present flag still looks like the most likely suspect to me.

Ole

March 17, 2023, 8:45am


I didn't read all the details, but these lines in the log look odd to me:

I wouldn't expect duplicate directories in an object store (e.g. S3); that conflicts with my understanding of the data models of both S3 and rclone. The destination is a crypt remote with directory_name_encryption = false, which passes the directories directly to the underlying remote, S3 provided by IDrive. I vaguely remember an earlier issue where an S3 provider had a bug that would endlessly repeat the content of a directory listing, but I'm not sure if it was IDrive.

This may be the root cause, which is worked around when the directory listing is done in a single recursive sweep using --fast-list.
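To illustrate why a single recursive sweep could sidestep duplicated directory entries (still only a hypothesis at this point in the thread), here is a sketch assuming the sweep returns flat object keys with no delimiter, so directories are inferred from key prefixes and collected into a set. As far as I understand, this is roughly how a --fast-list listing on S3 yields directories, but treat that as an assumption; `dirsFromKeys` and the sample keys are hypothetical.

```go
package main

import (
	"fmt"
	"path"
	"sort"
)

// dirsFromKeys infers the directories implied by a flat list of object
// keys. The result is built in a map, so each directory appears once no
// matter how many keys (or repeated keys) live under it.
func dirsFromKeys(keys []string) []string {
	seen := make(map[string]struct{})
	for _, k := range keys {
		// Walk up from the key's parent until reaching the root.
		for d := path.Dir(k); d != "." && d != "/"; d = path.Dir(d) {
			seen[d] = struct{}{}
		}
	}
	dirs := make([]string, 0, len(seen))
	for d := range seen {
		dirs = append(dirs, d)
	}
	sort.Strings(dirs)
	return dirs
}

func main() {
	// Hypothetical keys; the last one is repeated on purpose, yet the
	// inferred directory set stays unique.
	keys := []string{"a/b/file1", "a/b/file2", "a/c/file3", "a/c/file3"}
	for _, d := range dirsFromKeys(keys) {
		fmt.Println(d)
	}
}
```

Running it prints `a`, `a/b` and `a/c`, each once.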

ncw

March 17, 2023, 10:45am


Very good thinking @Ole - it was Alibaba S3.

That could also explain why --fast-list doesn't trigger the problem.

I wonder if IDrive doesn't support v2 listing properly or there is a bug in it.

@Benjamin_L can you try your sync without --fast-list but with --s3-list-version 1?

If that does fix the problem, can you do your sync as you did originally, but with -vv --dump responses. This will generate a lot of output, but if my theory is correct you'll notice a GET request for the listing which keeps repeating. If you could paste that GET request and the response here then we'll see exactly what is up.

I had already tried --s3-list-version and didn't see any difference. I just retried it:

# rclone sync . --s3-list-version 1 --dry-run idrive-e2-ro:backup-marelle-home

2023/03/18 08:53:35 NOTICE: rclone.conf.bak: Skipped copy as --dry-run is set (size 7.865Ki)

2023/03/18 08:53:35 NOTICE: rclone.conf: Skipped copy as --dry-run is set (size 5.973Ki)

2023/03/18 08:53:50 NOTICE: HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/byCKYO_upq2vS5kGAVHr0u4fXIbbUgxnyZcXMfkIL7s: Duplicate directory found in destination - ignoring

2023/03/18 08:53:51 NOTICE: HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1mr2_NY7W1NjQBZqeHjhVQ/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/cPQRzwpsoVkzqzAJqdzj_Q: Duplicate directory found in destination - ignoring

2023/03/18 08:53:53 NOTICE: HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/j9nuVmoWSwVb6__vumZyeQ/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/ed47H9gW6IsyYBS_5ZPMTXSyPF5Jtj-HRGgGn1u7vcM: Duplicate directory found in destination - ignoring

The duplicate directory messages are still there. I do not see them with --fast-list.

Ole

March 18, 2023, 5:00pm

Hmm, it seems like something odd is going on here. I think we need to narrow down both the data and the possibilities to get a clearer picture.

First, let's see if you can reproduce this on a subfolder. Are you able to reproduce it with one of these commands (preferably the last)?

rclone sync --dry-run ./ idrive-e2-ro:backup-marelle-home/HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/

rclone sync --dry-run ./ idrive-e2-ro:backup-marelle-home/HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/

If so, then let's see if we can list/find one of the duplicates using the simpler rclone lsf command piped into sort | uniq -cd (assuming it reproduces with the last command above):

rclone lsf -R idrive-e2-ro:backup-marelle-home/HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/ | sort | uniq -cd

If so, then try the same command with -vv --dump responses, as described by Nick here:

It may also help to know the number of entries listed to decide on the best next steps. That can be found like this:

rclone lsf -R idrive-e2-ro:backup-marelle-home/HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/ | wc -l

Finally, I note that you are using the remote idrive-e2-ro; I assume it is identical to idrive-e2 above. If not, please post the redacted config.

So the second one does reproduce the issue:
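The `sort | uniq -cd` step suggested above can also be approximated in Go, for example on a machine without GNU coreutils. This sketch counts identical lines from standard input and prints only those seen more than once, with their counts. A map does the counting, so no prior sort is needed (unlike `uniq`, which only compares adjacent lines); the output is sorted only to make it stable. `countDuplicates` and the file name `dups.go` are hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"sort"
)

// countDuplicates returns every line that occurs more than once,
// mapped to its number of occurrences (like `sort | uniq -cd`).
func countDuplicates(lines []string) map[string]int {
	counts := make(map[string]int)
	for _, l := range lines {
		counts[l]++
	}
	for l, n := range counts {
		if n < 2 {
			delete(counts, l) // deleting during range is safe in Go
		}
	}
	return counts
}

func main() {
	var lines []string
	sc := bufio.NewScanner(os.Stdin)
	sc.Buffer(make([]byte, 0, 64*1024), 1024*1024) // allow long paths
	for sc.Scan() {
		lines = append(lines, sc.Text())
	}
	if err := sc.Err(); err != nil {
		fmt.Fprintln(os.Stderr, "read error:", err)
		os.Exit(1)
	}
	dups := countDuplicates(lines)
	keys := make([]string, 0, len(dups))
	for l := range dups {
		keys = append(keys, l)
	}
	sort.Strings(keys)
	for _, l := range keys {
		fmt.Printf("%7d %s\n", dups[l], l) // layout similar to uniq -c
	}
}
```

Usage would be something like `rclone lsf -R remote:path | go run dups.go`; empty output means no duplicated entries.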

~# rclone sync --dry-run /tmp/empty/ idrive-e2-ro:backup-marelle-home/HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/ 2>&1 | grep -v Skipped

2023/03/18 21:13:46 NOTICE: byCKYO_upq2vS5kGAVHr0u4fXIbbUgxnyZcXMfkIL7s: Duplicate directory found in destination - ignoring

2023/03/18 21:13:48 NOTICE:

Transferred: 0 B / 0 B, -, 0 B/s, ETA -

Checks: 8548 / 8548, 100%

Deleted: 8548 (files), 0 (dirs)

Elapsed time: 1.6s

The lsf command does not show any duplicates.

The number of files is around 10k:

# rclone lsf -R idrive-e2-ro:backup-marelle-home/HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/ | wc -l

10156

Running the sync with a dump of the responses gives this log: Data package from March 18th. - FileTransfer.io

About idrive-e2-ro, here is the config:

[idrive-e2-ro]

type = s3

provider = IDrive

access_key_id = ***

secret_access_key = ***

endpoint = k5a8.par.idrivee2-45.com

acl = private

memory_pool_use_mmap = true

The only difference is that the access token can only access a single bucket, and is read-only.

Ole

March 18, 2023, 10:12pm


Perfect Benjamin!

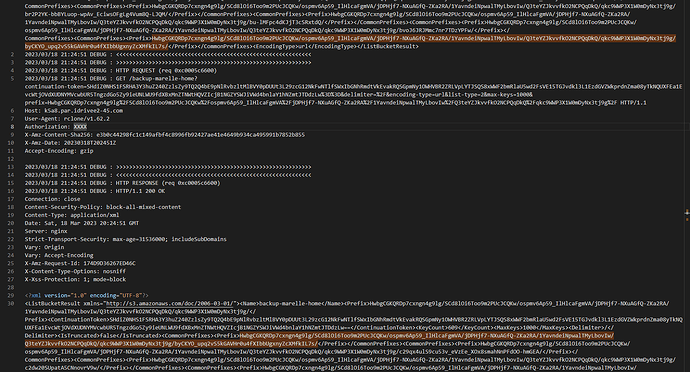

It looks like there is an off-by-one error in the data returned by IDrive, where the directory on the boundary of a continued list response is repeated in the continuation response, as you can see here:

It could be a somewhat random issue that only (sometimes) happens with long folder/file names, where the response contains fewer than the maximum of 1000 list items. I'm guessing this from the <KeyCount>609</KeyCount><MaxKeys>1000</MaxKeys> sequence at the beginning of the continuation response and the relatively few occurrences.

It is odd, however, that it apparently doesn't happen when using --fast-list or rclone lsf.

@ncw It seems like I am missing something; does this make sense to you?
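To check a --dump responses log for this pattern without reading it by eye, one could decode each listing page and flag any CommonPrefixes entry that already appeared on an earlier page. This sketch assumes the standard S3 ListObjectsV2 XML element names (`ListBucketResult`, `CommonPrefixes`, `Prefix`); the two sample pages are made up to mimic the boundary repeat described above, not copied from Benjamin's log, and `repeatedPrefixes` is a hypothetical helper.

```go
package main

import (
	"encoding/xml"
	"fmt"
)

// listPage keeps only the common prefixes ("directories") of an S3
// ListObjectsV2 response. Tags without a namespace match the S3 namespace.
type listPage struct {
	Prefixes []struct {
		Prefix string `xml:"Prefix"`
	} `xml:"CommonPrefixes"`
}

// repeatedPrefixes decodes the pages in order and reports every common
// prefix that was already returned by an earlier page.
func repeatedPrefixes(pages []string) ([]string, error) {
	seen := make(map[string]int) // prefix -> index of first page containing it
	var repeats []string
	for i, raw := range pages {
		var p listPage
		if err := xml.Unmarshal([]byte(raw), &p); err != nil {
			return nil, fmt.Errorf("page %d: %w", i, err)
		}
		for _, cp := range p.Prefixes {
			if first, ok := seen[cp.Prefix]; ok && first != i {
				repeats = append(repeats, cp.Prefix)
				continue
			}
			seen[cp.Prefix] = i
		}
	}
	return repeats, nil
}

func main() {
	// Made-up pages: "d/0002/" ends page 0 and starts the continuation page.
	pages := []string{
		`<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
<CommonPrefixes><Prefix>d/0001/</Prefix></CommonPrefixes>
<CommonPrefixes><Prefix>d/0002/</Prefix></CommonPrefixes></ListBucketResult>`,
		`<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
<CommonPrefixes><Prefix>d/0002/</Prefix></CommonPrefixes>
<CommonPrefixes><Prefix>d/0003/</Prefix></CommonPrefixes></ListBucketResult>`,
	}
	repeats, err := repeatedPrefixes(pages)
	if err != nil {
		panic(err)
	}
	fmt.Println("repeated across pages:", repeats)
}
```

With these sample pages it prints `repeated across pages: [d/0002/]`.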

ncw

March 20, 2023, 10:50am


Yes that looks correct @Ole

We see this directory

HwbgCGKQRDp7cxngn4g9lg/SCd8lOi6Too9m2PUcJCQKw/ospmv6Ap59_IlHlcaFgmVA/jDPHjf7-NXuAGfQ-ZKa2RA/1YavndeiNpwalTMyLbovIw/Q3teYZJkvvfkO2NCPQqDkQ/qkc9WWP3X1W0mDyNx3tj9g/byCKYO_upq2vS5kGAVHr0u4fXIbbUgxnyZcXMfkIL7s/

in the CommonPrefixes of two responses. I think this might be causing rclone to use too much memory as it is recursing into both directories, but I'm not 100% sure about that.
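The recursion hypothesis can be illustrated with a deliberately exaggerated toy model. This is an assumption, not rclone's actual march code: whether rclone really recurses into both copies is exactly what is uncertain here. If a walker recurses into every returned subdirectory and the lister repeats one entry per directory, the repeated subtrees get listed again, and the repeats multiply level by level. The tree shape, `buggyList` and `walk` are hypothetical.

```go
package main

import "fmt"

// buggyList is a hypothetical lister for a synthetic tree where every
// directory has `fanout` subdirectories down to `depth` levels. To mimic
// the boundary bug, it returns the last subdirectory twice.
func buggyList(dir string, level, depth, fanout int) []string {
	if level == depth || fanout == 0 {
		return nil
	}
	var subs []string
	for i := 0; i < fanout; i++ {
		subs = append(subs, fmt.Sprintf("%s/%d", dir, i))
	}
	return append(subs, subs[len(subs)-1]) // repeated boundary entry
}

// walk lists dir, recurses into each returned subdirectory and returns the
// total number of listing calls. With dedup=true, names already seen in
// the same listing are skipped before recursing.
func walk(dir string, level, depth, fanout int, dedup bool) int {
	calls := 1
	seen := make(map[string]bool)
	for _, sub := range buggyList(dir, level, depth, fanout) {
		if dedup {
			if seen[sub] {
				continue
			}
			seen[sub] = true
		}
		calls += walk(sub, level+1, depth, fanout, dedup)
	}
	return calls
}

func main() {
	const depth, fanout = 3, 2
	fmt.Println("listings without dedup:", walk("root", 0, depth, fanout, false))
	fmt.Println("listings with dedup:   ", walk("root", 0, depth, fanout, true))
}
```

With three levels and two subdirectories each, this prints 40 listings without deduplication versus 15 with it. The real bug only hit a few boundaries, so the actual overhead would be far smaller than in this toy.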

I'll email my contacts at iDrive and see what they say.


ncw

March 22, 2023, 10:13am

I've heard back from the IDrive team that they've fixed this problem. It will be rolling out to all regions in a few days.

So @Benjamin_L, could you re-test this in a few days and see if it solves the problem? Thanks. In particular, you shouldn't get any "Duplicate directory found in destination" messages once the fix has been rolled out. I'm hoping this will solve the excess memory use too, but I'm not 100% sure it will.


Benjamin_L

March 22, 2023, 10:15am

Thanks. I'll retry in a week or so without --fast-list and report back.

So, this is much better, and I can run a full backup without exploding my memory. I still see some duplicate entries, though far fewer than before.

Thanks!
