Hello, so I just tried

ps -ef | grep rclone

and I got this back

dolores+ 11521 34357 0 14:21 pts/249 00:00:00 grep rclone

dolores+ 31584 1 0 14:14 ? 00:00:00 rclone mount -vvv --allow-other --allow-non-empty gcache: /home5/doloresh4ze/gcache

dolores+ 39404 1 8 Oct08 ? 1-12:33:26 rclone mount -vvv --allow-other --allow-non-empty gcache: /home5/doloresh4ze/gcache

dolores+ 47935 1 11 Oct18 ? 21:33:03 rclone mount -vvv --allow-other --allow-non-empty gcache: /home5/doloresh4ze/gcache

Sonarr is not importing my files right now, and this is the error it reports:

Couldn't import episode /downloads/completed/Series/stephen.colbert.2018.10.25.gayle.king.1080p.web.x264-tbs-RakuvZOiDSEN/03ea7761248548a783c5ae6104908a2d.mkv: Invalid handle to path ""

What’s going on?

I also just tried moving a file manually (via ssh) to gcache and I got this message back

doloresh4ze@lw702:~/.local/bin$ mv "IASIP - I am going into the crevice-8w0ZeihFqc8.mp4" /home5/doloresh4ze/gcache/Media/

mv: failed to close '/home5/doloresh4ze/gcache/Media/IASIP - I am going into the crevice-8w0ZeihFqc8.mp4': Input/output error

Looks like you have 3 different mounts going on.

You’d want to kill all 3, make sure everything is stopped, and then remount.

Normally, you’d have 1 rclone process going on.

@Animosity022

This will sound stupid but… how do I kill the mounts? Because I’ve been using

fusermount -uz /home5/doloresh4ze/gcache/

then manually delete the cache.db in

/home5/doloresh4ze/.cache/rclone/cache-backend/

and then mount again with

rclone mount -vvv --allow-other --allow-non-empty gcache: /home5/doloresh4ze/gcache

But apparently this left me with 3 mounts running! So how do I really kill them?!

You can keep using fusermount, then check with the ps command whether the rclone process still exists.

If it is still there:

felix@gemini:~$ ps -ef | grep rclone

felix 578 1 0 Oct15 ? 01:53:40 /usr/bin/rclone mount gcrypt: /GD --allow-other --bind 192.168.1.30 --buffer-size 1G --dir-cache-time 72h --drive-chunk-size 32M --fast-list --log-level INFO --log-file /home/felix/logs/rclone.log --umask 002 --vfs-read-chunk-size 128M --vfs-read-chunk-size-limit off

and in my case, I’d do:

kill 578

which is the process ID I’d want to kill. You can put multiple process IDs and just separate them with spaces or do multiple commands.
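The check-then-kill steps above can be sketched as a small shell helper. This is only a sketch: `rclone_mount_pids` is a hypothetical function name (not part of rclone), and it assumes the usual `ps -ef` column layout, where the PID is column 2 and the command starts at column 8.

```shell
#!/bin/sh
# Sketch only: rclone_mount_pids is a hypothetical helper, not an rclone command.
# Assumes the usual `ps -ef` layout: UID PID PPID C STIME TTY TIME CMD.

# Reads `ps -ef` output on stdin and prints the PID (column 2) of every line
# whose command (column 8) is rclone and whose first argument is "mount".
# The "grep rclone" line is skipped because its command is grep, not rclone.
rclone_mount_pids() {
    awk '($8 == "rclone" || $8 ~ /\/rclone$/) && $9 == "mount" { print $2 }'
}

# Usage (not run here):
#   fusermount -uz /home5/doloresh4ze/gcache
#   ps -ef | rclone_mount_pids            # anything printed is still running
#   kill $(ps -ef | rclone_mount_pids)    # only if the line above printed PIDs
```

If the second command prints nothing, there is no leftover rclone mount process and nothing needs to be killed.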

@Animosity022 fusermounted, killed everything, mounted back up, root folder is accessible, etc - but sonarr still isn’t importing! Very strange

Still getting the input/output error when trying to manually (sudo mv file_name gcache_folder) move something to gcache too. mmmhm

I’d take a step back and stop Sonarr/Radarr/etc.

If you unmount/stop everything, you should see nothing in the mount point if you do an ls against it.

Once you validate that everything is stopped and you are in a clean state, run the rclone mount command again.

Don’t start up anything else. Do an ls on the directory and validate that you are seeing files in there.

Then do a test cp of something small. I normally cp /etc/hosts into my mount to make sure everything works.

Once that works, slowly start to turn things on and validate what’s going on.
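The clean-state check and the small test copy could be scripted roughly like this. It is a minimal sketch: `mount_point_is_empty` and `smoke_test_mount` are hypothetical helper names, and the usage comments reuse the mount point and mount command quoted earlier in the thread.

```shell
#!/bin/sh
# Sketch only: both function names are hypothetical helpers.

# Succeeds when the directory exists and has no entries (dotfiles included).
# After unmounting, the mount point should pass this check.
mount_point_is_empty() {
    [ -d "$1" ] && [ -z "$(ls -A "$1")" ]
}

# Copies a small file (default /etc/hosts) into the given directory, compares
# the copy with the original, removes the copy, and returns the result.
smoke_test_mount() {
    src=${2:-/etc/hosts}
    dest="$1/rclone_smoke_test.$$"
    cp "$src" "$dest" && cmp -s "$src" "$dest"
    status=$?
    rm -f "$dest"
    return "$status"
}

# Usage (not run here), with Sonarr/Radarr/etc. stopped first:
#   mp=/home5/doloresh4ze/gcache
#   fusermount -uz "$mp"
#   mount_point_is_empty "$mp" || echo "mount point is not clean yet"
#   rclone mount -vvv --allow-other --allow-non-empty gcache: "$mp" &
#   ls "$mp"
#   smoke_test_mount "$mp" && echo "writes work"
```

Only once the test copy succeeds is it worth starting the other applications again, one at a time.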

@Animosity022 tried to cp something while leaving the debug terminal open, and I got this (just a small part of the output). The file DIDN’T copy:

2018/10/26 16:09:57 DEBUG : pacer: Rate limited, sleeping for 1.033612815s (1 consecutive low level retries)

2018/10/26 16:09:57 DEBUG : pacer: low level retry 1/1 (error googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded)

2018/10/26 16:09:57 ERROR : Media/test_rclone.txt: put: error uploading: googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded

2018/10/26 16:09:57 DEBUG : Media/test_rclone.txt: Received error: googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded - low level retry 9/10

2018/10/26 16:09:57 DEBUG : Cache remote gcache:: put data at 'Media/test_rclone.txt'

2018/10/26 16:09:58 DEBUG : pacer: Resetting sleep to minimum 10ms on success

2018/10/26 16:09:59 DEBUG : pacer: Rate limited, sleeping for 1.067734349s (1 consecutive low level retries)

2018/10/26 16:09:59 DEBUG : pacer: low level retry 1/1 (error googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded)

2018/10/26 16:09:59 ERROR : Media/test_rclone.txt: put: error uploading: googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded

2018/10/26 16:09:59 ERROR : Media/test_rclone.txt: Failed to copy: googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded

2018/10/26 16:09:59 ERROR : Media/test_rclone.txt: WriteFileHandle.New Rcat failed: googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded

2018/10/26 16:09:59 ERROR : Media/test_rclone.txt: WriteFileHandle.Release error: googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded

2018/10/26 16:09:59 DEBUG : &{Media/test_rclone.txt (w)}: >Release: err=googleapi: Error 403: User rate limit exceeded., userRateLimitExceeded

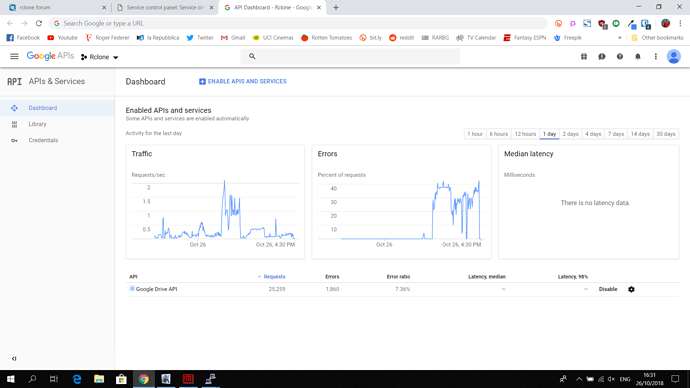

Are you using your own API Key or the standard rclone? Are you able to create your own?

Up to this point I thought my own, but this error is giving me doubts now!

Could it be the 750GB daily upload limit instead of an API calls problem? I downloaded a lot of stuff yesterday and last night, but I don’t know if I reached the upload limit! I need to check the cache remote in rclone, correct?

@Animosity022 my own I think

And I can stream from Plex - so it shouldn’t be an API issue! It’s probably an upload issue then?

Yes, you can only upload 750GB in a day. I’ve heard various claims about when that resets, but I don’t upload nearly that much in a day anymore.

From that message, you are definitely using your own key if you can see it in the API dashboard.

I do not recall what the error message looks like if you hit the daily upload limit.
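One way to narrow this down is to count how many of the 403 errors in a saved debug log come from uploads. The following is a rough sketch: both function names are hypothetical, the patterns are taken from the log lines quoted earlier in this thread, and nothing here confirms that this particular message is what the daily upload limit produces.

```shell
#!/bin/sh
# Sketch only: both function names are hypothetical helpers. The patterns
# match the log lines quoted in this thread, not a documented rclone format.

# Prints how many ERROR lines in the log mention userRateLimitExceeded.
count_rate_limit_errors() {
    grep -c 'ERROR .*userRateLimitExceeded' "$1"
}

# Prints how many of those errors were reported while uploading a file.
count_upload_errors() {
    grep -c 'error uploading: .*userRateLimitExceeded' "$1"
}

# Usage (not run here), assuming the mount was started with
# --log-file /home/felix/logs/rclone.log as in the example command above:
#   count_rate_limit_errors /home/felix/logs/rclone.log
#   count_upload_errors /home/felix/logs/rclone.log
```

If reads such as streaming still work while every upload fails with this error, that points toward an upload-side limit rather than the API key, which is the working assumption in this thread.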

Must’ve been the upload limit… it’s working now!

Thanks!