cberni

September 6, 2021, 2:55am

1

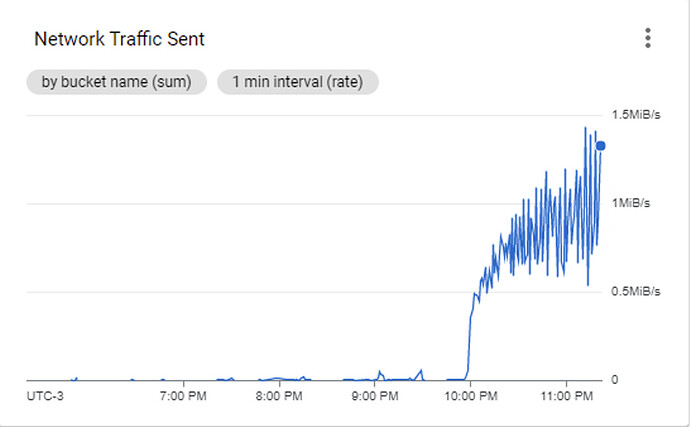

rclone copy from local to remote incurs download charges on the remote

I have about 4 TB locally and a full copy on Google Cloud Storage. The copy command runs once a day to copy only the previous day's production, but this copy command is incurring remote download charges.

Why is it downloading data from the remote backend throughout the rclone copy from local to remote, when most of the time it is just excluding files?

Output of rclone version:

rclone v1.56.0

Storage system: Google Cloud Storage

The command being run:

rclone copy /local remote:REMOTE --include-from "/include-file.txt" --max-age 2021-09-05 -vv

Config file (/rclone.conf):

[remote]

type = google cloud storage

service_account_file = key.json

Log output with the -vv flag:

2021/09/05 21:57:17 DEBUG : --max-age 1.039787137250301d to 2021-09-04 21:00:00.000046545 -0300 -03 m=-89837.540811688

2021/09/05 21:57:17 DEBUG : rclone: Version "v1.56.0" starting with parameters ["rclone" "copy" "/local" "remote:REMOTE" "--include-from" "/include-file.txt" "--max-age" "2021-09-05" "-vv"]

2021/09/05 21:57:17 DEBUG : Creating backend with remote "/local"

2021/09/05 21:57:17 DEBUG : Using config file from "/rclone.conf"

2021/09/05 21:57:17 DEBUG : Creating backend with remote "remote:hsasaude"

...

(lots of files excluded because they are older than the last day)

...

(the last day's files are copied)

ncw

September 6, 2021, 9:22am


What is in your include-from file? Maybe that could be tightened up?

cberni

September 6, 2021, 2:26pm


Inside the file I have this:

With the command "rclone copy /local remote:REMOTE", it will get all /local/data/** files, right?

Or will it get /others/data/** files too?

cberni

September 6, 2021, 4:00pm


Looking at the debug output, the files seem to be right.

But it seems to download data while excluding files...

I also have a union mount (/union) combining /local and remote:REMOTE, but that should not be a problem because the copy is run from /local only.

ncw

September 13, 2021, 10:01am


cberni:

with the command "rclone copy /local remote:REMOTE" will get all /local/data/** files, right?

or will it get /others/data/** files too?

No, just /data/**

Is the output in dated folders? Maybe you could specifically include those?

One thing you could try is a top-up sync, where you use rclone copy with --max-age to copy only the newer files. This works well for eliminating directory scanning.
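A minimal sketch of such a top-up copy as a shell function, assuming the paths, remote name and filter file from the first post. SRC, DST, FILTER and MAX_AGE are illustrative variable names, not rclone settings, and the 25h window is an assumption chosen to leave some overlap between daily runs.

```shell
#!/bin/sh
# Daily "top-up" copy: only local files modified within MAX_AGE are
# candidates for upload. SRC, DST and FILTER are placeholders based on
# the command in the first post; adjust them to your setup.
SRC="/local"
DST="remote:REMOTE"
FILTER="/include-file.txt"
# 25h rather than 24h leaves some overlap if a run starts late; files that
# are already identical on the remote are skipped by rclone copy anyway.
MAX_AGE="25h"

topup_copy() {
    # Extra flags (for example -vv or --dry-run) are passed straight through.
    rclone copy "$SRC" "$DST" \
        --include-from "$FILTER" \
        --max-age "$MAX_AGE" \
        "$@"
}
```

For example, topup_copy --dry-run -vv shows what would be uploaded without transferring anything. --max-age also accepts an absolute date such as 2021-09-05, as in the original command.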

cberni

September 14, 2021, 4:06pm


Yes, I'm already using --max-age, as shown in the command in my first post.

The problem still remains. I don't understand what could be downloaded from the backend to local during an rclone copy from local to the backend.

The -vv log also seems to be all right.

ncw

September 15, 2021, 2:36pm


Rclone has to download directory listings. That is likely the traffic.

If you want to see the traffic then use -vv --dump headers which might give you a clue.
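One rough way to quantify that traffic is to write the dump to a file (for example by adding --log-file rclone.log to the copy command) and summarise it. The helper below is a sketch and summarise_log is a hypothetical name: it counts HTTP REQUEST lines, counts Google Cloud Storage JSON API object listings (GET /storage/v1/b/BUCKET/o?..., like the requests in the logs in this thread), and adds up the Content-Length of responses where the server sent that header.

```shell
#!/bin/sh
# Summarise an rclone log written with: -vv --dump headers --log-file FILE
summarise_log() {
    log="$1"
    # Every dumped request starts with an "HTTP REQUEST (req 0x...)" line.
    total=$(grep -c 'HTTP REQUEST' "$log")
    # Object listings on the GCS JSON API look like
    # "GET /storage/v1/b/BUCKET/o?alt=json&...".
    listings=$(grep -c 'GET /storage/v1/b/[^/]*/o?' "$log")
    # Sum Content-Length headers that appear inside response dumps only.
    bytes=$(awk '
        /HTTP RESPONSE/ { inresp = 1; next }
        /HTTP REQUEST/  { inresp = 0; next }
        inresp && /^Content-Length:/ { sum += $2 }
        END { print sum + 0 }
    ' "$log")
    printf 'requests=%s listings=%s response_bytes=%s\n' "$total" "$listings" "$bytes"
}
```

Running summarise_log rclone.log after a copy gives a quick view of how much of the traffic is listing rather than uploading.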


cberni

September 21, 2021, 3:41am


The -vv --dump headers log is below. There are lots and lots of HTTP requests and responses.

There are millions of files being excluded, and the requests and responses happen while those files are being excluded. Isn't it supposed to make HTTP requests only when transferring a file?

It spends about 1 hour excluding files and 10 minutes transferring new files. Is there a way to avoid downloading data from the backend when excluding files?

2021/09/20 22:52:27 DEBUG : >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

2021/09/20 22:52:27 DEBUG : HTTP REQUEST (req 0xc0006fe600)

2021/09/20 22:52:27 DEBUG : GET /storage/v1/b/BUCKET/o?alt=json&delimiter=%2F&maxResults=1000&prefix=data%2Farchive%2F2021%2F9%2F9%2F&prettyPrint=false HTTP/1.1

Host: storage.googleapis.com

User-Agent: rclone/v1.56.0

Authorization: XXXX

X-Goog-Api-Client: gl-go/1.16.5 gdcl/20210406

Accept-Encoding: gzip

2021/09/20 22:52:27 DEBUG : >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

2021/09/20 22:52:27 DEBUG : <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

2021/09/20 22:52:27 DEBUG : HTTP RESPONSE (req 0xc000189a00)

2021/09/20 22:52:27 DEBUG : HTTP/2.0 200 OK

Content-Length: 868

Alt-Svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000,h3-T051=":443"; ma=2592000,h3-Q050=":443"; ma=2592000,h3-Q046=":443"; ma=2592000,h3-Q043=":443"; ma=2592000,quic=":443"; ma=2592000; v="46,43"

Cache-Control: private, max-age=0, must-revalidate, no-transform

Content-Type: application/json; charset=UTF-8

Date: Tue, 21 Sep 2021 02:52:27 GMT

Expires: Tue, 21 Sep 2021 02:52:27 GMT

Server: UploadServer

Vary: Origin

Vary: X-Origin

X-Guploader-Uploadid: ADPycduka-dDsSwHESpNi8yi7tcpYNXDcOjCxNPauIGx3-EKTUaEmThxT5cPn82joaIikmtIwY93m-PG8cCWDk7dbLw

2021/09/20 22:52:27 DEBUG : <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

ncw

September 21, 2021, 9:30am


What it is doing there is listing the directories. If you have a lot of directories then this can cause a lot of traffic.

If you have enough memory, then using --fast-list will speed it up enormously, I think.


cberni

September 21, 2021, 4:32pm


Thanks a lot! There are far fewer calls with --fast-list, but there are still many calls, like in the log below.

Why are there requests and responses while files are being excluded?

2021/09/21 12:11:23 DEBUG : >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

2021/09/21 12:11:23 DEBUG : HTTP REQUEST (req 0xc000294600)

2021/09/21 12:11:23 DEBUG : GET /storage/v1/b/BUCKET/o?alt=json&maxResults=1000&pageToken=CjhwYWNzLWRhdGEvYXJjaGl2ZS8yMDE2LzEwLzEvOS8zREFGRjdDRC9DNDgxQUVDQy9GNTU1Njc4QQ%3D%3D&prefix=&prettyPrint=false HTTP/1.1

Host: storage.googleapis.com

User-Agent: rclone/v1.56.0

Authorization: XXXX

X-Goog-Api-Client: gl-go/1.16.5 gdcl/20210406

Accept-Encoding: gzip

2021/09/21 12:11:23 DEBUG : >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

2021/09/21 12:11:24 DEBUG : <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

2021/09/21 12:11:24 DEBUG : HTTP RESPONSE (req 0xc000294600)

2021/09/21 12:11:24 DEBUG : HTTP/2.0 200 OK

Content-Length: 966098

Alt-Svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000,h3-T051=":443"; ma=2592000,h3-Q050=":443"; ma=2592000,h3-Q046=":443"; ma=2592000,h3-Q043=":443"; ma=2592000,quic=":443"; ma=2592000; v="46,43"

Cache-Control: private, max-age=0, must-revalidate, no-transform

Content-Type: application/json; charset=UTF-8

Date: Tue, 21 Sep 2021 16:11:24 GMT

Expires: Tue, 21 Sep 2021 16:11:24 GMT

Server: UploadServer

Vary: Origin

Vary: X-Origin

X-Guploader-Uploadid: ADPycdskIHzpDgtbElrjaQTXqc3E0kyOB-reIK_N2jD4fPOdg5ndbMid_HSurbO4Pz4XJaeGj6h1higqw9SQ8gzvw98

2021/09/21 12:11:24 DEBUG : <<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

2021/09/21 12:28:35 DEBUG : data/archive/2018/2/1/14/3EB3097E/3EB30982/33523B09: Excluded from sync (and deletion)

2021/09/21 12:28:35 DEBUG : data/archive/2018/2/1/14/3EB3097E/3EB30982/33523B0A: Excluded from sync (and deletion)

2021/09/21 12:28:35 DEBUG : data/archive/2018/2/1/14/3EB3097E/3EB30982/33523B0B: Excluded from sync (and deletion)

cberni

September 21, 2021, 4:56pm


I think I found the problem...

I'm using rclone copy, so why am I getting the message "Excluded from sync (and deletion)"?

It's reading all the files on the remote backend. Is there no way to just copy new files from local to remote?

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/626AC4D1: Excluded from sync (and deletion)

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/EAEDD487: Excluded from sync (and deletion)

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/EAEDD488: Excluded from sync (and deletion)

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/EAEDD489: Excluded from sync (and deletion)

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/EAEDD48A: Excluded from sync (and deletion)

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/EAEDD48B: Excluded from sync (and deletion)

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/EAEDD48C: Excluded from sync (and deletion)

2021/09/21 12:46:42 DEBUG : data/archive/2016/10/18/9/625CCCAA/F5FAC885/EAEDD48D: Excluded from sync (and deletion)

cberni

September 22, 2021, 12:59am


I'm testing here, and "Excluded from sync (and deletion)" is shown when using --include-from or --max-age; I'm using both.

It seems to be reading all the files on the backend (millions) that are no longer local, just to report that they are excluded.

Is there a way to run rclone copy with --include-from and --max-age so that it doesn't check files that exist only on the backend (since this is a copy, not a sync)?
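To see where the excluded entries come from, a small helper can group the "Excluded from sync" lines of a -vv log by path prefix. This is a sketch: excluded_by_prefix is a hypothetical helper name, and because rclone may log this message for entries from either the source or the destination listing, the counts cover both sides.

```shell
#!/bin/sh
# Group "Excluded from sync" lines from an rclone -vv log by path prefix.
# $1: log file; $2: number of leading path components to keep (default 3).
excluded_by_prefix() {
    log="$1"
    depth="${2:-3}"
    grep 'Excluded from sync' "$log" |
        sed -e 's/^.* DEBUG : //' -e 's/: Excluded from sync.*$//' |
        cut -d/ -f1-"$depth" |
        sort | uniq -c | sort -rn
}
```

On logs like the ones above, excluded_by_prefix rclone.log 3 would show how many excluded entries fall under data/archive/2016, data/archive/2018 and so on, which makes it easy to spot old directories that exist only on the remote.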

cberni

September 22, 2021, 1:21am


--no-traverse did the job. Thanks a lot.

ncw

September 23, 2021, 10:53am


Yes --no-traverse means that rclone will try to find each file individually.

This is usually less efficient than not using it with Google Drive, but whether it is or isn't depends on the pattern of updated files. If you have lots of files being updated in one directory, then --no-traverse is a loss, whereas if you have files being updated all over the place, it can be a win.
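Since the best choice depends on how the updated files are spread out, one way to decide is to measure. The sketch below runs the same copy as a --dry-run in each listing mode and counts the HTTP REQUEST lines that --dump headers writes to the log. requests_for and compare_modes are hypothetical helper names, and the paths are placeholders from the first post. A dry run still performs the listings and per-file checks, but its counts may differ somewhat from a real run because no uploads are made.

```shell
#!/bin/sh
# Count HTTP requests made by a dry run of the daily copy in three modes.
# SRC, DST, FILTER and MAX_AGE are placeholders; adjust them to your setup.
SRC="/local"
DST="remote:REMOTE"
FILTER="/include-file.txt"
MAX_AGE="24h"

requests_for() {
    # $1: log file to write; remaining args: extra rclone flags to test.
    logfile="$1"; shift
    rm -f "$logfile"
    rclone copy "$SRC" "$DST" --include-from "$FILTER" --max-age "$MAX_AGE" \
        --dry-run -vv --dump headers --log-file "$logfile" "$@"
    grep -c 'HTTP REQUEST' "$logfile"
}

compare_modes() {
    # $1: directory for the three log files (default: current directory).
    dir="${1:-.}"
    printf '%s\n' "default:       $(requests_for "$dir/default.log")"
    printf '%s\n' "--fast-list:   $(requests_for "$dir/fast-list.log" --fast-list)"
    printf '%s\n' "--no-traverse: $(requests_for "$dir/no-traverse.log" --no-traverse)"
}
```

For example, compare_modes /tmp/rclone-test (an existing directory) writes three logs there and prints one request count per mode. Keep in mind that --fast-list holds the whole listing in memory, so its request count is not the only cost to weigh.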


system

September 26, 2021, 10:54am


This topic was automatically closed 3 days after the last reply. New replies are no longer allowed.