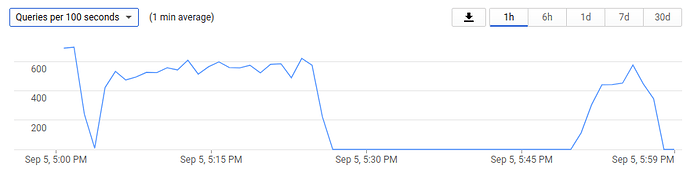

Separate from the issue where I'm getting a large number of ERROR : ... Failed to copy: file already closed messages, I have also had to restart rclone every morning after checking in on it. It always gets stuck at some point overnight. The command that I use is:

rclone copy . GDriveCrypt: --bwlimit 8650k --progress --fast-list --verbose --transfers=10 --checkers=20 --tpslimit=10 --tpslimit-burst=10 --drive-chunk-size=256M --log-file=./log.log

In the mornings I see no network activity and 0% CPU usage for rclone (it is around 40% during active transfers), even though the displayed transfer rate is still a non-zero number. I have to stop it with a keyboard interrupt:

Transferred: 472.334G / 1.461 TBytes, 32%, 8.341 MBytes/s, ETA 1d10h55m25s

Errors: 92 (retrying may help)

Checks: 449886 / 449886, 100%

Transferred: 12763 / 22791, 56%

Elapsed time: 16h6m29.7s

Transferring:

* <file>.imgc: 5% /912.067G, 2.231M/s, 109h29m4s

* <file>.JPG: transferring

* <file>.mkv: 58% /13.054G, 2.269M/s, 40m25s

* <file>.mp4: 83% /1.640G, 2.288M/s, 2m4s

* <file>.JPG: 0% /754.299k, 0/s, -

* <file>.JPG: 0% /1.146M, 0/s, -

* <file>.jar: 0% /985.255k, 0/s, -

* <file>.png: 0% /133, 0/s, -

* <file>.JPG: 0% /2.228M, 0/s, -^C

The last entry in my log file from yesterday is at 2:24:36 AM with a status of INFO : ... : Copied (new), and the last entry in the log from the day before is at 7:54:44 AM with the same status.

I'm not sure what to do here. I'm happy to post the logs from yesterday and the day before if someone can guide me on how to censor the personal information in them. (I'm happy to write a quick parser to do this if no one has a better solution and the logs are necessary to proceed.)
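For the parser, this is roughly what I had in mind. It is only a sketch: it assumes each log line looks like `<date> <time> LEVEL : <path>: <message>` (which is what my log appears to contain) and that file paths never contain a colon followed by a space. The names `redact_line` and `redact_file` are my own, not part of rclone. Each path is replaced with a short hash, so the same file can still be followed through the log without revealing its name.

```python
import hashlib
import re

# Assumed line shape: "<date> <time> LEVEL : <path>: <message>".
# Lines that do not fit this shape are passed through unchanged.
LINE_RE = re.compile(
    r"^(?P<prefix>\S+ \S+ (?:DEBUG|INFO|NOTICE|ERROR)\s*: )"
    r"(?P<path>.+?)(?P<rest>: .*)$"
)


def redact_line(line: str) -> str:
    """Replace the path in one log line (no trailing newline) with a hash."""
    match = LINE_RE.match(line)
    if match is None:
        return line
    path = match.group("path")
    # A short, stable digest: the same path always maps to the same token.
    digest = hashlib.sha256(path.encode("utf-8")).hexdigest()[:10]
    # Keep a short alphanumeric extension, since the file type can matter.
    _, dot, ext = path.rpartition(".")
    suffix = f".{ext}" if dot and ext.isalnum() and len(ext) <= 5 else ""
    return f"{match.group('prefix')}<file-{digest}>{suffix}{match.group('rest')}"


def redact_file(src: str, dst: str) -> None:
    """Write a redacted copy of the log at src to dst."""
    with open(src, encoding="utf-8", errors="replace") as fin, \
         open(dst, "w", encoding="utf-8") as fout:
        for line in fin:
            fout.write(redact_line(line.rstrip("\n")) + "\n")
```

I would run it as `redact_file("log.log", "log.redacted.log")` and still read through the result before posting, since anything that does not match the assumed line shape is left untouched.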