What is the problem you are having with rclone?

I have been able to reproduce this in a variety of ways. Right now I have rclone mounted using some of Animosity022's scripts (much older versions of them).

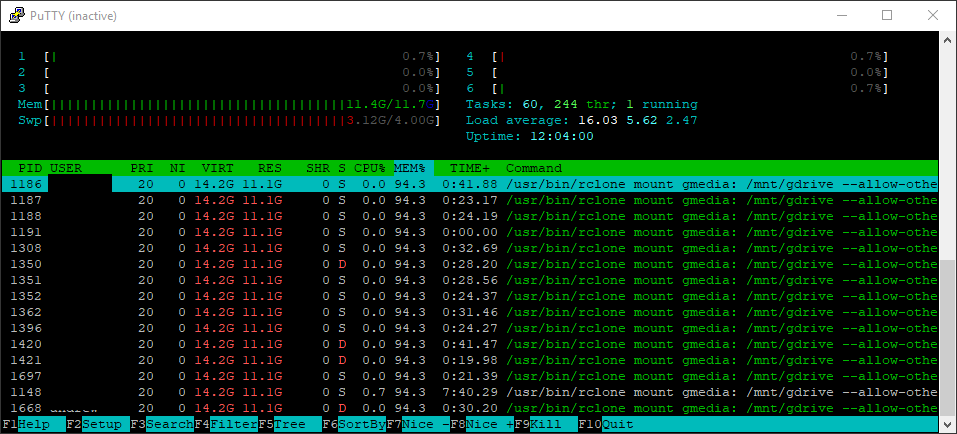

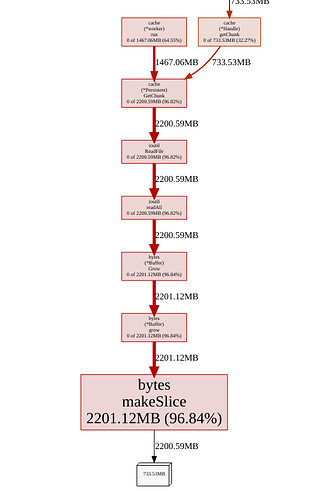

When I play files back directly from the cache mount, RAM usage is normal/minimal (~1-2 GB). When I scan my library, however, usage climbs to something like what the screenshots above show, which eventually causes my guest VM to lock up, and subsequently locks up all the other guests and, to some extent, the host. I am trying to find ways to optimize this or to put a hard cap on the amount of RAM rclone uses. Are there any suggestions on how to improve this?

What is your rclone version (output from rclone version)

@plex:~$ rclone version

rclone v1.49.2

- os/arch: linux/amd64

- go version: go1.12.9

Which OS you are using and how many bits (eg Windows 7, 64 bit)

Ubuntu 18.04.3, 64-bit

Which cloud storage system are you using? (eg Google Drive)

Google Drive/Team Drives

The command you were trying to run (eg rclone copy /tmp remote:tmp)

I was using a caching server; the systemd unit files are as follows:

user@plex:/etc/systemd/system$ grep ^ /dev/null gmedia*

gmedia-find.service:[Unit]

gmedia-find.service:Description=gmedia find

gmedia-find.service:PartOf=gmedia.service

gmedia-find.service:After=mnt-gdrive.mount

gmedia-find.service:

gmedia-find.service:[Service]

gmedia-find.service:Type=simple

gmedia-find.service:

gmedia-find.service:ExecStart=/usr/bin/rclone rc vfs/refresh recursive=true

gmedia-find.service:RemainAfterExit=yes

gmedia-find.service:User=user

gmedia-find.service:Group=user

gmedia-find.service:

gmedia-find.service:[Install]

gmedia-find.service:# Components of this application should be started at boot time

gmedia-find.service:WantedBy=gmedia.service

gmedia-rclone.service:[Unit]

gmedia-rclone.service:Description=RClone Service

gmedia-rclone.service:PartOf=gmedia.service

gmedia-rclone.service:

gmedia-rclone.service:[Service]

gmedia-rclone.service:Type=notify

gmedia-rclone.service:#Environment=RCLONE_CONFIG=/opt/rclone/rclone.conf

gmedia-rclone.service:Environment=RCLONE_CONFIG=/home/user/.config/rclone/rclone.conf

gmedia-rclone.service:

gmedia-rclone.service:ExecStart=/usr/bin/rclone mount gmedia: /mnt/gdrive \

gmedia-rclone.service:--allow-other \

gmedia-rclone.service:--dir-cache-time 96h \

gmedia-rclone.service:--drive-chunk-size 16M \

gmedia-rclone.service:--log-level DEBUG \

gmedia-rclone.service:--log-file /var/log/rclone.log \

gmedia-rclone.service:--timeout 3h \

gmedia-rclone.service:--umask 002 \

gmedia-rclone.service:--rc

gmedia-rclone.service:

gmedia-rclone.service:ExecStop=/bin/fusermount -uz /mnt/gdrive

gmedia-rclone.service:Restart=on-failure

gmedia-rclone.service:User=user

gmedia-rclone.service:Group=user

gmedia-rclone.service:

gmedia-rclone.service:[Install]

gmedia-rclone.service:WantedBy=gmedia.service

gmedia.service:[Unit]

gmedia.service:Description=gdrive

gmedia.service:After=network-online.target

gmedia.service:Wants=network-online.target

gmedia.service:

gmedia.service:[Service]

gmedia.service:# The dummy program will exit

gmedia.service:Type=oneshot

gmedia.service:# Execute a dummy program

gmedia.service:ExecStart=/bin/true

gmedia.service:# This service shall be considered active after start

gmedia.service:RemainAfterExit=yes

gmedia.service:

gmedia.service:[Install]

gmedia.service:# Components of this application should be started at boot time

gmedia.service:WantedBy=multi-user.target

grep: gmedia.service.wants: Is a directory

user@plex:/etc/systemd/system$ grep ^ /dev/null mnt-gdrive.mount

mnt-gdrive.mount:[Unit]

mnt-gdrive.mount:Description = /gdrive MergerFS mount

mnt-gdrive.mount:PartOf=gmedia.service

mnt-gdrive.mount:After=gmedia-rclone.service

mnt-gdrive.mount:RequiresMountsFor=/data

mnt-gdrive.mount:

mnt-gdrive.mount:[Mount]

mnt-gdrive.mount:What = /mnt/gdrive

mnt-gdrive.mount:Where = /mnt/gdrive

mnt-gdrive.mount:Type = fuse.mergerfs

mnt-gdrive.mount:Options = defaults,sync_read,auto_cache,use_ino,allow_other,func.getattr=newest,category.action=all,category.create=ff

mnt-gdrive.mount:

mnt-gdrive.mount:[Install]

mnt-gdrive.mount:WantedBy=gmedia.service

user@plex:/etc/systemd/system$

A log from the command with the -vv flag (eg output from rclone -vv copy /tmp remote:tmp)

The debug log just shows rclone scrolling through files. I removed it because it eventually took up more than 1 GB of space. I am happy to provide it, but a log of file exploration doesn't seem like it would be of much benefit.