Hi everyone,

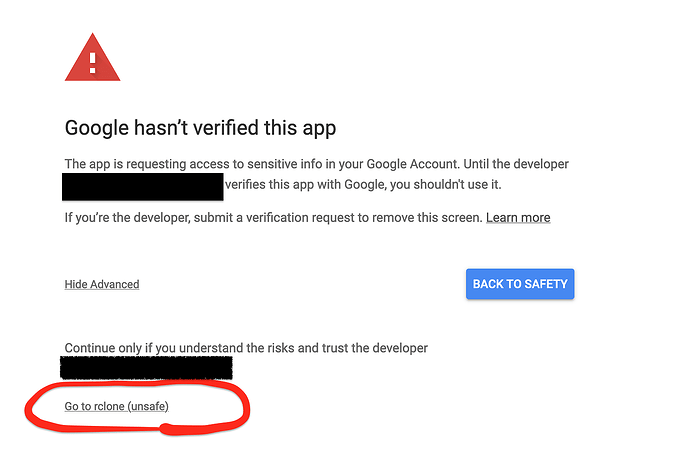

I've been using rclone for a few years to sync a large photo collection to Google Photos. It mostly works, but it struggles at times, and I'm looking for ways to make subsequent runs more efficient.

The command I typically use is this one:

```powershell
PS C:\rclone> .\rclone.exe sync -P --tpslimit 1 --transfers 1 `
    --retries-sleep 1m H:\Photos\ remote_google_photos:album/ `
    --include "**.{JPG,jpg,PNG,png,TIFF,tiff,NEF,nef,ARW,arw}"
```

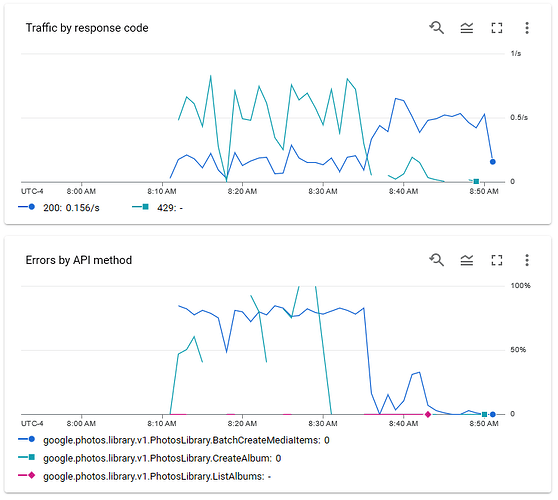

This seems to alleviate quota errors, and it usually runs for several days without issue. Here's the current run, which I launched yesterday:

```text
Transferred:   59.897Gi / 92.423 GiByte, 65%, 41.981 KiByte/s, ETA 1w2d9h39m33s
Errors:        125 (retrying may help)
Checks:        344425 / 344425, 100%
Transferred:   9711 / 19713, 49%
Elapsed time:  9h31m12.6s
```
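For reference, the ETA appears to be simply the remaining data divided by the current transfer rate. The quick Python check below uses the figures above and assumes `Gi`/`GiByte` and `KiByte` are binary units (1 GiB = 1024 × 1024 KiB). At about 42 KiB/s the upload moves only around 3.5 GiB per day, so even a modest amount of re-uploading takes days.

```python
# Figures copied from the rclone -P output above (assumed binary units: GiB, KiB).
transferred_gib = 59.897
total_gib = 92.423
rate_kib_per_s = 41.981

# Remaining data in KiB divided by the current average rate gives seconds left.
remaining_kib = (total_gib - transferred_gib) * 1024 * 1024
eta_s = remaining_kib / rate_kib_per_s

# Break the ETA down the same way rclone displays it (days, hours, minutes, seconds).
days, rem = divmod(int(eta_s), 86_400)
hours, rem = divmod(rem, 3_600)
minutes, seconds = divmod(rem, 60)
print(f"ETA about {days}d {hours}h {minutes}m {seconds}s")
```

This comes out at roughly 9 days and 9 hours 40 minutes, within a minute of rclone's `1w2d9h39m33s`; the small gap presumably comes from the rounded figures in the display.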

This run is estimated to take over a week to complete. My issue is that 99% of the files in this collection have already been uploaded to Google Photos, and there may only be a few outliers that need to be re-added, so a week-long upload seems excessive.

I've been looking for a way to keep state between rclone and Google Photos. It doesn't look like the Google Photos API can return the hash of a photo, which would have been my preferred way to check that the remote and local files are identical without re-uploading them. Failing that, I was hoping rclone could keep a local database of the photos already uploaded, and check that they have not changed locally before uploading them again. Is there a way to achieve this?
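The local-state idea could be approximated outside rclone with a small wrapper script. Below is a minimal Python sketch, not an rclone feature: it assumes a hypothetical JSON state file (for example `state.json`), treats a file as unchanged when its size and modification time match the last recorded successful run, and writes the remaining relative paths to a list file. The function names are hypothetical, and the extension set mirrors the `--include` pattern but is matched case-insensitively.

```python
import json
from pathlib import Path

# Assumption: same extensions as the --include pattern, matched case-insensitively.
EXTENSIONS = {".jpg", ".png", ".tiff", ".nef", ".arw"}


def scan(root: Path) -> dict[str, list[int]]:
    """Map each matching file's path (relative to root) to [size, mtime_ns]."""
    state = {}
    for path in root.rglob("*"):
        if path.is_file() and path.suffix.lower() in EXTENSIONS:
            st = path.stat()
            state[path.relative_to(root).as_posix()] = [st.st_size, st.st_mtime_ns]
    return state


def load_state(db: Path) -> dict[str, list[int]]:
    """Read the state recorded after the last successful upload (empty on first run)."""
    if not db.exists():
        return {}
    return json.loads(db.read_text(encoding="utf-8"))


def save_state(db: Path, state: dict[str, list[int]]) -> None:
    """Persist the scanned state; meant to be called only after rclone succeeds."""
    db.write_text(json.dumps(state), encoding="utf-8")


def changed_files(current: dict, previous: dict) -> list[str]:
    """Paths that are new, or whose size or mtime differ from the recorded state."""
    return sorted(p for p, sig in current.items() if previous.get(p) != sig)


def write_file_list(paths: list[str], out: Path) -> None:
    """Write one relative path per line, the format read by rclone's --files-from."""
    out.write_text("".join(p + "\n" for p in paths), encoding="utf-8")
```

The resulting list could then be handed to rclone with `--files-from`, so that only new or changed photos are considered. `save_state` should only run after rclone exits successfully; otherwise photos that failed to upload would be recorded as done and skipped on the next run.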

Thank you for your assistance!

Julien