asdffdsa

September 18, 2020, 7:54pm


What is the problem you are having with rclone?

when rclone creates a bucket, the bucket is created with the standard storage class.

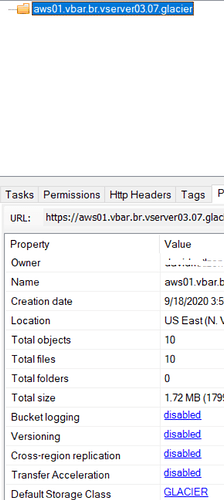

the lsf command does not list a storage class for the bucket; the tier field is empty: ;aws01.vbar.br.vserver03.07.glacier/

when using s3 browser and/or cloudberry explorer:

i can see the bucket is created with the standard storage class, not glacier.

i can change the storage class of the bucket from standard to glacier.

What is your rclone version (output from rclone version)

rclone v1.53.1

- os/arch: windows/amd64

- go version: go1.15

Which OS you are using and how many bits (eg Windows 7, 64 bit)

win10.2004.64

Which cloud storage system are you using? (eg Google Drive)

aws s3

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone.exe copy C:\data\boot aws_glacier:aws01.vbar.br.vserver03.07.glacier -vv

rclone.exe lsf -R --format=Tp aws_glacier: -vv

The rclone config contents with secrets removed.

[aws_glacier]

type = s3

provider = AWS

access_key_id = redacted

secret_access_key = redacted

region = us-east-1

storage_class = GLACIER

A log from the command with the -vv flag

DEBUG : rclone: Version "v1.53.1" starting with parameters ["rclone.exe" "copy" "C:\\data\\boot" "aws_glacier:aws01.vbar.br.vserver03.07.glacier" "-vv"]

DEBUG : Creating backend with remote "C:\\data\\boot"

DEBUG : Using config file from "c:\\data\\rclone\\scripts\\rclone.conf"

DEBUG : fs cache: renaming cache item "C:\\data\\boot" to be canonical "//?/C:/data/boot"

DEBUG : Creating backend with remote "aws_glacier:aws01.vbar.br.vserver03.07.glacier"

DEBUG : S3 bucket aws01.vbar.br.vserver03.07.glacier: Waiting for checks to finish

DEBUG : S3 bucket aws01.vbar.br.vserver03.07.glacier: Waiting for transfers to finish

INFO : S3 bucket aws01.vbar.br.vserver03.07.glacier: Bucket "aws01.vbar.br.vserver03.07.glacier" created with ACL "private"

DEBUG : dyndns.cmd: MD5 = 5232a9d7b42bbf24ab71831e41543197 OK

INFO : dyndns.cmd: Copied (new)

DEBUG : test/amsg.exe: MD5 = 62a7d7be6d6514af5e08be486757f4e0 OK

INFO : test/amsg.exe: Copied (new)

DEBUG : rclone: Version "v1.53.1" starting with parameters ["rclone.exe" "lsf" "-R" "--format=Tp" "aws_glacier:" "-vv"]

DEBUG : Using config file from "c:\\data\\rclone\\scripts\\rclone.conf"

DEBUG : Creating backend with remote "aws_glacier:"

;aws01.vbar.br.vserver03.07.glacier/

GLACIER;aws01.vbar.br.vserver03.07.glacier/dyndns.cmd

GLACIER;aws01.vbar.br.vserver03.07.glacier/test/amsg.exe

;aws01.vbar.br.vserver03.07.glacier/test/
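With --format=Tp and rclone's default ";" separator, each lsf line above is "tier;path". The minimal Python sketch below (the function name parse_lsf_tp is hypothetical, not part of rclone) splits that output to make the pattern explicit: directory entries, including the bucket itself, carry an empty tier, while only the copied objects show GLACIER.

```python
from typing import List, Tuple


def parse_lsf_tp(output: str) -> List[Tuple[str, str]]:
    """Split `rclone lsf --format=Tp` output into (tier, path) pairs.

    Assumes the default ';' separator; paths ending in '/' are directories.
    """
    entries = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # split only on the first ';' so any ';' inside a path stays intact
        tier, path = line.split(";", 1)
        entries.append((tier, path))
    return entries


# lsf output copied from the log above
sample = """;aws01.vbar.br.vserver03.07.glacier/
GLACIER;aws01.vbar.br.vserver03.07.glacier/dyndns.cmd
GLACIER;aws01.vbar.br.vserver03.07.glacier/test/amsg.exe
;aws01.vbar.br.vserver03.07.glacier/test/"""

for tier, path in parse_lsf_tp(sample):
    kind = "dir " if path.endswith("/") else "file"
    print(f"{kind} tier={tier or '(none)'} {path}")
```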

ncw

September 18, 2020, 8:39pm


I didn't know buckets had a storage class, I thought it was only the objects.

It doesn't appear to mention it in the create bucket docs

https://docs.aws.amazon.com/AmazonS3/latest/API/API_CreateBucket.html#API_CreateBucket_RequestSyntax

Can you find the API for how you read/set the storage class of the bucket?

This could be a backend command

asdffdsa

September 18, 2020, 8:47pm


i could be confused.

i am sure you know if a bucket can have a storage class.

ncw

September 18, 2020, 9:04pm


I know a little bit about a lot of storage providers and there is always new stuff to learn for AWS S3!

There is a different thing called a glacier archive which rclone doesn't support.

This is the best doc I've found so far

asdffdsa

September 18, 2020, 9:06pm


thanks,

after a rethink, what s3 browser and cloudberry are doing is setting a default storage class.

asdffdsa

September 18, 2020, 10:36pm


in my testing, rclone already supports a default storage class.

asdffdsa

September 18, 2020, 10:51pm


doing more testing, rclone does not seem to support a default storage class for a bucket.

after adding storage_class = GLACIER to a remote, the files that are copied are set to GLACIER.

ncw

September 19, 2020, 10:15am


Interesting. I haven't found out how to set the default storage class yet. Are there any docs for S3 browser / cloudberry which tells you what it does? Maybe you can snoop on its http transactions?

asdffdsa

September 19, 2020, 3:03pm


i did a lot of testing and i found that there is no default storage class for a bucket.

rclone and s3 browser do the same thing: each sends the header x-amz-storage-class: GLACIER with every file copied.

with s3 browser, as a convenience in the gui, you can set the default class for a bucket.
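The finding above can be modelled in a few lines: the storage class is a header on each object upload, and S3 records STANDARD when the header is absent. The Python sketch below is illustrative only; put_object_headers, effective_class and gui_bucket_default are hypothetical names, and the "bucket default" is treated as a purely client-side setting that gets applied to every upload, which is how the thread describes the S3 Browser feature.

```python
from typing import Dict, Optional

# S3 records STANDARD when a PUT carries no x-amz-storage-class header.
S3_DEFAULT_CLASS = "STANDARD"


def put_object_headers(storage_class: Optional[str] = None) -> Dict[str, str]:
    """Headers a client would add to one PutObject request (sketch).

    The storage class travels with each object upload; there is no
    bucket-level field the client reads it from.
    """
    headers: Dict[str, str] = {}
    if storage_class:
        headers["x-amz-storage-class"] = storage_class
    return headers


def effective_class(headers: Dict[str, str]) -> str:
    """Storage class S3 would record for an object uploaded with these headers."""
    return headers.get("x-amz-storage-class", S3_DEFAULT_CLASS)


# Hypothetical client-side "default for this bucket", applied per upload.
gui_bucket_default = "GLACIER"
for name in ["dyndns.cmd", "test/amsg.exe"]:
    h = put_object_headers(gui_bucket_default)
    print(name, h, effective_class(h))

print(effective_class(put_object_headers()))  # no header sent -> STANDARD
```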

thanks

ncw

September 19, 2020, 3:14pm


Cool! That has cleared that up.

So setting this (quoting asdffdsa) should be equivalent:

storage_class = GLACIER

asdffdsa

September 19, 2020, 3:26pm


correct,

adding storage_class = GLACIER to the config file, or --s3-storage-class=GLACIER to the command, is the equivalent of the default bucket policy in s3 browser.
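As a rough illustration of those two routes, the Python sketch below reads storage_class from the [aws_glacier] section posted earlier using the standard configparser module, and lets an optional flag value win. That precedence (flag over config file) is an assumption based on how recent rclone docs describe option lookup; storage_class_for is a hypothetical helper, and in this sketch an empty result stands for "no storage class header sent".

```python
import configparser
from typing import Optional

# Config copied from the original post (secrets already redacted).
RCLONE_CONF = """
[aws_glacier]
type = s3
provider = AWS
access_key_id = redacted
secret_access_key = redacted
region = us-east-1
storage_class = GLACIER
"""


def storage_class_for(remote: str, conf_text: str, flag: Optional[str] = None) -> str:
    """Pick the storage class to send with each upload (sketch).

    Assumption: a --s3-storage-class value from the command line wins
    over the config file entry; "" means no header would be sent.
    """
    if flag:
        return flag
    cfg = configparser.ConfigParser()
    cfg.read_string(conf_text)
    return cfg.get(remote, "storage_class", fallback="")


print(storage_class_for("aws_glacier", RCLONE_CONF))                      # from config file
print(storage_class_for("aws_glacier", RCLONE_CONF, flag="DEEP_ARCHIVE"))  # flag value used
```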


system

November 19, 2020, 11:26am


This topic was automatically closed 60 days after the last reply. New replies are no longer allowed.