As context for this request, I'd like to share a short story about a problem I diagnosed in my rclone setup.

I'm nonprofessionally running some embarrassingly parallel scientific computations on several compute nodes around the world (whatever I managed to snatch cheaply ;-)) that I've set up to use rclone vfs for shared storage on a single sftp server. The shared storage hosts a dataset partitioned into directories, and each partition also has a large number of subdirectories inside. A single job reads a small number of files and creates a large number of files, both within a single partition, but potentially across all subdirectories. All operations are technically random, but if a job touches a given file, that file is very likely to be read many times; at the same time, my job queueing system ensures that a partition is not used concurrently by many jobs, so rclone vfs with full caching is absolutely perfect here. The way I'm using rclone is that each time a job starts, a script sets up a new rclone remote, then the computations happen, then the script waits until all uploads finish, and the remote is deleted.

Observation #1: A single partition still consists of millions of files and has a total size of around a terabyte, which means that rclone's directory cache can reach 5-6 GB. This is not a big problem for me here specifically, though out of curiosity some time ago I did collect a profile, and contributed a small patch. However, almost half of that memory is eaten by strings (file paths), so I assume it would be rather difficult to get a significant improvement here anyway.

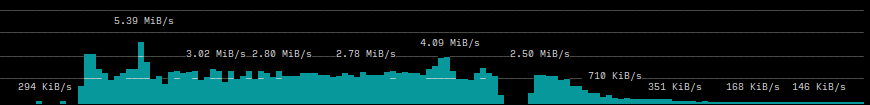

Observation #2: I've noticed that my SFTP server seemed to have much more egress than I expected. I expected the scripts to download maybe a few hundred kilobytes of data per second, and I was observing ten times that. I ran my sftp server with debug logs and realized that it just keeps redownloading directory listings… even though my jobs do not really need to list directories. With millions of files and long paths, this seems to have amounted to megabytes per second. As a quick fix I changed --dir-cache-time from the default 5 minutes to 60 minutes. This reduced egress to the levels I expected. A curious side effect was that rclone's memory usage also dropped by around a gigabyte; my guess is that this gigabyte consisted of expired, but not yet garbage-collected, directory cache entries.
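To illustrate why the longer cache time helps, here is a rough toy model, not rclone's actual logic. It assumes a directory is fully re-listed whenever it is touched after its cached listing has expired, and that the expiry is counted from the moment of the listing. The function name and the access pattern are made up for the example.

```python
def count_listings(access_times_s, ttl_s):
    """Count full listings of one directory under a simple TTL cache.

    Assumption: any access after expiry triggers one full re-listing.
    """
    listings = 0
    expires_at = None  # no listing cached yet
    for t in sorted(access_times_s):
        if expires_at is None or t >= expires_at:
            listings += 1
            expires_at = t + ttl_s
    return listings


# Hypothetical job: touches one directory once a minute for two hours.
accesses = [60 * i for i in range(120)]

print(count_listings(accesses, 5 * 60))   # 24 listings with a 5 minute TTL
print(count_listings(accesses, 60 * 60))  # 2 listings with a 60 minute TTL
```

In this model the number of listings per directory scales roughly inversely with the cache time, which is at least consistent with the drop in egress I saw.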

(SFTP server egress for two job runs: one before the --dir-cache-time change, one after.)

I had a look at rclone's source code and noticed that vfs.Dir.Create calls stat, which performs a full directory listing if the directory that will contain the new file is not already cached. My jobs create a large number of files, which is probably what triggers this problem.
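The behaviour described above can be sketched with a toy model. This is only my reading of the code path, simplified: ToyDir and its methods are hypothetical stand-ins written for this post, not rclone's vfs.Dir, and the entry counts are invented.

```python
class ToyDir:
    """Toy stand-in for a cached directory (not rclone's vfs.Dir)."""

    def __init__(self, remote_entries):
        self.remote_entries = set(remote_entries)  # what the server holds
        self.cached = None         # None means no valid listing in cache
        self.entries_fetched = 0   # metadata entries pulled from the server

    def _read_dir(self):
        # A cache miss pulls the whole listing, however many entries it has.
        if self.cached is None:
            self.cached = set(self.remote_entries)
            self.entries_fetched += len(self.cached)

    def stat(self, name):
        self._read_dir()
        return name in self.cached

    def create(self, name):
        # Creating a file first checks whether it already exists.
        if not self.stat(name):
            self.remote_entries.add(name)
            self.cached.add(name)

    def expire(self):
        self.cached = None


d = ToyDir(f"f{i}" for i in range(100_000))
d.create("new-a")  # cold cache: full listing first
d.create("new-b")  # warm cache: no extra listing
d.expire()
d.create("new-c")  # expired cache: full listing again
print(d.entries_fetched)  # 200002
```

In this model, creating three small files costs two full listings of a 100,000-entry directory, even though the job never asks for a listing itself.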

As such, it would be nice to be able to opt out of caching full directories when only operating on single files, so that there is no additional egress at all. I suspect that would be a major change to the code, though, and I do see that caching full directories is a useful simplification.

As an aside, some diagnostics on the amount of traffic used for metadata transfers would be very helpful.

In any case, --dir-cache-time at 60 minutes is a good enough workaround for me at this time. That said, I do hope this kind of optimization would be useful for others.