First question

Which file attribute does rclone use to determine a file's age for --max-age?

Second question

Would running rclone copy --max-age 39659s (11 hours and 59 seconds) every 12 hours from my NAS to Google Photos be a good way to:

- prevent uploading files I deleted in Google Photos;

- include files that I uploaded to my NAS more than 12 hours after creation;

- include files that I uploaded to my NAS more than 24 hours after creation?
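To make the timing concrete, here is a small sketch of the arithmetic behind that schedule. It only converts the numbers from the question; the variable names are mine, and it assumes the job really does start exactly every 12 hours.

```python
from datetime import timedelta

# Values taken from the question above
max_age = timedelta(seconds=39659)   # the --max-age value
interval = timedelta(hours=12)       # how often rclone copy would run

# Part of each 12-hour cycle that no run's --max-age window reaches back to
uncovered = interval - max_age

print(max_age)     # 11:00:59
print(uncovered)   # 0:59:01
```

So 39659s is 11 hours, 0 minutes and 59 seconds, which leaves roughly an hour per cycle that neither run would look at. If 11 hours and 59 minutes were intended, that would be 43140s.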

What is the problem you are having with rclone?

I recently uploaded my photo/video library from my NAS to Google Photos. I back up everything from my phone to the NAS (mostly) daily, except when I am abroad or when I don't charge my phone at night.

The upload to Google Photos is for the unique features only Google offers at this quality (recognition, sharing, Chromecast ambient mode, etc.).

I don't need all the pictures that are uploaded, though. For example, I delete blurry or accidental pictures once they are uploaded to Photos.

I am trying to figure out how to prevent rclone from re-uploading these files: when rclone copy runs (twice a day), it finds that the file is missing from Google Photos and uploads it again. I thought about using --max-age, but I expect problems depending on which age rclone checks:

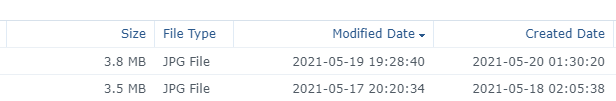

Synology, Android or Syncthing is not handling the backup well. As far as I can tell, the last picture was taken on the 19th at 19:28 and uploaded to my NAS on the 20th at 01:30. So --max-age would need to use the created date to work in my situation at all.
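A rough sketch of why the attribute matters for this picture. The month and year are placeholders (only the day and time of day are real), the 12:00 run time is hypothetical, and `within_max_age` is my own helper, not an rclone function. I don't know which of the two timestamps rclone actually compares, so the sketch checks both.

```python
from datetime import datetime, timedelta

MAX_AGE = timedelta(seconds=39659)  # the --max-age value I had in mind

def within_max_age(file_time, run_time, max_age=MAX_AGE):
    """Return True if file_time is no older than max_age at run_time."""
    return run_time - file_time <= max_age

# Placeholder month/year; day and time of day are from the example above
taken = datetime(2021, 5, 19, 19, 28)    # picture taken
arrived = datetime(2021, 5, 20, 1, 30)   # picture landed on the NAS
run = datetime(2021, 5, 20, 12, 0)       # hypothetical rclone run

print(arrived - taken)               # 6:02:00
print(within_max_age(taken, run))    # False
print(within_max_age(arrived, run))  # True
```

With these assumed times, the same file is skipped if the age is measured from when the picture was taken, and copied if it is measured from when it arrived on the NAS.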

If so, would running rclone copy --max-age 39659s (11 hours and 59 seconds) every 12 hours be a good way both to prevent uploading deleted files and to include files that I upload more than 24 hours after creation?

What is your rclone version (output from rclone version)

rclone v1.55.1

- os/type: linux

- os/arch: amd64

- go/version: go1.16.3

- go/linking: static

- go/tags: none

Which OS you are using and how many bits (eg Windows 7, 64 bit)

majorversion="6"

minorversion="2"

major="6"

minor="2"

micro="4"

productversion="6.2.4"

buildphase="GM"

buildnumber="25556"

smallfixnumber="0"

nano="0"

base="25556"

builddate="2021/03/18"

buildtime="14:40:29"

Which cloud storage system are you using? (eg Google Drive)

Google Photos

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone copy --max-age 39659s

The rclone config contents with secrets removed.

[gphotos]

type = google photos

token = {"access_token":"token"}

client_id = client_id.apps.googleusercontent.com

client_secret = secret

A log from the command with the -vv flag

irrelevant

Thanks in advance!