I corrected the "microsoft family" values and added plans and values for Backblaze B2, 1fichier and Mega. I also colored the lines, grouped by company.

welcome, looks better

from the spreadsheet:

"Similar to AWS Glacier Deep but you can write to it directly."

not sure that is correct, as i use rclone to transfer files directly to AWS Glacier Deep Archive.

as per the docs, "You can upload objects using the glacier storage class"
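For reference, a minimal sketch of that direct upload. It assumes an S3 remote named `s3remote:` (hypothetical) is already configured in rclone, and it only assembles and prints the command line, using rclone's `--s3-storage-class DEEP_ARCHIVE` option:

```python
# Sketch: assemble an `rclone copy` that writes objects straight into the
# S3 Glacier Deep Archive storage class. "s3remote:" and the paths are
# hypothetical placeholders, not names from this thread.
import shlex


def deep_archive_copy_cmd(src: str, dst: str) -> list[str]:
    """Return the argv for `rclone copy SRC DST` with the S3 storage class set."""
    return [
        "rclone", "copy", src, dst,
        # rclone S3 backend option; DEEP_ARCHIVE selects Glacier Deep Archive.
        "--s3-storage-class", "DEEP_ARCHIVE",
    ]


if __name__ == "__main__":
    cmd = deep_archive_copy_cmd("/data/archive", "s3remote:my-bucket/archive")
    # Prints the shell-quoted command; pass `cmd` to subprocess.run(cmd, check=True)
    # to actually run it once rclone and the remote are configured.
    print(shlex.join(cmd))
```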

"Achieved Larger By Union on Multiple Drives"

not sure why that is in the sheet, what does it have to do with storage cost differences between providers?

union is a convenience feature of rclone and works with almost any provider(s).

It was a note to explain how the costing was calculated, and what was required to achieve the larger sizes.

your idea of the spreadsheet is really working well...

i created a temp sheet called temp01 for discussion. i can delete it afterwards.

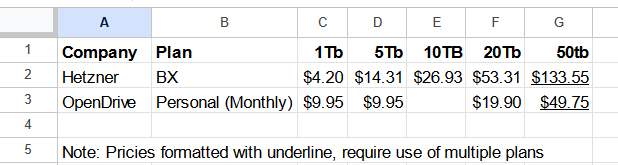

i think the issue is which sizes require multiple plans.

in my example sheet, an underline symbol indicates that.

and for multiple plans, the sheet might use a formula to calculate the cost from the individual plans.

as prices are always changing, using a formula will make the sheet easier to maintain and update.

i tried to do an example with hetzner but i got confused.

- for 50TB, the cost is $112.47; which individual plans was it calculated from?

- hetzner offers a 10TB plan and that is not listed in the sheet?

i would think the 50TB plan would be 20TB+20TB+10TB.

for that, i get €48.31+€48.31+€24.75 = €121.37 = $132.08
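Following the formula idea, a minimal sketch of how a multi-plan row could be derived from the individual plans instead of typed in: try every count of each plan and keep the cheapest mix that covers the target size. It assumes only the 10TB and 20TB Hetzner plans at the EUR prices quoted here (`HETZNER_EUR` and `cheapest_combo` are hypothetical names), and it leaves the EUR to USD conversion to a separate exchange-rate input.

```python
# Sketch: cheapest mix of individual plans that reaches a target size, i.e. what
# a derived multi-plan row could be computed from. Prices are the monthly EUR
# figures quoted in this post; treat them as a snapshot that will change.
from itertools import product

HETZNER_EUR = {10: 24.75, 20: 48.31}  # plan size in TB -> monthly price in EUR


def cheapest_combo(plans: dict[int, float], target_tb: int) -> tuple[float, dict[int, int]]:
    """Brute-force plan counts; return (cost, {size: count}) of the cheapest cover."""
    sizes = sorted(plans)
    # An optimal mix never needs more copies of a plan than would cover the
    # target on their own, so cap each count at ceil(target / size).
    ranges = [range(-(-target_tb // s) + 1) for s in sizes]
    best_cost, best_mix = float("inf"), {}
    for counts in product(*ranges):
        if sum(c * s for c, s in zip(counts, sizes)) < target_tb:
            continue  # this mix does not reach the target size
        cost = round(sum(c * plans[s] for c, s in zip(counts, sizes)), 2)
        if cost < best_cost:
            best_cost = cost
            best_mix = {s: c for s, c in zip(sizes, counts) if c}
    return best_cost, best_mix


if __name__ == "__main__":
    cost, mix = cheapest_combo(HETZNER_EUR, 50)
    print(cost, mix)  # 121.37 {10: 1, 20: 2}
```

With these inputs it agrees with the 20TB+20TB+10TB estimate. Brute force is fine for a handful of plans, and when prices change only the price table needs updating.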

My one pence worth of comment here: what's the point of including union options/opportunities? It can easily be taken to absurdity. For example, XYZ cloud offers 10GB of free storage, so I could have 5,000 of them and use union to have 50TB for free. Yes... in theory... but does it have any real value?
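For scale, 500 accounts of 10GB come to 5TB, and reaching 50TB would take 5,000 of them. A minimal sketch of that arithmetic, with hypothetical helper names and the assumptions noted in the comments:

```python
# Sketch of the arithmetic behind the free-accounts example. Assumes decimal
# units (1 TB = 1000 GB) and that a union simply adds up its upstreams' quotas;
# real accounts have their own limits and terms of service.


def union_capacity_gb(accounts: int, per_account_gb: int) -> int:
    """Combined quota of a union over identical upstream accounts."""
    return accounts * per_account_gb


def accounts_needed(target_gb: int, per_account_gb: int) -> int:
    """Smallest number of identical accounts whose quotas cover target_gb."""
    return -(-target_gb // per_account_gb)  # integer ceiling division


if __name__ == "__main__":
    print(union_capacity_gb(500, 10))   # 5000 (GB), i.e. 5 TB
    print(accounts_needed(50_000, 10))  # 5000 accounts for 50 TB
```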

That is probably a fair comment. Maybe the OpenDrive ones should read something along the lines of "Upload Speeds Crippled over 10TB, Multiple Accounts Allowed with rclone Union", but having typed that, the same silly example applies. Still, more detail could be helpful; I guess it's all about common sense.

might be helpful to others.

imho, it needs to be clear:

- which exact sizes each provider offers.

- which sizes require multiple plans, and possibly a union remote.

i tried to show that in my temp01 sheet

So, on box.com I have paid for 3 users, but I've only created 1 user. I don't intend to split the costs with anyone, as that's against the terms of service in my opinion.

Anyways, if I am alone in using this setup, should I bother to create the other 2 users as dummies? Does this matter at all? Would creating the other users grant more API calls or some such?

I am also using two different remote VPS to transfer data from google to box, maybe I should've made them separate users?

TLDR: If you're alone on your box.com account have you created multiple users to use yourself? or have you just left some licenses you pay for unclaimed like me?

These prices are pretty good: $200 for an amount of storage that Google would probably charge $300 for.

But uh, what happens when the server starts to wear out in many years? How does that end up working out?

I did the data transfer as the individual user. I think if you are doing it from different VPSes there is no harm in setting up separate users, but I am not entirely sure how this works for API accounts. In fact, my understanding in the past is that rclone itself isn't the issue; it's more about excessive bandwidth.

I had this case this year: after 6 years my old root server became a bit slow and needed a refresh.

So I bought a 2nd server, migrated everything to it (80% Docker containers), and gave back the old server after 14 days of parallel operation and testing.

Now I have a “brand new” server.

box seems to have somewhat poor bandwidth at 30-50MiB/s for me (dropbox and google could hit 200MiB/s iirc). Worse, though, box has absolutely horrendous API limits: it chokes horribly on small files at roughly 5-10 files per minute, but also, weirdly, sometimes bursts up to an extra 4000 files a day. It's tough to measure; the limiter is progressive and I haven't seen documentation on it.

I haven't tried making more users, but I doubt it would help, because it's the team that controls the custom api client_id IIRC?

Edit: After being stuck at around 5-10 files per minute for roughly 12 hours, I got another rapid burst of 10,000 files... so I think the progressive API throttling either resets around this time of day or every 12 hours?

Edit2: This round has been 40000 files without any API throttle (last time it kicked in after 10000), so maybe it's based on peak usage hours? Or maybe uh it could be due to how many API calls I wasted on checks, as this time I set --flags to reduce the number of files that would be checked, hmm.

Edit3: The 10000 files that triggered the API throttling were small, while the 40000 files that didn't trigger it were empty, and I didn't notice the difference, whoops.

Edit4: when moving small files, --tpslimit 12 really does help. It's a bit slow, but 40,000 files in 6 hours is a lot better than the 10,000 files in 12 hours I was getting without it.
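The effect of a transactions-per-second cap like `--tpslimit` can be pictured as a token bucket: each API call spends a token, tokens refill at a fixed rate, and so requests are spread out instead of arriving in bursts that trip the server-side throttle. A minimal sketch of that idea; `TokenBucket` and `files_per_hour` are hypothetical names, and this is not rclone's own code (the `burst` parameter is only analogous to rclone's `--tpslimit-burst`). It also puts the reported numbers side by side: 40,000 files in 6 hours is about 6,667 files per hour, roughly 8 times the ~833 per hour seen without the limit.

```python
# Sketch: a token-bucket limiter that caps calls per second, illustrating the
# idea behind a TPS limit such as rclone's --tpslimit. Not rclone's implementation.
import time


class TokenBucket:
    def __init__(self, rate_per_s: float, burst: int = 1) -> None:
        self.rate = rate_per_s       # tokens added per second
        self.capacity = burst        # maximum tokens that can accumulate
        self.tokens = float(burst)
        self.last = time.monotonic()

    def acquire(self) -> None:
        """Block until one token is available, then consume it."""
        while True:
            now = time.monotonic()
            # Refill in proportion to elapsed time, never above capacity.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1:
                self.tokens -= 1
                return
            time.sleep((1 - self.tokens) / self.rate)  # wait for the next token


def files_per_hour(files: int, hours: float) -> float:
    """Average throughput in files per hour."""
    return files / hours


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_s=12)  # 12 calls per second, like --tpslimit 12
    start = time.monotonic()
    for _ in range(25):
        bucket.acquire()                 # one API call would go here
    print(f"25 calls took {time.monotonic() - start:.2f}s")  # roughly 2s at 12/s
    with_limit = files_per_hour(40_000, 6)
    without = files_per_hour(10_000, 12)
    print(f"{with_limit:.0f} vs {without:.0f} files/hour")
```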

In the past I've saturated 1 gigabit on box with multiple smallish transfers. Tiny files are not great, but I've found that on most systems.

Have you ever found a strategy that could sustain a 1gbit line to box? I've found that throttling myself via --flags down to ~25-40MiB/s leads to overall higher speeds than saturating the line for a couple of minutes. If I peg it at ~125MiB/s for a minute or two, it ends up dropping to below 1MiB/s as a result.
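To see why pacing can win, compare the data moved over a fixed window. A minimal sketch: the rates come from the post, while the one-hour window, the two-minute burst and taking "below 1MiB/s" as exactly 1MiB/s are assumptions for illustration only. A cap like rclone's `--bwlimit` is one way to hold the steady rate.

```python
# Sketch: MiB moved in a fixed window for a steady, self-throttled rate versus a
# short burst followed by server-side throttling. Window length, burst length and
# the 1 MiB/s floor (an upper bound for "below 1MiB/s") are assumptions.


def mib_moved(phases: list[tuple[float, float]]) -> float:
    """Sum MiB transferred over (rate_mib_per_s, duration_s) phases."""
    return sum(rate * seconds for rate, seconds in phases)


WINDOW_S = 60 * 60                              # assumed one-hour window
steady = [(30.0, WINDOW_S)]                     # ~25-40 MiB/s, taken as 30 MiB/s
burst = [(125.0, 120), (1.0, WINDOW_S - 120)]   # 2 min at line rate, then ~1 MiB/s

if __name__ == "__main__":
    print(mib_moved(steady))  # 108000.0
    print(mib_moved(burst))   # 18480.0
```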

For this sort of thing I usually use pretty run-of-the-mill copy commands with --transfers 25 --fast-list --ignore-checksum --size-only --check-first
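Those flags written out as an argument list, e.g. for a small wrapper script. A minimal sketch: the remote names `gdrive:` and `box:` and the helper `copy_cmd` are hypothetical, and the comments paraphrase what each rclone flag does.

```python
# Sketch: the copy command above as an argv list. "gdrive:" and "box:" are
# hypothetical remote names; copy_cmd is an illustrative helper, not rclone API.
import shlex

COPY_FLAGS = [
    "--transfers", "25",   # number of file transfers to run in parallel
    "--fast-list",         # recursive listing where supported: fewer calls, more memory
    "--ignore-checksum",   # skip the post-copy checksum check
    "--size-only",         # skip files based on size only, not modtime or checksum
    "--check-first",       # do all the checks before starting transfers
]


def copy_cmd(src: str, dst: str, extra: tuple[str, ...] = ()) -> list[str]:
    """Build `rclone copy SRC DST` with the flags above plus any extras."""
    return ["rclone", "copy", src, dst, *COPY_FLAGS, *extra]


if __name__ == "__main__":
    # e.g. add ("--tpslimit", "12") when moving lots of small files to box.
    cmd = copy_cmd("gdrive:media", "box:media", ("--tpslimit", "12"))
    print(shlex.join(cmd))  # run with subprocess.run(cmd, check=True) once set up
```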

Nice spreadsheet. It will be great to keep it up to date; it saves me periodically checking the storage market.

I am using Storj and deep glacier.

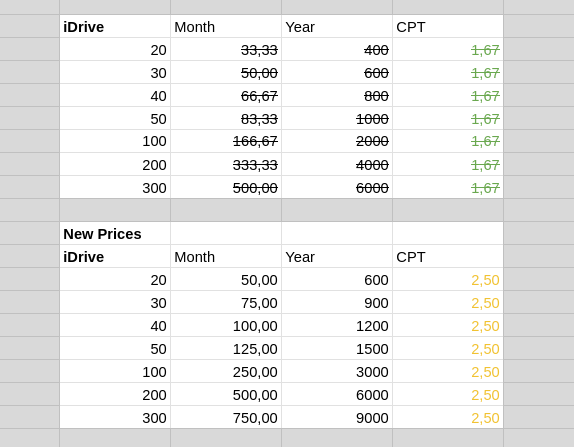

iDrive changed the price model

That is sad. New prices are expensive -,-
