I tried the new multithreaded OpenChunkWriter functionality, and it has very high memory consumption when the chunk size is big. Instead of loading the whole chunk into the memory pool, why not upload these chunks through streams, which would fix the memory usage?

that has not been my experience.

perhaps, use the help and support template, answer all the questions?

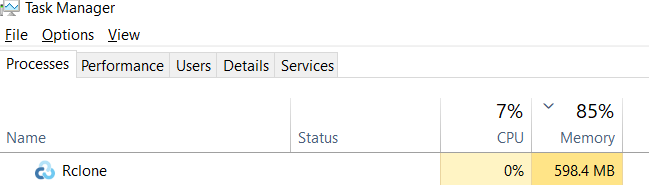

as it happens, right now, my backup script is uploading a 6.45GiB veeam backup file and rclone is only consuming 600MiB of memory

DEBUG : ABJ_SERVER02_CDRIVE_1/server02/ABJ_SERVER02_CDRIVE - server02D2023-10-31T084316_3537.vib: open chunk writer: started multipart upload: 09e0306e-b254-4f9d-8698-0539bad20e8a

Starting multi-thread copy with 52 chunks of size 128Mi with 4 parallel streams

128MiB x 4 = 512MiB of chunk buffers, which accounts for most of the 600MiB
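For anyone wanting to check the numbers, here is a rough sketch of the arithmetic. It assumes each parallel stream holds exactly one full chunk in memory and ignores any other overhead; the function names are made up for illustration and are not part of rclone.

```python
import math

MIB = 1024 ** 2
GIB = 1024 ** 3


def chunk_count(file_size: int, chunk_size: int) -> int:
    """Number of parts needed to cover file_size bytes."""
    return math.ceil(file_size / chunk_size)


def buffered_bytes(chunk_size: int, streams: int) -> int:
    """Bytes held if every active stream buffers one whole chunk (assumption)."""
    return chunk_size * streams


file_size = int(6.45 * GIB)  # rounded size quoted in the post
print(chunk_count(file_size, 128 * MIB))     # 52, matching the log line
print(buffered_bytes(128 * MIB, 4) // MIB)   # 512
print(buffered_bytes(512 * MIB, 4) // MIB)   # 2048
```

Under that assumption, memory grows linearly with both the chunk size and the number of streams, regardless of the file size.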


I tested with a 512 MB chunk size and an upload concurrency of 4, and it consumed around 2 GB. The problem is that all the split parts are loaded into the memory pool, instead of seeking the file descriptor to the byte offset of each chunk and uploading a readable stream directly. For a small chunk size it does not matter, but for larger chunk sizes the current approach is very inefficient. Disk I/O will be slow when uploading chunks through streams if you don't have an NVMe SSD, but most people use NVMe nowadays, so it should improve performance.
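To make the idea concrete, here is a minimal sketch of a reader that exposes one chunk of a file as a stream, so only a small block is in memory at a time. This is not how rclone implements it; `SectionReader` is a hypothetical name, and `os.pread` is only available on Unix-like systems.

```python
import io
import os


class SectionReader(io.RawIOBase):
    """Read-only stream over bytes [offset, offset + size) of an open file.

    os.pread reads at an explicit position, so several readers can share
    one file descriptor without disturbing each other (Unix only).
    """

    def __init__(self, fd: int, offset: int, size: int):
        super().__init__()
        self._fd = fd
        self._pos = offset
        self._end = offset + size

    def readable(self) -> bool:
        return True

    def readinto(self, buf) -> int:
        # Never read past the end of this chunk.
        want = min(len(buf), self._end - self._pos)
        if want <= 0:
            return 0
        data = os.pread(self._fd, want, self._pos)
        buf[:len(data)] = data
        self._pos += len(data)
        return len(data)


def chunk_offsets(file_size: int, chunk_size: int):
    """Yield (offset, size) for each chunk; the last one may be shorter."""
    for offset in range(0, file_size, chunk_size):
        yield offset, min(chunk_size, file_size - offset)
```

An uploader would hand each `SectionReader` to its HTTP client and read it in small blocks. A real implementation would also need a way to rewind the reader so a failed part can be retried.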

ok, what you first wrote was loading whole file in memory and i demonstrated, that was not correct.

i see that you have re-edited your original post.

now, i understand what you mean

imho, that is not correct, especially on servers.

most times, the hard limit is a slow internet connection, so not sure the storage type would matter too much.

the backup server i am using has only 8GiB of memory, 128MB with 4 does saturate the connection.

so not sure the advantage to 512MB with 4

but the idea of reading the chunks direct from disk, and not caching in memory is something to discuss.

i am sure ncw will stop by to comment.

NextCloud add parallel chunks upload by devnoname120 · Pull Request #6179 · rclone/rclone (github.com)

Someone already implemented what I was describing in my comment. I wonder why it was not included in rclone.

If you think it's useful, feel free to submit a PR.

I'd rather just use a machine with enough memory, as staging to disk seems wasteful. If I have the money to buy an SSD or NVMe, why not just grab a little more RAM?

That is also true, but I will try to implement this in the multithread interface and see if the improvements are worth adding to rclone.

Check out this as well:

Reduce RAM usage by relying on temp files · Issue #7350 · rclone/rclone (github.com)

As someone asked about this before.

That GitHub issue has a beta to test. It may give improvements but depends on which backend you are using.

I just tested fix-7350-multithread-memory and it works very well. I used an upload concurrency of 4 and a 512 MB part size, and I am getting around 15-20 MB of memory usage, which is a huge improvement.

Great! Which backends are you copying from and to?

I enabled this in azureblob.
