What is the problem you are having with rclone?

Hi everyone! I am trying to copy a huge number of files from one Google Workspace Shared Drive to another.

I have done this in the past and it normally works. However, I am seeing strange behavior with Google Sheets, Docs, Slides, and similar files.

Sorry for redacting some information; I had to because it is confidential.

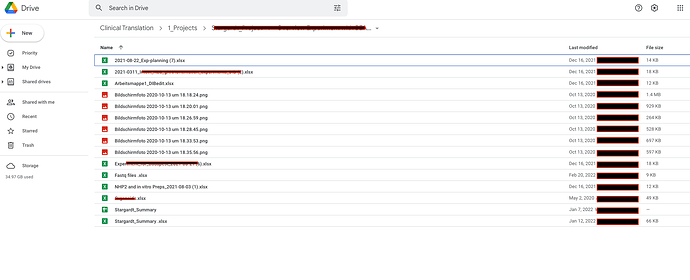

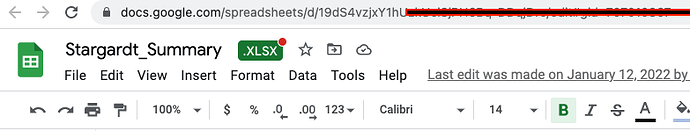

This is the web browser view of one of the folders:

Notice the two "Stargardt_Summary" files at the very bottom: one with the Excel icon and one with the Google Sheets icon. One of them shows "-" as its file size.

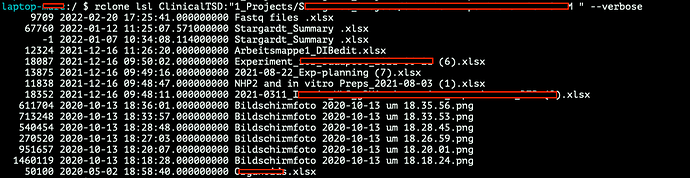

This is what I see with `rclone lsl`:

Notice that both have identical names, and one of them has a size of "-1".

I don't understand what this means. Does anybody know?

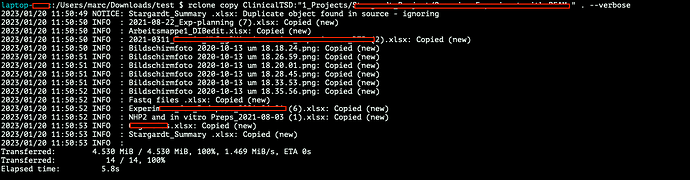

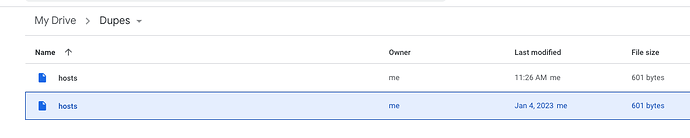

When I try to copy the whole folder with `rclone copy`, this is what I get:

Notice the rclone "NOTICE" message saying the file is a duplicate. I am not sure whether that is actually the case.

The problem is that I have to move a huge amount of data (maybe 100k+ files and folders) from one Shared Drive to another, and I cannot micromanage this. I am afraid there might be some inconsistencies.
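Since checking 100k items by hand is not practical, one way to spot these cases in bulk is to audit a recursive listing. The following is a minimal Go sketch, not an rclone feature. It assumes the JSON produced by `rclone lsjson -R` (an array of objects with `Path`, `Size` and `IsDir` fields), and it assumes Google-native documents show up with a size of -1, as they do in the `lsl` output above. `audit` is a hypothetical helper name. The sketch reports every file path that is listed more than once and every file with a negative size.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"os"
	"sort"
)

// entry is the subset of `rclone lsjson` fields this sketch relies on (assumed).
type entry struct {
	Path  string `json:"Path"`
	Size  int64  `json:"Size"`
	IsDir bool   `json:"IsDir"`
}

// report collects the suspicious entries found by audit.
type report struct {
	Duplicates  map[string]int // path -> times listed (only paths listed more than once)
	UnknownSize []string       // files with a negative size (e.g. -1), sorted
}

// audit decodes a JSON array of listing entries and flags repeated file paths
// and files with a negative (unknown) size. Directories are ignored.
func audit(data []byte) (report, error) {
	var entries []entry
	if err := json.Unmarshal(data, &entries); err != nil {
		return report{}, err
	}
	counts := make(map[string]int)
	rep := report{Duplicates: make(map[string]int)}
	for _, e := range entries {
		if e.IsDir {
			continue
		}
		counts[e.Path]++
		if e.Size < 0 {
			rep.UnknownSize = append(rep.UnknownSize, e.Path)
		}
	}
	for path, n := range counts {
		if n > 1 {
			rep.Duplicates[path] = n
		}
	}
	sort.Strings(rep.UnknownSize)
	return rep, nil
}

func main() {
	// Read the whole listing from stdin, e.g. piped from `rclone lsjson -R`.
	data, err := io.ReadAll(os.Stdin)
	if err != nil {
		fmt.Fprintln(os.Stderr, "read error:", err)
		os.Exit(1)
	}
	rep, err := audit(data)
	if err != nil {
		fmt.Fprintln(os.Stderr, "decode error:", err)
		os.Exit(1)
	}
	// Print duplicates in a stable (sorted) order.
	paths := make([]string, 0, len(rep.Duplicates))
	for p := range rep.Duplicates {
		paths = append(paths, p)
	}
	sort.Strings(paths)
	for _, p := range paths {
		fmt.Printf("DUPLICATE x%d: %s\n", rep.Duplicates[p], p)
	}
	for _, p := range rep.UnknownSize {
		fmt.Printf("SIZE -1: %s\n", p)
	}
}
```

For example, `rclone lsjson -R ClinicalTSD:"1_Projects/REDACTED NAME" | go run audit.go` (where `audit.go` is a hypothetical file holding the sketch) would print one line per suspicious entry, giving a short list to check instead of the whole drive.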

Can anyone please help?

Thanks in advance!!

Run the command 'rclone version' and share the full output of the command.

rclone v1.61.1

- os/version: darwin 11.6.7 (64 bit)

- os/kernel: 20.6.0 (x86_64)

- os/type: darwin

- os/arch: amd64

- go/version: go1.19.4

- go/linking: dynamic

- go/tags: none

Which cloud storage system are you using? (eg Google Drive)

Google Drive

The command you were trying to run (eg rclone copy /tmp remote:tmp)

rclone copy ClinicalTSD:"1_Projects/REDACTED NAME" . --verbose

The rclone config contents with secrets removed.

[ClinicalTSD]

type = drive

client_id = {REDACTED}

client_secret = {REDACTED}

scope = drive

token = {"access_token":"{REDACTED}}

team_drive = {REDACTED}

root_folder_id =

A log from the command with the -vv flag

Sorry, I had to redact the log, so I am sharing it as an image.