What is the problem you are having with rclone?

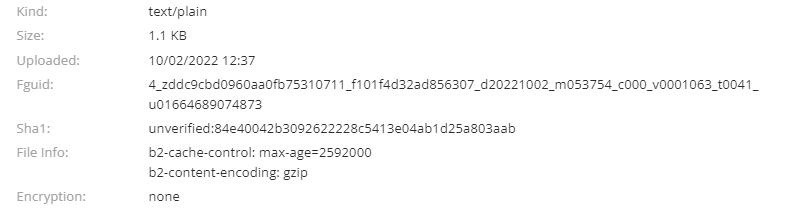

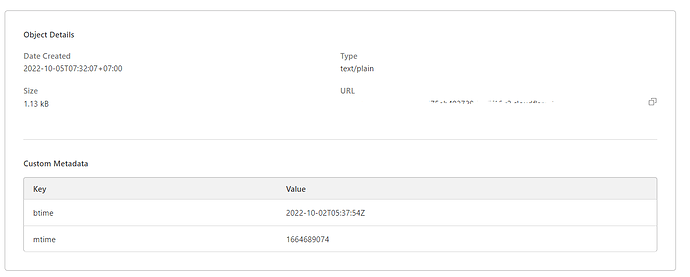

I need the `Content-Encoding: gzip` header to be preserved when syncing from Backblaze B2 to Cloudflare R2, but the `--metadata` flag doesn't carry it over.

The command you were trying to run

```
rclone sync b2:b2test/a/1.txt r2:r2test/data --metadata --no-gzip-encoding
```

Run the command 'rclone version' and share the full output of the command.

```
rclone v1.59.2
- os/version: Microsoft Windows 10 Pro 21H2 (64 bit)
- os/kernel: 10.0.19044.1526 (x86_64)
- os/type: windows
- os/arch: amd64
- go/version: go1.18.6
- go/linking: static
- go/tags: cmount
```

Which cloud storage system are you using?

Backblaze B2 (source) and Cloudflare R2 (destination, configured as an S3 remote).

A log from the command with the -vv flag

```
rclone sync b2:b2test/a/1.txt r2:r2test/data --metadata --no-gzip-encoding -vv

2022/10/04 20:48:25 DEBUG : rclone: Version "v1.59.2" starting with parameters ["rclone" "sync" "b2:b2test/a/1.txt" "r2:r2test/data" "--metadata" "--no-gzip-encoding" "-vv"]
2022/10/04 20:48:25 DEBUG : Creating backend with remote "b2:b2test/a/1.txt"
2022/10/04 20:48:25 DEBUG : Using config file from "C:\\Users\\BM\\AppData\\Roaming\\rclone\\rclone.conf"
2022/10/04 20:48:26 DEBUG : fs cache: adding new entry for parent of "b2:b2test/a/1.txt", "b2:b2test/a"
2022/10/04 20:48:26 DEBUG : Creating backend with remote "r2:r2test/data"
2022/10/04 20:48:27 DEBUG : 1.txt: Need to transfer - File not found at Destination
2022/10/04 20:48:30 INFO : 1.txt: Copied (new)
2022/10/04 20:48:30 INFO :
Transferred: 1.104 KiB / 1.104 KiB, 100%, 568 B/s, ETA 0s
Transferred: 1 / 1, 100%
Elapsed time: 4.8s
2022/10/04 20:48:30 DEBUG : 9 go routines active
```
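One way to narrow down where the header is lost is to compare the metadata rclone reports for the object on each side. The sketch below is a minimal helper, not an official tool. It assumes `rclone` is on `PATH` and that `rclone lsjson --stat -M <path>` prints a single JSON object with a `Metadata` map for backends that expose metadata. It also assumes the key is the lowercase `content-encoding` and that the copied file ended up at `r2:r2test/data/1.txt` (the source file name placed under the sync target). The function names `lsjson_stat` and `content_encoding` are hypothetical.

```python
import json
import subprocess


def lsjson_stat(remote_path: str) -> str:
    """Return the raw JSON printed by `rclone lsjson --stat -M` for one object.

    Assumes rclone is on PATH; raises CalledProcessError if rclone fails.
    """
    result = subprocess.run(
        ["rclone", "lsjson", "--stat", "-M", remote_path],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


def content_encoding(raw_lsjson: str):
    """Extract the content-encoding value from lsjson --stat output.

    Returns None when rclone reported no Metadata map (absent or null)
    or the map has no "content-encoding" key (assumed lowercase).
    """
    item = json.loads(raw_lsjson)
    metadata = item.get("Metadata") or {}
    return metadata.get("content-encoding")
```

For example, compare `content_encoding(lsjson_stat("b2:b2test/a/1.txt"))` with `content_encoding(lsjson_stat("r2:r2test/data/1.txt"))`. If the B2 side already returns `None`, the header may not be read from B2 at all, rather than being dropped on upload to R2.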

rclone.conf

```
[b2]
type = b2
account = zzzzzz
key = zzzzzz

[r2]
type = s3
provider = Cloudflare
access_key_id = zzzzzz
secret_access_key = zzzzzz
endpoint = https://zzzzzz.r2.cloudflarestorage.com
acl = private
```